Compare commits

merge into: building_data_management_systems.Xuanzhou.2024Fall.DaSE:master

building_data_management_systems.Xuanzhou.2024Fall.DaSE:ckx

building_data_management_systems.Xuanzhou.2024Fall.DaSE:main

building_data_management_systems.Xuanzhou.2024Fall.DaSE:master

building_data_management_systems.Xuanzhou.2024Fall.DaSE:pzy

pull from: building_data_management_systems.Xuanzhou.2024Fall.DaSE:main

building_data_management_systems.Xuanzhou.2024Fall.DaSE:ckx

building_data_management_systems.Xuanzhou.2024Fall.DaSE:main

building_data_management_systems.Xuanzhou.2024Fall.DaSE:master

building_data_management_systems.Xuanzhou.2024Fall.DaSE:pzy

528 changed files with 3524 additions and 143588 deletions

Split View

Diff Options

-

+102 -0.github/workflows/build.yml

-

+2 -0.gitignore

-

+1 -1.gitmodules

-

+17 -19CMakeLists.txt

-

+31 -0CONTRIBUTING.md

-

+3 -246README.md

-

+0 -16TODO

-

+0 -5UseGuaide.txt

-

+8 -6benchmarks/db_bench.cc

-

+4 -0benchmarks/db_bench_log.cc

-

+4 -0benchmarks/db_bench_sqlite3.cc

-

+4 -0benchmarks/db_bench_tree_db.cc

-

+4 -0db/autocompact_test.cc

-

+6 -15db/builder.cc

-

+5 -2db/builder.h

-

+5 -2db/c.cc

-

+4 -0db/c_test.c

-

+1 -2db/corruption_test.cc

-

+1616 -1759db/db_impl.cc

-

+226 -253db/db_impl.h

-

+4 -24db/db_test.cc

-

+2 -11db/filename.cc

-

+1 -7db/filename.h

-

+0 -4db/log_test.cc

-

+4 -6db/repair.cc

-

+0 -20db/vlog_converter.cc

-

+0 -19db/vlog_converter.h

-

+0 -33db/vlog_manager.cc

-

+0 -61db/vlog_manager.h

-

+0 -58db/vlog_reader.cc

-

+0 -25db/vlog_reader.h

-

+0 -26db/vlog_writer.cc

-

+0 -26db/vlog_writer.h

-

+209 -168db/write_batch.cc

-

+1 -1db/write_batch_internal.h

-

+0 -45draw.py

-

+0 -50examples/GCtest.cc

-

+0 -28examples/ValueConvertTest.cc

-

+0 -173examples/WiscKeyTest_1.cc

-

+0 -81examples/WiscKeyTest_1.h

-

+0 -76examples/iterator_test.cc

-

+0 -43examples/kv_sep_test.cc

-

+0 -23examples/main.cc

-

+0 -25examples/test_1.cc

-

+0 -3gitpush.sh

-

+0 -8helpers/memenv/memenv.cc

-

BINimages/1.png

-

BINimages/10.png

-

BINimages/11.png

-

BINimages/12.webp

-

BINimages/13.png

-

BINimages/14.png

-

BINimages/15.png

-

BINimages/16.png

-

BINimages/2.png

-

BINimages/3.png

-

BINimages/4.png

-

BINimages/5.png

-

BINimages/6.png

-

BINimages/7.png

-

BINimages/8.png

-

BINimages/9.png

-

BINimages/GC_test.png

-

BINimages/c1.1.png

-

BINimages/c1.2.png

-

BINimages/c1.png

-

BINimages/c10.png

-

BINimages/c2.png

-

BINimages/c3.png

-

BINimages/c4.png

-

BINimages/c5.png

-

BINimages/c6.png

-

BINimages/c7.png

-

BINimages/c8.png

-

BINimages/c9.png

-

BINimages/field_test.png

-

BINimages/iterate_test.png

-

BINimages/kv_sep_test.png

-

+188 -169include/leveldb/db.h

-

+0 -22include/leveldb/env.h

-

+6 -19include/leveldb/options.h

-

+3 -0include/leveldb/slice.h

-

+94 -93include/leveldb/table_builder.h

-

+86 -83include/leveldb/write_batch.h

-

+0 -0prefetch.txt

-

+63 -0table/blob_file.cc

-

+34 -0table/blob_file.h

-

+345 -280table/table_builder.cc

-

+74 -0test/db_test2.cc

-

+26 -25test/field_test.cc

-

+119 -0test/kv_seperate_test.cc

-

+114 -0test/ttl_test.cc

-

+0 -5third_party/benchmark/.clang-format

-

+0 -7third_party/benchmark/.clang-tidy

-

+0 -32third_party/benchmark/.github/ISSUE_TEMPLATE/bug_report.md

-

+0 -20third_party/benchmark/.github/ISSUE_TEMPLATE/feature_request.md

-

+0 -13third_party/benchmark/.github/install_bazel.sh

-

+0 -27third_party/benchmark/.github/libcxx-setup.sh

-

+0 -35third_party/benchmark/.github/workflows/bazel.yml

-

+0 -46third_party/benchmark/.github/workflows/build-and-test-min-cmake.yml

+ 102

- 0

.github/workflows/build.yml

View File

| @ -0,0 +1,102 @@ | |||

| # Copyright 2021 The LevelDB Authors. All rights reserved. | |||

| # Use of this source code is governed by a BSD-style license that can be | |||

| # found in the LICENSE file. See the AUTHORS file for names of contributors. | |||

| name: ci | |||

| on: [push, pull_request] | |||

| permissions: | |||

| contents: read | |||

| jobs: | |||

| build-and-test: | |||

| name: >- | |||

| CI | |||

| ${{ matrix.os }} | |||

| ${{ matrix.compiler }} | |||

| ${{ matrix.optimized && 'release' || 'debug' }} | |||

| runs-on: ${{ matrix.os }} | |||

| strategy: | |||

| fail-fast: false | |||

| matrix: | |||

| compiler: [clang, gcc, msvc] | |||

| os: [ubuntu-latest, macos-latest, windows-latest] | |||

| optimized: [true, false] | |||

| exclude: | |||

| # MSVC only works on Windows. | |||

| - os: ubuntu-latest | |||

| compiler: msvc | |||

| - os: macos-latest | |||

| compiler: msvc | |||

| # Not testing with GCC on macOS. | |||

| - os: macos-latest | |||

| compiler: gcc | |||

| # Only testing with MSVC on Windows. | |||

| - os: windows-latest | |||

| compiler: clang | |||

| - os: windows-latest | |||

| compiler: gcc | |||

| include: | |||

| - compiler: clang | |||

| CC: clang | |||

| CXX: clang++ | |||

| - compiler: gcc | |||

| CC: gcc | |||

| CXX: g++ | |||

| - compiler: msvc | |||

| CC: | |||

| CXX: | |||

| env: | |||

| CMAKE_BUILD_DIR: ${{ github.workspace }}/build | |||

| CMAKE_BUILD_TYPE: ${{ matrix.optimized && 'RelWithDebInfo' || 'Debug' }} | |||

| CC: ${{ matrix.CC }} | |||

| CXX: ${{ matrix.CXX }} | |||

| BINARY_SUFFIX: ${{ startsWith(matrix.os, 'windows') && '.exe' || '' }} | |||

| BINARY_PATH: >- | |||

| ${{ format( | |||

| startsWith(matrix.os, 'windows') && '{0}\build\{1}\' || '{0}/build/', | |||

| github.workspace, | |||

| matrix.optimized && 'RelWithDebInfo' || 'Debug') }} | |||

| steps: | |||

| - uses: actions/checkout@v2 | |||

| with: | |||

| submodules: true | |||

| - name: Install dependencies on Linux | |||

| if: ${{ runner.os == 'Linux' }} | |||

| # libgoogle-perftools-dev is temporarily removed from the package list | |||

| # because it is currently broken on GitHub's Ubuntu 22.04. | |||

| run: | | |||

| sudo apt-get update | |||

| sudo apt-get install libkyotocabinet-dev libsnappy-dev libsqlite3-dev | |||

| - name: Generate build config | |||

| run: >- | |||

| cmake -S "${{ github.workspace }}" -B "${{ env.CMAKE_BUILD_DIR }}" | |||

| -DCMAKE_BUILD_TYPE=${{ env.CMAKE_BUILD_TYPE }} | |||

| -DCMAKE_INSTALL_PREFIX=${{ runner.temp }}/install_test/ | |||

| - name: Build | |||

| run: >- | |||

| cmake --build "${{ env.CMAKE_BUILD_DIR }}" | |||

| --config "${{ env.CMAKE_BUILD_TYPE }}" | |||

| - name: Run Tests | |||

| working-directory: ${{ github.workspace }}/build | |||

| run: ctest -C "${{ env.CMAKE_BUILD_TYPE }}" --verbose | |||

| - name: Run LevelDB Benchmarks | |||

| run: ${{ env.BINARY_PATH }}db_bench${{ env.BINARY_SUFFIX }} | |||

| - name: Run SQLite Benchmarks | |||

| if: ${{ runner.os != 'Windows' }} | |||

| run: ${{ env.BINARY_PATH }}db_bench_sqlite3${{ env.BINARY_SUFFIX }} | |||

| - name: Run Kyoto Cabinet Benchmarks | |||

| if: ${{ runner.os == 'Linux' && matrix.compiler == 'clang' }} | |||

| run: ${{ env.BINARY_PATH }}db_bench_tree_db${{ env.BINARY_SUFFIX }} | |||

| - name: Test CMake installation | |||

| run: cmake --build "${{ env.CMAKE_BUILD_DIR }}" --target install | |||

+ 2

- 0

.gitignore

View File

+ 1

- 1

.gitmodules

View File

+ 17

- 19

CMakeLists.txt

View File

+ 31

- 0

CONTRIBUTING.md

View File

| @ -0,0 +1,31 @@ | |||

| # How to Contribute | |||

| We'd love to accept your patches and contributions to this project. There are | |||

| just a few small guidelines you need to follow. | |||

| ## Contributor License Agreement | |||

| Contributions to this project must be accompanied by a Contributor License | |||

| Agreement. You (or your employer) retain the copyright to your contribution; | |||

| this simply gives us permission to use and redistribute your contributions as | |||

| part of the project. Head over to <https://cla.developers.google.com/> to see | |||

| your current agreements on file or to sign a new one. | |||

| You generally only need to submit a CLA once, so if you've already submitted one | |||

| (even if it was for a different project), you probably don't need to do it | |||

| again. | |||

| ## Code Reviews | |||

| All submissions, including submissions by project members, require review. We | |||

| use GitHub pull requests for this purpose. Consult | |||

| [GitHub Help](https://help.github.com/articles/about-pull-requests/) for more | |||

| information on using pull requests. | |||

| See [the README](README.md#contributing-to-the-leveldb-project) for areas | |||

| where we are likely to accept external contributions. | |||

| ## Community Guidelines | |||

| This project follows [Google's Open Source Community | |||

| Guidelines](https://opensource.google/conduct/). | |||

+ 3

- 246

README.md

View File

| @ -1,252 +1,9 @@ | |||

| LevelDB is a fast key-value storage library written at Google that provides an ordered mapping from string keys to string values. | |||

| 实验报告请查看以下文档: | |||

| **本仓库提供TTL基本的测试用例** | |||

| 我们的分工已在代码中以注释的形式体现,如:ckx、pzy。 | |||

| - [实验报告](实验报告.md) | |||

| > **This repository is receiving very limited maintenance. We will only review the following types of changes.** | |||

| > | |||

| > * Fixes for critical bugs, such as data loss or memory corruption | |||

| > * Changes absolutely needed by internally supported leveldb clients. These typically fix breakage introduced by a language/standard library/OS update | |||

| [](https://github.com/google/leveldb/actions/workflows/build.yml) | |||

| Authors: Sanjay Ghemawat (sanjay@google.com) and Jeff Dean (jeff@google.com) | |||

| # Features | |||

| * Keys and values are arbitrary byte arrays. | |||

| * Data is stored sorted by key. | |||

| * Callers can provide a custom comparison function to override the sort order. | |||

| * The basic operations are `Put(key,value)`, `Get(key)`, `Delete(key)`. | |||

| * Multiple changes can be made in one atomic batch. | |||

| * Users can create a transient snapshot to get a consistent view of data. | |||

| * Forward and backward iteration is supported over the data. | |||

| * Data is automatically compressed using the [Snappy compression library](https://google.github.io/snappy/), but [Zstd compression](https://facebook.github.io/zstd/) is also supported. | |||

| * External activity (file system operations etc.) is relayed through a virtual interface so users can customize the operating system interactions. | |||

| # Documentation | |||

| [LevelDB library documentation](https://github.com/google/leveldb/blob/main/doc/index.md) is online and bundled with the source code. | |||

| # Limitations | |||

| * This is not a SQL database. It does not have a relational data model, it does not support SQL queries, and it has no support for indexes. | |||

| * Only a single process (possibly multi-threaded) can access a particular database at a time. | |||

| * There is no client-server support builtin to the library. An application that needs such support will have to wrap their own server around the library. | |||

| # Getting the Source | |||

| ```bash | |||

| git clone --recurse-submodules https://github.com/google/leveldb.git | |||

| ``` | |||

| # Building | |||

| This project supports [CMake](https://cmake.org/) out of the box. | |||

| ### Build for POSIX | |||

| Quick start: | |||

| 克隆代码: | |||

| ```bash | |||

| mkdir -p build && cd build | |||

| cmake -DCMAKE_BUILD_TYPE=Release .. && cmake --build . | |||

| git clone --recurse-submodules https://gitea.shuishan.net.cn/building_data_management_systems.Xuanzhou.2024Fall.DaSE/leveldb_base.git | |||

| ``` | |||

| ### Building for Windows | |||

| First generate the Visual Studio 2017 project/solution files: | |||

| ```cmd | |||

| mkdir build | |||

| cd build | |||

| cmake -G "Visual Studio 15" .. | |||

| ``` | |||

| The default default will build for x86. For 64-bit run: | |||

| ```cmd | |||

| cmake -G "Visual Studio 15 Win64" .. | |||

| ``` | |||

| To compile the Windows solution from the command-line: | |||

| ```cmd | |||

| devenv /build Debug leveldb.sln | |||

| ``` | |||

| or open leveldb.sln in Visual Studio and build from within. | |||

| Please see the CMake documentation and `CMakeLists.txt` for more advanced usage. | |||

| # Contributing to the leveldb Project | |||

| > **This repository is receiving very limited maintenance. We will only review the following types of changes.** | |||

| > | |||

| > * Bug fixes | |||

| > * Changes absolutely needed by internally supported leveldb clients. These typically fix breakage introduced by a language/standard library/OS update | |||

| The leveldb project welcomes contributions. leveldb's primary goal is to be | |||

| a reliable and fast key/value store. Changes that are in line with the | |||

| features/limitations outlined above, and meet the requirements below, | |||

| will be considered. | |||

| Contribution requirements: | |||

| 1. **Tested platforms only**. We _generally_ will only accept changes for | |||

| platforms that are compiled and tested. This means POSIX (for Linux and | |||

| macOS) or Windows. Very small changes will sometimes be accepted, but | |||

| consider that more of an exception than the rule. | |||

| 2. **Stable API**. We strive very hard to maintain a stable API. Changes that | |||

| require changes for projects using leveldb _might_ be rejected without | |||

| sufficient benefit to the project. | |||

| 3. **Tests**: All changes must be accompanied by a new (or changed) test, or | |||

| a sufficient explanation as to why a new (or changed) test is not required. | |||

| 4. **Consistent Style**: This project conforms to the | |||

| [Google C++ Style Guide](https://google.github.io/styleguide/cppguide.html). | |||

| To ensure your changes are properly formatted please run: | |||

| ``` | |||

| clang-format -i --style=file <file> | |||

| ``` | |||

| We are unlikely to accept contributions to the build configuration files, such | |||

| as `CMakeLists.txt`. We are focused on maintaining a build configuration that | |||

| allows us to test that the project works in a few supported configurations | |||

| inside Google. We are not currently interested in supporting other requirements, | |||

| such as different operating systems, compilers, or build systems. | |||

| ## Submitting a Pull Request | |||

| Before any pull request will be accepted the author must first sign a | |||

| Contributor License Agreement (CLA) at https://cla.developers.google.com/. | |||

| In order to keep the commit timeline linear | |||

| [squash](https://git-scm.com/book/en/v2/Git-Tools-Rewriting-History#Squashing-Commits) | |||

| your changes down to a single commit and [rebase](https://git-scm.com/docs/git-rebase) | |||

| on google/leveldb/main. This keeps the commit timeline linear and more easily sync'ed | |||

| with the internal repository at Google. More information at GitHub's | |||

| [About Git rebase](https://help.github.com/articles/about-git-rebase/) page. | |||

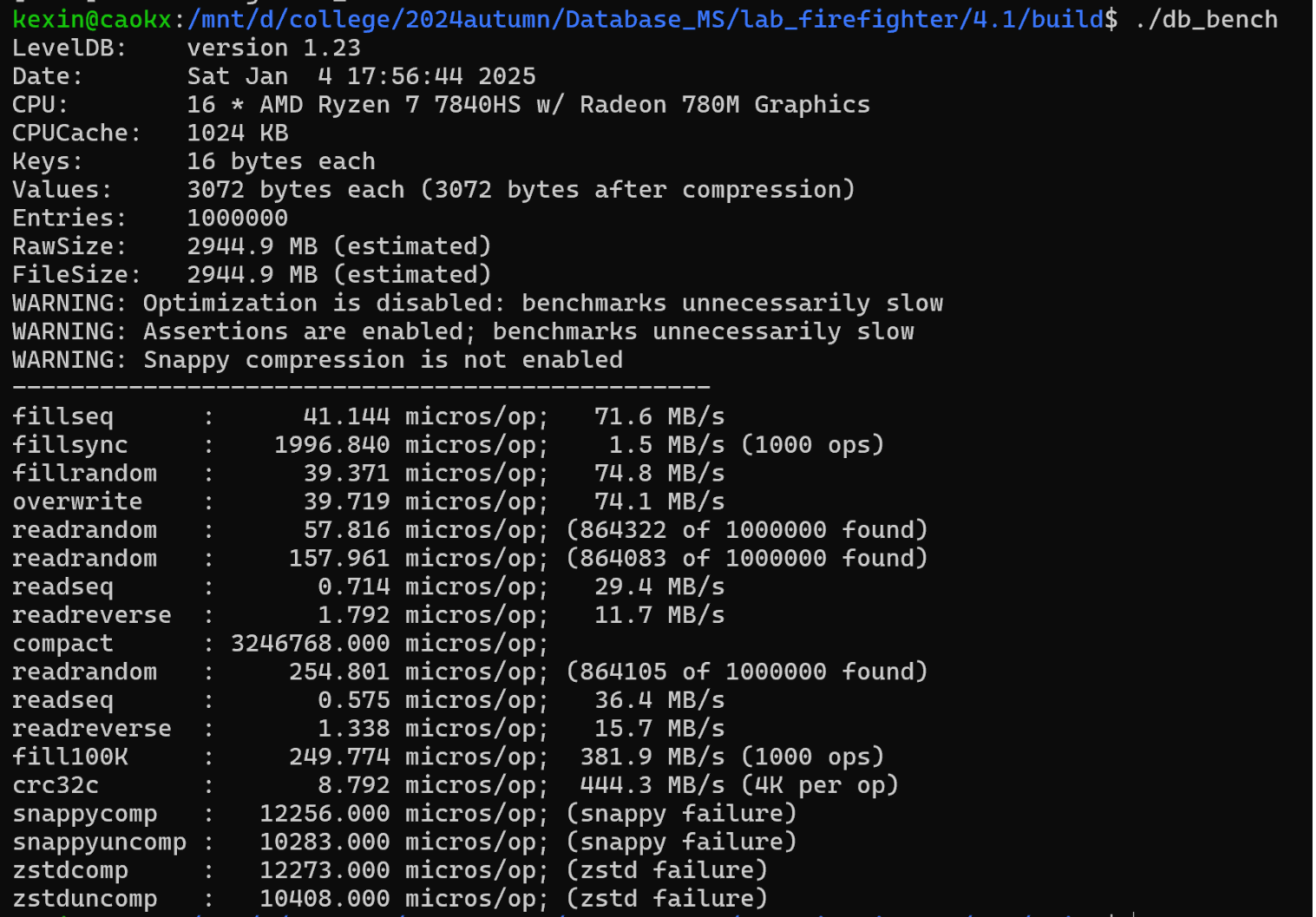

| # Performance | |||

| Here is a performance report (with explanations) from the run of the | |||

| included db_bench program. The results are somewhat noisy, but should | |||

| be enough to get a ballpark performance estimate. | |||

| ## Setup | |||

| We use a database with a million entries. Each entry has a 16 byte | |||

| key, and a 100 byte value. Values used by the benchmark compress to | |||

| about half their original size. | |||

| LevelDB: version 1.1 | |||

| Date: Sun May 1 12:11:26 2011 | |||

| CPU: 4 x Intel(R) Core(TM)2 Quad CPU Q6600 @ 2.40GHz | |||

| CPUCache: 4096 KB | |||

| Keys: 16 bytes each | |||

| Values: 100 bytes each (50 bytes after compression) | |||

| Entries: 1000000 | |||

| Raw Size: 110.6 MB (estimated) | |||

| File Size: 62.9 MB (estimated) | |||

| ## Write performance | |||

| The "fill" benchmarks create a brand new database, in either | |||

| sequential, or random order. The "fillsync" benchmark flushes data | |||

| from the operating system to the disk after every operation; the other | |||

| write operations leave the data sitting in the operating system buffer | |||

| cache for a while. The "overwrite" benchmark does random writes that | |||

| update existing keys in the database. | |||

| fillseq : 1.765 micros/op; 62.7 MB/s | |||

| fillsync : 268.409 micros/op; 0.4 MB/s (10000 ops) | |||

| fillrandom : 2.460 micros/op; 45.0 MB/s | |||

| overwrite : 2.380 micros/op; 46.5 MB/s | |||

| Each "op" above corresponds to a write of a single key/value pair. | |||

| I.e., a random write benchmark goes at approximately 400,000 writes per second. | |||

| Each "fillsync" operation costs much less (0.3 millisecond) | |||

| than a disk seek (typically 10 milliseconds). We suspect that this is | |||

| because the hard disk itself is buffering the update in its memory and | |||

| responding before the data has been written to the platter. This may | |||

| or may not be safe based on whether or not the hard disk has enough | |||

| power to save its memory in the event of a power failure. | |||

| ## Read performance | |||

| We list the performance of reading sequentially in both the forward | |||

| and reverse direction, and also the performance of a random lookup. | |||

| Note that the database created by the benchmark is quite small. | |||

| Therefore the report characterizes the performance of leveldb when the | |||

| working set fits in memory. The cost of reading a piece of data that | |||

| is not present in the operating system buffer cache will be dominated | |||

| by the one or two disk seeks needed to fetch the data from disk. | |||

| Write performance will be mostly unaffected by whether or not the | |||

| working set fits in memory. | |||

| readrandom : 16.677 micros/op; (approximately 60,000 reads per second) | |||

| readseq : 0.476 micros/op; 232.3 MB/s | |||

| readreverse : 0.724 micros/op; 152.9 MB/s | |||

| LevelDB compacts its underlying storage data in the background to | |||

| improve read performance. The results listed above were done | |||

| immediately after a lot of random writes. The results after | |||

| compactions (which are usually triggered automatically) are better. | |||

| readrandom : 11.602 micros/op; (approximately 85,000 reads per second) | |||

| readseq : 0.423 micros/op; 261.8 MB/s | |||

| readreverse : 0.663 micros/op; 166.9 MB/s | |||

| Some of the high cost of reads comes from repeated decompression of blocks | |||

| read from disk. If we supply enough cache to the leveldb so it can hold the | |||

| uncompressed blocks in memory, the read performance improves again: | |||

| readrandom : 9.775 micros/op; (approximately 100,000 reads per second before compaction) | |||

| readrandom : 5.215 micros/op; (approximately 190,000 reads per second after compaction) | |||

| ## Repository contents | |||

| See [doc/index.md](doc/index.md) for more explanation. See | |||

| [doc/impl.md](doc/impl.md) for a brief overview of the implementation. | |||

| The public interface is in include/leveldb/*.h. Callers should not include or | |||

| rely on the details of any other header files in this package. Those | |||

| internal APIs may be changed without warning. | |||

| Guide to header files: | |||

| * **include/leveldb/db.h**: Main interface to the DB: Start here. | |||

| * **include/leveldb/options.h**: Control over the behavior of an entire database, | |||

| and also control over the behavior of individual reads and writes. | |||

| * **include/leveldb/comparator.h**: Abstraction for user-specified comparison function. | |||

| If you want just bytewise comparison of keys, you can use the default | |||

| comparator, but clients can write their own comparator implementations if they | |||

| want custom ordering (e.g. to handle different character encodings, etc.). | |||

| * **include/leveldb/iterator.h**: Interface for iterating over data. You can get | |||

| an iterator from a DB object. | |||

| * **include/leveldb/write_batch.h**: Interface for atomically applying multiple | |||

| updates to a database. | |||

| * **include/leveldb/slice.h**: A simple module for maintaining a pointer and a | |||

| length into some other byte array. | |||

| * **include/leveldb/status.h**: Status is returned from many of the public interfaces | |||

| and is used to report success and various kinds of errors. | |||

| * **include/leveldb/env.h**: | |||

| Abstraction of the OS environment. A posix implementation of this interface is | |||

| in util/env_posix.cc. | |||

| * **include/leveldb/table.h, include/leveldb/table_builder.h**: Lower-level modules that most | |||

| clients probably won't use directly. | |||

+ 0

- 16

TODO

View File

+ 0

- 5

UseGuaide.txt

View File

| @ -1,5 +0,0 @@ | |||

| 运行新代码: | |||

| 1. 在examples/ 添加对应的测试文件 | |||

| 2. 在最外层CMakeLists加入新的测试文件的编译指令,代码参考: + leveldb_test("examples/main.cc") | |||

| 3. 进入build文件,重新编译,指令:"cmake -DCMAKE_BUILD_TYPE=Release .. && cmake --build ."。 | |||

| "cmake -DCMAKE_BUILD_TYPE=Debug .. && cmake --build ." | |||

+ 8

- 6

benchmarks/db_bench.cc

View File

+ 4

- 0

benchmarks/db_bench_log.cc

View File

+ 4

- 0

benchmarks/db_bench_sqlite3.cc

View File

+ 4

- 0

benchmarks/db_bench_tree_db.cc

View File

+ 4

- 0

db/autocompact_test.cc

View File

+ 6

- 15

db/builder.cc

View File

+ 5

- 2

db/builder.h

View File

+ 5

- 2

db/c.cc

View File

+ 4

- 0

db/c_test.c

View File

+ 1

- 2

db/corruption_test.cc

View File

+ 1616

- 1759

db/db_impl.cc

File diff suppressed because it is too large

View File

+ 226

- 253

db/db_impl.h

View File

| @ -1,253 +1,226 @@ | |||

| // Copyright (c) 2011 The LevelDB Authors. All rights reserved. | |||

| // Use of this source code is governed by a BSD-style license that can be | |||

| // found in the LICENSE file. See the AUTHORS file for names of contributors. | |||

| #ifndef STORAGE_LEVELDB_DB_DB_IMPL_H_ | |||

| #define STORAGE_LEVELDB_DB_DB_IMPL_H_ | |||

| #include <atomic> | |||

| #include <deque> | |||

| #include <set> | |||

| #include <string> | |||

| #include "db/dbformat.h" | |||

| #include "db/log_writer.h" | |||

| #include "db/vlog_writer.h" | |||

| #include "db/vlog_reader.h" | |||

| #include "db/vlog_manager.h" | |||

| #include "db/snapshot.h" | |||

| #include "db/vlog_converter.h" | |||

| #include "leveldb/db.h" | |||

| #include "leveldb/env.h" | |||

| #include "port/port.h" | |||

| #include "port/thread_annotations.h" | |||

| #include <thread> | |||

| #include <chrono> // 如果使用了 std::this_thread::sleep_for | |||

| namespace leveldb { | |||

| class MemTable; | |||

| class TableCache; | |||

| class Version; | |||

| class VersionEdit; | |||

| class VersionSet; | |||

| class DBImpl : public DB { | |||

| public: | |||

| DBImpl(const Options& options, const std::string& dbname); | |||

| DBImpl(const DBImpl&) = delete; | |||

| DBImpl& operator=(const DBImpl&) = delete; | |||

| ~DBImpl() override; | |||

| // Implementations of the DB interface | |||

| Status Put(const WriteOptions&, const Slice& key, | |||

| const Slice& value) override; | |||

| Status Delete(const WriteOptions&, const Slice& key) override; | |||

| Status Write(const WriteOptions& options, WriteBatch* updates) override; | |||

| Status Get(const ReadOptions& options, const Slice& key, | |||

| std::string* value) override; | |||

| std::vector<std::string> FindKeysByField(leveldb::DB* db, Field& field) override; | |||

| Iterator* NewIterator(const ReadOptions&) override; | |||

| const Snapshot* GetSnapshot() override; | |||

| void ReleaseSnapshot(const Snapshot* snapshot) override; | |||

| bool GetProperty(const Slice& property, std::string* value) override; | |||

| void GetApproximateSizes(const Range* range, int n, uint64_t* sizes) override; | |||

| void CompactRange(const Slice* begin, const Slice* end) override; | |||

| void StartBackgroundCleanupTask() { // 后台一个自动GC的线程 | |||

| std::thread([this]() { | |||

| while (!shutting_down_.load(std::memory_order_acquire)) { | |||

| vmanager_->CleanupInvalidVlogFiles(options_, dbname_); | |||

| std::this_thread::sleep_for(std::chrono::seconds(60)); // 每分钟检查一次 | |||

| } | |||

| }).detach(); | |||

| } | |||

| // Extra methods (for testing) that are not in the public DB interface | |||

| //to get the KVSepType | |||

| KVSepType GetKVSepType(); | |||

| Status FlushVlog(); | |||

| // Compact any files in the named level that overlap [*begin,*end] | |||

| void TEST_CompactRange(int level, const Slice* begin, const Slice* end); | |||

| // Force current memtable contents to be compacted. | |||

| Status TEST_CompactMemTable(); | |||

| // Return an internal iterator over the current state of the database. | |||

| // The keys of this iterator are internal keys (see format.h). | |||

| // The returned iterator should be deleted when no longer needed. | |||

| Iterator* TEST_NewInternalIterator(); | |||

| // Return the maximum overlapping data (in bytes) at next level for any | |||

| // file at a level >= 1. | |||

| int64_t TEST_MaxNextLevelOverlappingBytes(); | |||

| // Record a sample of bytes read at the specified internal key. | |||

| // Samples are taken approximately once every config::kReadBytesPeriod | |||

| // bytes. | |||

| void RecordReadSample(Slice key); | |||

| private: | |||

| friend class DB; | |||

| struct CompactionState; | |||

| struct Writer; | |||

| // Information for a manual compaction | |||

| struct ManualCompaction { | |||

| int level; | |||

| bool done; | |||

| const InternalKey* begin; // null means beginning of key range | |||

| const InternalKey* end; // null means end of key range | |||

| InternalKey tmp_storage; // Used to keep track of compaction progress | |||

| }; | |||

| // Per level compaction stats. stats_[level] stores the stats for | |||

| // compactions that produced data for the specified "level". | |||

| struct CompactionStats { | |||

| CompactionStats() : micros(0), bytes_read(0), bytes_written(0) {} | |||

| void Add(const CompactionStats& c) { | |||

| this->micros += c.micros; | |||

| this->bytes_read += c.bytes_read; | |||

| this->bytes_written += c.bytes_written; | |||

| } | |||

| int64_t micros; | |||

| int64_t bytes_read; | |||

| int64_t bytes_written; | |||

| }; | |||

| Iterator* NewInternalIterator(const ReadOptions&, | |||

| SequenceNumber* latest_snapshot, | |||

| uint32_t* seed); | |||

| Status NewDB(); | |||

| // Recover the descriptor from persistent storage. May do a significant | |||

| // amount of work to recover recently logged updates. Any changes to | |||

| // be made to the descriptor are added to *edit. | |||

| Status Recover(VersionEdit* edit, bool* save_manifest) | |||

| EXCLUSIVE_LOCKS_REQUIRED(mutex_); | |||

| void MaybeIgnoreError(Status* s) const; | |||

| // Delete any unneeded files and stale in-memory entries. | |||

| void RemoveObsoleteFiles() EXCLUSIVE_LOCKS_REQUIRED(mutex_); | |||

| // Compact the in-memory write buffer to disk. Switches to a new | |||

| // log-file/memtable and writes a new descriptor iff successful. | |||

| // Errors are recorded in bg_error_. | |||

| void CompactMemTable() EXCLUSIVE_LOCKS_REQUIRED(mutex_); | |||

| Status RecoverLogFile(uint64_t log_number, bool last_log, bool* save_manifest, | |||

| VersionEdit* edit, SequenceNumber* max_sequence) | |||

| EXCLUSIVE_LOCKS_REQUIRED(mutex_); | |||

| Status WriteLevel0Table(MemTable* mem, VersionEdit* edit, Version* base) | |||

| EXCLUSIVE_LOCKS_REQUIRED(mutex_); | |||

| Status MakeRoomForWrite(bool force /* compact even if there is room? */) | |||

| EXCLUSIVE_LOCKS_REQUIRED(mutex_); | |||

| WriteBatch* BuildBatchGroup(Writer** last_writer) | |||

| EXCLUSIVE_LOCKS_REQUIRED(mutex_); | |||

| void RecordBackgroundError(const Status& s); | |||

| void MaybeScheduleCompaction() EXCLUSIVE_LOCKS_REQUIRED(mutex_); | |||

| static void BGWork(void* db); | |||

| void BackgroundCall(); | |||

| void BackgroundCompaction() EXCLUSIVE_LOCKS_REQUIRED(mutex_); | |||

| void CleanupCompaction(CompactionState* compact) | |||

| EXCLUSIVE_LOCKS_REQUIRED(mutex_); | |||

| Status DoCompactionWork(CompactionState* compact) | |||

| EXCLUSIVE_LOCKS_REQUIRED(mutex_); | |||

| Status OpenCompactionOutputFile(CompactionState* compact); | |||

| Status FinishCompactionOutputFile(CompactionState* compact, Iterator* input); | |||

| Status InstallCompactionResults(CompactionState* compact) | |||

| EXCLUSIVE_LOCKS_REQUIRED(mutex_); | |||

| const Comparator* user_comparator() const { | |||

| return internal_comparator_.user_comparator(); | |||

| } | |||

| // Constant after construction | |||

| Env* const env_; | |||

| const InternalKeyComparator internal_comparator_; | |||

| const InternalFilterPolicy internal_filter_policy_; | |||

| const Options options_; // options_.comparator == &internal_comparator_ | |||

| const bool owns_info_log_; | |||

| const bool owns_cache_; | |||

| const std::string dbname_; | |||

| // table_cache_ provides its own synchronization | |||

| TableCache* const table_cache_; | |||

| // Lock over the persistent DB state. Non-null iff successfully acquired. | |||

| FileLock* db_lock_; | |||

| // State below is protected by mutex_ | |||

| port::Mutex mutex_; | |||

| std::mutex vlog_mutex_; // 用于保护 VLog 操作的互斥锁 | |||

| std::atomic<bool> shutting_down_; | |||

| port::CondVar background_work_finished_signal_ GUARDED_BY(mutex_); | |||

| MemTable* mem_; | |||

| MemTable* imm_ GUARDED_BY(mutex_); // Memtable being compacted | |||

| std::atomic<bool> has_imm_; // So bg thread can detect non-null imm_ | |||

| WritableFile* logfile_; | |||

| uint64_t logfile_number_ GUARDED_BY(mutex_); | |||

| log::Writer* log_; | |||

| //Add Defination of vlog files. | |||

| //TODO: Consider the Concurrency. | |||

| uint64_t vlogfile_number_; | |||

| uint64_t vlogfile_offset_; // 当前vlog_file的偏移 | |||

| WritableFile* vlogfile_; //写vlog_file的文件类 | |||

| vlog::VWriter* vlog_; | |||

| vlog::VlogManager* vmanager_; | |||

| vlog::VlogConverter* vconverter_; | |||

| uint32_t seed_ GUARDED_BY(mutex_); // For sampling. | |||

| // Queue of writers. | |||

| std::deque<Writer*> writers_ GUARDED_BY(mutex_); | |||

| WriteBatch* tmp_batch_ GUARDED_BY(mutex_); | |||

| SnapshotList snapshots_ GUARDED_BY(mutex_); | |||

| // Set of table files to protect from deletion because they are | |||

| // part of ongoing compactions. | |||

| std::set<uint64_t> pending_outputs_ GUARDED_BY(mutex_); | |||

| // Has a background compaction been scheduled or is running? | |||

| bool background_compaction_scheduled_ GUARDED_BY(mutex_); | |||

| ManualCompaction* manual_compaction_ GUARDED_BY(mutex_); | |||

| VersionSet* const versions_ GUARDED_BY(mutex_); | |||

| // Have we encountered a background error in paranoid mode? | |||

| Status bg_error_ GUARDED_BY(mutex_); | |||

| CompactionStats stats_[config::kNumLevels] GUARDED_BY(mutex_); | |||

| public: | |||

| Status WriteValueIntoVlog(const Slice& key, const Slice& val, char* buf, Slice& vptr); | |||

| Status ReadValueFromVlog(std::string* key, std::string* val, std::string* vptr); | |||

| bool IsDiskBusy(const std::string& device) ; | |||

| }; | |||

| // Sanitize db options. The caller should delete result.info_log if | |||

| // it is not equal to src.info_log. | |||

| Options SanitizeOptions(const std::string& db, | |||

| const InternalKeyComparator* icmp, | |||

| const InternalFilterPolicy* ipolicy, | |||

| const Options& src); | |||

| } // namespace leveldb | |||

| #endif // STORAGE_LEVELDB_DB_DB_IMPL_H_ | |||

| // Copyright (c) 2011 The LevelDB Authors. All rights reserved. | |||

| // Use of this source code is governed by a BSD-style license that can be | |||

| // found in the LICENSE file. See the AUTHORS file for names of contributors. | |||

| #ifndef STORAGE_LEVELDB_DB_DB_IMPL_H_ | |||

| #define STORAGE_LEVELDB_DB_DB_IMPL_H_ | |||

| #include <atomic> | |||

| #include <deque> | |||

| #include <set> | |||

| #include <string> | |||

| #include "db/dbformat.h" | |||

| #include "db/log_writer.h" | |||

| #include "db/snapshot.h" | |||

| #include "leveldb/db.h" | |||

| #include "leveldb/env.h" | |||

| #include "port/port.h" | |||

| #include "port/thread_annotations.h" | |||

| namespace leveldb { | |||

| class MemTable; | |||

| class TableCache; | |||

| class Version; | |||

| class VersionEdit; | |||

| class VersionSet; | |||

| class DBImpl : public DB { | |||

| public: | |||

| DBImpl(const Options& options, const std::string& dbname); | |||

| DBImpl(const DBImpl&) = delete; | |||

| DBImpl& operator=(const DBImpl&) = delete; | |||

| ~DBImpl() override; | |||

| // Implementations of the DB interface | |||

| Status Put(const WriteOptions&, const Slice& key, | |||

| const Slice& value) override; | |||

| Status Put(const WriteOptions&, const Slice& key, | |||

| const Slice& value, uint64_t ttl) override; //实现新的put接口,心 | |||

| Status Delete(const WriteOptions&, const Slice& key) override; | |||

| Status Write(const WriteOptions& options, WriteBatch* updates) override; | |||

| Status Get(const ReadOptions& options, const Slice& key, | |||

| std::string* value) override; | |||

| Iterator* NewIterator(const ReadOptions&) override; | |||

| const Snapshot* GetSnapshot() override; | |||

| void ReleaseSnapshot(const Snapshot* snapshot) override; | |||

| bool GetProperty(const Slice& property, std::string* value) override; | |||

| void GetApproximateSizes(const Range* range, int n, uint64_t* sizes) override; | |||

| void CompactRange(const Slice* begin, const Slice* end) override; | |||

| // 朴,添加是否kv分离接口,12.07 | |||

| bool static key_value_separated_; | |||

| // Extra methods (for testing) that are not in the public DB interface | |||

| // Compact any files in the named level that overlap [*begin,*end] | |||

| void TEST_CompactRange(int level, const Slice* begin, const Slice* end); | |||

| // Force current memtable contents to be compacted. | |||

| Status TEST_CompactMemTable(); | |||

| // Return an internal iterator over the current state of the database. | |||

| // The keys of this iterator are internal keys (see format.h). | |||

| // The returned iterator should be deleted when no longer needed. | |||

| Iterator* TEST_NewInternalIterator(); | |||

| // Return the maximum overlapping data (in bytes) at next level for any | |||

| // file at a level >= 1. | |||

| int64_t TEST_MaxNextLevelOverlappingBytes(); | |||

| // Record a sample of bytes read at the specified internal key. | |||

| // Samples are taken approximately once every config::kReadBytesPeriod | |||

| // bytes. | |||

| void RecordReadSample(Slice key); | |||

| private: | |||

| friend class DB; | |||

| struct CompactionState; | |||

| struct Writer; | |||

| // Information for a manual compaction | |||

| struct ManualCompaction { | |||

| int level; | |||

| bool done; | |||

| const InternalKey* begin; // null means beginning of key range | |||

| const InternalKey* end; // null means end of key range | |||

| InternalKey tmp_storage; // Used to keep track of compaction progress | |||

| }; | |||

| // Per level compaction stats. stats_[level] stores the stats for | |||

| // compactions that produced data for the specified "level". | |||

| struct CompactionStats { | |||

| CompactionStats() : micros(0), bytes_read(0), bytes_written(0) {} | |||

| void Add(const CompactionStats& c) { | |||

| this->micros += c.micros; | |||

| this->bytes_read += c.bytes_read; | |||

| this->bytes_written += c.bytes_written; | |||

| } | |||

| int64_t micros; | |||

| int64_t bytes_read; | |||

| int64_t bytes_written; | |||

| }; | |||

| Iterator* NewInternalIterator(const ReadOptions&, | |||

| SequenceNumber* latest_snapshot, | |||

| uint32_t* seed); | |||

| Status NewDB(); | |||

| // Recover the descriptor from persistent storage. May do a significant | |||

| // amount of work to recover recently logged updates. Any changes to | |||

| // be made to the descriptor are added to *edit. | |||

| Status Recover(VersionEdit* edit, bool* save_manifest) | |||

| EXCLUSIVE_LOCKS_REQUIRED(mutex_); | |||

| void MaybeIgnoreError(Status* s) const; | |||

| // Delete any unneeded files and stale in-memory entries. | |||

| void RemoveObsoleteFiles() EXCLUSIVE_LOCKS_REQUIRED(mutex_); | |||

| // Compact the in-memory write buffer to disk. Switches to a new | |||

| // log-file/memtable and writes a new descriptor iff successful. | |||

| // Errors are recorded in bg_error_. | |||

| void CompactMemTable() EXCLUSIVE_LOCKS_REQUIRED(mutex_); | |||

| Status RecoverLogFile(uint64_t log_number, bool last_log, bool* save_manifest, | |||

| VersionEdit* edit, SequenceNumber* max_sequence) | |||

| EXCLUSIVE_LOCKS_REQUIRED(mutex_); | |||

| Status WriteLevel0Table(MemTable* mem, VersionEdit* edit, Version* base) | |||

| EXCLUSIVE_LOCKS_REQUIRED(mutex_); | |||

| Status MakeRoomForWrite(bool force /* compact even if there is room? */) | |||

| EXCLUSIVE_LOCKS_REQUIRED(mutex_); | |||

| WriteBatch* BuildBatchGroup(Writer** last_writer) | |||

| EXCLUSIVE_LOCKS_REQUIRED(mutex_); | |||

| void RecordBackgroundError(const Status& s); | |||

| void MaybeScheduleCompaction() EXCLUSIVE_LOCKS_REQUIRED(mutex_); | |||

| static void BGWork(void* db); | |||

| void BackgroundCall(); | |||

| void BackgroundCompaction() EXCLUSIVE_LOCKS_REQUIRED(mutex_); | |||

| void CleanupCompaction(CompactionState* compact) | |||

| EXCLUSIVE_LOCKS_REQUIRED(mutex_); | |||

| Status DoCompactionWork(CompactionState* compact) | |||

| EXCLUSIVE_LOCKS_REQUIRED(mutex_); | |||

| Status OpenCompactionOutputFile(CompactionState* compact); | |||

| Status FinishCompactionOutputFile(CompactionState* compact, Iterator* input); | |||

| Status InstallCompactionResults(CompactionState* compact) | |||

| EXCLUSIVE_LOCKS_REQUIRED(mutex_); | |||

| const Comparator* user_comparator() const { | |||

| return internal_comparator_.user_comparator(); | |||

| } | |||

| // Constant after construction | |||

| Env* const env_; | |||

| const InternalKeyComparator internal_comparator_; | |||

| const InternalFilterPolicy internal_filter_policy_; | |||

| const Options options_; // options_.comparator == &internal_comparator_ | |||

| const bool owns_info_log_; | |||

| const bool owns_cache_; | |||

| const std::string dbname_; | |||

| // table_cache_ provides its own synchronization | |||

| TableCache* const table_cache_; | |||

| // Lock over the persistent DB state. Non-null iff successfully acquired. | |||

| FileLock* db_lock_; | |||

| // State below is protected by mutex_ | |||

| port::Mutex mutex_; | |||

| std::atomic<bool> shutting_down_; | |||

| port::CondVar background_work_finished_signal_ GUARDED_BY(mutex_); | |||

| MemTable* mem_; | |||

| MemTable* imm_ GUARDED_BY(mutex_); // Memtable being compacted | |||

| std::atomic<bool> has_imm_; // So bg thread can detect non-null imm_ | |||

| WritableFile* logfile_; | |||

| uint64_t logfile_number_ GUARDED_BY(mutex_); | |||

| log::Writer* log_; | |||

| uint32_t seed_ GUARDED_BY(mutex_); // For sampling. | |||

| // Queue of writers. | |||

| std::deque<Writer*> writers_ GUARDED_BY(mutex_); | |||

| WriteBatch* tmp_batch_ GUARDED_BY(mutex_); | |||

| SnapshotList snapshots_ GUARDED_BY(mutex_); | |||

| // Set of table files to protect from deletion because they are | |||

| // part of ongoing compactions. | |||

| std::set<uint64_t> pending_outputs_ GUARDED_BY(mutex_); | |||

| // Has a background compaction been scheduled or is running? | |||

| bool background_compaction_scheduled_ GUARDED_BY(mutex_); | |||

| ManualCompaction* manual_compaction_ GUARDED_BY(mutex_); | |||

| VersionSet* const versions_ GUARDED_BY(mutex_); | |||

| // Have we encountered a background error in paranoid mode? | |||

| Status bg_error_ GUARDED_BY(mutex_); | |||

| CompactionStats stats_[config::kNumLevels] GUARDED_BY(mutex_); | |||

| }; | |||

| // Sanitize db options. The caller should delete result.info_log if | |||

| // it is not equal to src.info_log. | |||

| Options SanitizeOptions(const std::string& db, | |||

| const InternalKeyComparator* icmp, | |||

| const InternalFilterPolicy* ipolicy, | |||

| const Options& src); | |||

| } // namespace leveldb | |||

| #endif // STORAGE_LEVELDB_DB_DB_IMPL_H_ | |||

+ 4

- 24

db/db_test.cc

View File

+ 2

- 11

db/filename.cc

View File

+ 1

- 7

db/filename.h

View File

+ 0

- 4

db/log_test.cc

View File

+ 4

- 6

db/repair.cc

View File

+ 0

- 20

db/vlog_converter.cc

View File

| @ -1,20 +0,0 @@ | |||

| #include "util/coding.h" | |||

| #include "db/vlog_converter.h" | |||

| namespace leveldb{ | |||

| namespace vlog{ | |||

| // 当需要将键值对插入数据库时,将值的存储位置 (file_no 和 file_offset) 编码为 Vlog Pointer,并与键关联存储。 | |||

| // 紧凑的编码格式便于减少存储开销。 | |||

| Slice VlogConverter::GetVptr(uint64_t file_no, uint64_t file_offset, char* buf){ | |||

| char* vfileno_end = EncodeVarint64(buf, file_no); | |||

| char* vfileoff_end = EncodeVarint64(vfileno_end, file_offset); | |||

| return Slice(buf, vfileoff_end - buf); | |||

| } | |||

| Status VlogConverter::DecodeVptr(uint64_t* file_no, uint64_t* file_offset, Slice* vptr){ | |||

| bool decoded_status = true; | |||

| decoded_status &= GetVarint64(vptr, file_no); | |||

| decoded_status &= GetVarint64(vptr, file_offset); | |||

| if(!decoded_status) return Status::Corruption("Can not Decode vptr from Read Bytes."); | |||

| else return Status::OK(); | |||

| } | |||

| }// namespace vlog | |||

| } | |||

+ 0

- 19

db/vlog_converter.h

View File

| @ -1,19 +0,0 @@ | |||

| #ifndef STORAGE_LEVELDB_DB_VLOG_CONVERTER_H_ | |||

| #define STORAGE_LEVELDB_DB_VLOG_CONVERTER_H_ | |||

| #include <cstdint> | |||

| #include "leveldb/slice.h" | |||

| #include "leveldb/status.h" | |||

| namespace leveldb{ | |||

| namespace vlog{ | |||

| class VlogConverter{ | |||

| public: | |||

| VlogConverter() = default; | |||

| ~VlogConverter() = default; | |||

| Slice GetVptr(uint64_t file_no, uint64_t file_offset, char* buf); | |||

| Status DecodeVptr(uint64_t* file_no, uint64_t* file_offset, Slice* vptr); | |||

| }; | |||

| }// namespace vlog | |||

| } | |||

| #endif | |||

+ 0

- 33

db/vlog_manager.cc

View File

| @ -1,33 +0,0 @@ | |||

| #include "db/vlog_manager.h" | |||

| namespace leveldb{ | |||

| namespace vlog{ | |||

| void VlogManager::AddVlogFile(uint64_t vlogfile_number, SequentialFile* seq_file, WritableFile* write_file){ | |||

| if(vlog_table_.find(vlogfile_number) == vlog_table_.end()){ | |||

| vlog_table_[vlogfile_number] = seq_file; | |||

| writable_to_sequential_[write_file] = seq_file; | |||

| } | |||

| else{ | |||

| //Do Nothing | |||

| } | |||

| } | |||

| SequentialFile* VlogManager::GetVlogFile(uint64_t vlogfile_number){ | |||

| auto it = vlog_table_.find(vlogfile_number); | |||

| if(it != vlog_table_.end()){ | |||

| return it->second; | |||

| } | |||

| else return nullptr; | |||

| } | |||

| bool VlogManager::IsEmpty(){ | |||

| return vlog_table_.size() == 0; | |||

| } | |||

| // 标记一个vlog文件有一个新的无效的value,pzy | |||

| void VlogManager::MarkVlogValueInvalid(uint64_t vlogfile_number, uint64_t offset) { | |||

| auto vlog_file = GetVlogFile(vlogfile_number); | |||

| if (vlog_file) { | |||

| vlog_file->MarkValueInvalid(offset); // 调用具体文件的标记逻辑 | |||

| } | |||

| } | |||

| }// namespace vlog | |||

| } | |||

+ 0

- 61

db/vlog_manager.h

View File

| @ -1,61 +0,0 @@ | |||

| #ifndef STORAGE_LEVELDB_DB_VLOG_MANAGER_H_ | |||

| #define STORAGE_LEVELDB_DB_VLOG_MANAGER_H_ | |||

| #include <unordered_map> | |||

| #include <cstdint> | |||

| #include "leveldb/env.h" | |||

| #include "db/filename.h" | |||

| #include "leveldb/options.h" | |||

| namespace leveldb{ | |||

| class SequentialFile; | |||

| namespace vlog{ | |||

| class VlogManager{ | |||

| public: | |||

| VlogManager() = default; | |||

| ~VlogManager() = default; | |||

| //Add a vlog file, vlog file is already exist. | |||

| void AddVlogFile(uint64_t vlogfile_number, SequentialFile* seq_file, WritableFile* write_file); | |||

| SequentialFile* GetVlogFile(uint64_t vlogfile_number); | |||

| bool IsEmpty(); | |||

| void MarkVlogValueInvalid(uint64_t vlogfile_number, uint64_t offset); | |||

| SequentialFile* GetSequentialFile(WritableFile* write_file) { | |||

| auto it = writable_to_sequential_.find(write_file); | |||

| return it != writable_to_sequential_.end() ? it->second : nullptr; | |||

| } | |||

| void IncrementTotalValueCount(WritableFile* write_file) { | |||

| auto seq_file = GetSequentialFile(write_file); | |||

| if (seq_file) { | |||

| seq_file->IncrementTotalValueCount(); // 假设 SequentialFile 提供该方法 | |||

| } | |||

| } | |||

| void CleanupInvalidVlogFiles(const Options& options, const std::string& dbname) { | |||

| for (const auto& vlog_pair : vlog_table_) { | |||

| uint64_t vlogfile_number = vlog_pair.first; | |||

| auto vlog_file = vlog_pair.second; | |||

| if (vlog_file->AllValuesInvalid()) { // 检查文件内所有值是否无效 | |||

| RemoveVlogFile(vlogfile_number, options, dbname); // 删除 VLog 文件 | |||

| } | |||

| } | |||

| } | |||

| void RemoveVlogFile(uint64_t vlogfile_number, const Options& options, const std::string& dbname) { // 移除无效的vlogfile文件 | |||

| auto it = vlog_table_.find(vlogfile_number); | |||

| if (it != vlog_table_.end()) { | |||

| delete it->second; // 删除对应的 SequentialFile | |||

| vlog_table_.erase(it); // 从管理器中移除 | |||

| options.env->DeleteFile(VlogFileName(dbname, vlogfile_number)); // 删除实际文件 | |||

| } | |||

| } | |||

| private: | |||

| std::unordered_map<uint64_t, SequentialFile*> vlog_table_; // 用映射组织vlog文件号和文件的关系 | |||

| std::unordered_map<WritableFile*, SequentialFile*> writable_to_sequential_; | |||

| }; | |||

| }// namespace vlog | |||

| } | |||

| #endif | |||

+ 0

- 58

db/vlog_reader.cc

View File

| @ -1,58 +0,0 @@ | |||

| #include <cstdint> | |||

| #include "db/vlog_reader.h" | |||

| #include "leveldb/slice.h" | |||

| #include "leveldb/env.h" | |||

| #include "util/coding.h" | |||

| namespace leveldb{ | |||

| namespace vlog{ | |||

| VReader::VReader(SequentialFile* file) // A file abstraction for reading sequentially through a file | |||

| :file_(file){} | |||

| Status VReader::ReadRecord(uint64_t vfile_offset, std::string* record){ | |||

| Status s; | |||

| Slice size_slice; | |||

| char size_buf[11]; | |||

| uint64_t rec_size = 0; | |||

| s = file_->SkipFromHead(vfile_offset); // 将文件的读取位置移动到 vfile_offset | |||

| if(s.ok()) s = file_ -> Read(10, &size_slice, size_buf); // 先把Record 长度读出来, 最长10字节. | |||

| if(s.ok()){ | |||

| if(GetVarint64(&size_slice, &rec_size) == false){ // 解析变长整数,得到记录的长度 rec_size | |||

| return Status::Corruption("Failed to decode vlog record size."); | |||

| } | |||

| std::string rec; | |||

| char* c_rec = new char[rec_size]; // 为记录分配一个临时缓冲区 | |||

| //TODO: Should delete c_rec? | |||

| rec.resize(rec_size); | |||

| Slice rec_slice; | |||

| s = file_->SkipFromHead(vfile_offset + (size_slice.data() - size_buf)); // 将文件的读取位置移动 | |||

| if(!s.ok()) return s; | |||

| s = file_-> Read(rec_size, &rec_slice, c_rec); // 从文件中读取 rec_size 字节的数据到 c_rec 中,并用 rec_slice 包装这些数据 | |||

| if(!s.ok()) return s; | |||

| rec = std::string(c_rec, rec_size); | |||

| *record = std::move(std::string(rec)); | |||

| } | |||

| return s; | |||

| } | |||

| Status VReader::ReadKV(uint64_t vfile_offset, std::string* key, std::string* val){ | |||

| std::string record_str; | |||

| Status s = ReadRecord(vfile_offset, &record_str); | |||

| if(s.ok()){ | |||

| Slice record = Slice(record_str); | |||

| //File the val | |||

| uint64_t key_size; | |||

| bool decode_flag = true; | |||

| decode_flag &= GetVarint64(&record, &key_size); // 获取键的长度 | |||

| if(decode_flag){ | |||

| *key = Slice(record.data(), key_size).ToString(); // 从record中截取键值 | |||

| record = Slice(record.data() + key_size, record.size() - key_size); // 截取剩余的record | |||

| } | |||

| uint64_t val_size; | |||

| decode_flag &= GetVarint64(&record, &val_size); // 获取value的长度 | |||

| if(decode_flag) *val = Slice(record.data(), val_size).ToString(); // 截取value的值 | |||

| if(!decode_flag || val->size() != record.size()){ | |||

| s = Status::Corruption("Failed to decode Record Read From vlog."); | |||

| } | |||

| } | |||

| return s; | |||

| } | |||

| }// namespace vlog. | |||

| } | |||

+ 0

- 25

db/vlog_reader.h

View File

| @ -1,25 +0,0 @@ | |||

| #ifndef STORAGE_LEVELDB_DB_VLOG_READER_H_ | |||

| #define STORAGE_LEVELDB_DB_VLOG_READER_H_ | |||

| #include <cstdint> | |||

| #include "leveldb/slice.h" | |||

| #include "leveldb/status.h" | |||

| #include "port/port.h" | |||

| namespace leveldb { | |||

| class SequentialFile; | |||

| namespace vlog { | |||

| class VReader { | |||

| public: | |||

| explicit VReader(SequentialFile* file); | |||

| ~VReader() = default; | |||

| Status ReadRecord(uint64_t vfile_offset, std::string* record); | |||

| Status ReadKV(uint64_t vfile_offset, std::string* key ,std::string* val); | |||

| private: | |||

| SequentialFile* file_; | |||

| }; | |||

| } // namespace vlog | |||

| } | |||

| #endif | |||

+ 0

- 26

db/vlog_writer.cc

View File

| @ -1,26 +0,0 @@ | |||

| #include <cstdint> | |||

| #include "db/vlog_writer.h" | |||

| #include "leveldb/slice.h" | |||

| #include "leveldb/env.h" | |||

| #include "util/coding.h" | |||

| namespace leveldb{ | |||

| namespace vlog{ | |||

| VWriter::VWriter(WritableFile* vlogfile) | |||

| :vlogfile_(vlogfile){} | |||

| VWriter::~VWriter() = default; | |||

| Status VWriter::AddRecord(const Slice& slice, int& write_size){ | |||

| //append slice length. | |||

| write_size = slice.size(); | |||

| char buf[10]; // Used for Convert int64 to char. | |||

| char* end_byte = EncodeVarint64(buf, slice.size()); | |||

| write_size += end_byte - buf; | |||

| Status s = vlogfile_->Append(Slice(buf, end_byte - buf)); | |||

| //append slice | |||

| if(s.ok()) s = vlogfile_->Append(slice); | |||

| return s; | |||

| } | |||

| Status VWriter::Flush(){ | |||

| return vlogfile_->Flush(); | |||

| } | |||

| }// namespace vlog | |||

| } | |||

+ 0

- 26

db/vlog_writer.h

View File

| @ -1,26 +0,0 @@ | |||

| #ifndef STORAGE_LEVELDB_DB_VLOG_WRITER_H_ | |||

| #define STORAGE_LEVELDB_DB_VLOG_WRITER_H_ | |||

| #include <cstdint> | |||

| #include "leveldb/slice.h" | |||

| #include "leveldb/status.h" | |||

| // format: [size, key, vptr, value]. | |||

| namespace leveldb{ | |||

| class WritableFile; | |||

| namespace vlog{ | |||

| class VWriter{ | |||

| public: | |||

| explicit VWriter(WritableFile* vlogfile); | |||

| ~VWriter(); | |||

| Status AddRecord(const Slice& slice, int& write_size); | |||

| VWriter(const VWriter&) = delete; | |||

| VWriter& operator=(const VWriter&) = delete; | |||

| Status Flush(); | |||

| private: | |||

| WritableFile* vlogfile_; | |||

| }; | |||

| }// namespace vlog | |||

| } | |||

| #endif | |||

+ 209

- 168

db/write_batch.cc

View File

| @ -1,168 +1,209 @@ | |||

| // Copyright (c) 2011 The LevelDB Authors. All rights reserved. | |||

| // Use of this source code is governed by a BSD-style license that can be | |||

| // found in the LICENSE file. See the AUTHORS file for names of contributors. | |||

| // | |||

| // WriteBatch::rep_ := | |||

| // sequence: fixed64 | |||

| // count: fixed32 | |||

| // data: record[count] | |||

| // record := | |||

| // kTypeValue varstring varstring | | |||

| // kTypeDeletion varstring | |||

| // varstring := | |||

| // len: varint32 | |||

| // data: uint8[len] | |||

| #include "leveldb/write_batch.h" | |||

| #include "db/dbformat.h" | |||

| #include "db/memtable.h" | |||

| #include "db/write_batch_internal.h" | |||

| #include "leveldb/db.h" | |||

| #include "util/coding.h" | |||

| namespace leveldb { | |||

| // WriteBatch header has an 8-byte sequence number followed by a 4-byte count. | |||

| static const size_t kHeader = 12; | |||

| WriteBatch::WriteBatch() { Clear(); } | |||

| WriteBatch::~WriteBatch() = default; | |||

| WriteBatch::Handler::~Handler() = default; | |||

| void WriteBatch::Clear() { | |||

| rep_.clear(); | |||

| rep_.resize(kHeader); | |||

| } | |||

| size_t WriteBatch::ApproximateSize() const { return rep_.size(); } | |||

| Status WriteBatch::Iterate(Handler* handler) const { | |||

| Slice input(rep_); | |||

| if (input.size() < kHeader) { | |||

| return Status::Corruption("malformed WriteBatch (too small)"); | |||

| } | |||

| //rep_ Header 12字节, 包含8字节sequence和4字节count. | |||

| input.remove_prefix(kHeader); | |||

| Slice key, value; | |||

| int found = 0; | |||

| while (!input.empty()) { | |||

| found++; | |||

| char tag = input[0]; | |||

| input.remove_prefix(1); | |||

| switch (tag) { | |||

| case kTypeValue: | |||

| if (GetLengthPrefixedSlice(&input, &key) && | |||

| GetLengthPrefixedSlice(&input, &value)) { | |||

| handler->Put(key, value); | |||

| } else { | |||

| return Status::Corruption("bad WriteBatch Put"); | |||

| } | |||

| break; | |||

| case kTypeDeletion: | |||

| if (GetLengthPrefixedSlice(&input, &key)) { | |||

| handler->Delete(key); | |||

| } else { | |||

| return Status::Corruption("bad WriteBatch Delete"); | |||

| } | |||

| break; | |||

| default: | |||

| return Status::Corruption("unknown WriteBatch tag"); | |||

| } | |||

| } | |||

| //合法性判断 | |||

| if (found != WriteBatchInternal::Count(this)) { | |||

| return Status::Corruption("WriteBatch has wrong count"); | |||

| } else { | |||

| return Status::OK(); | |||

| } | |||

| } | |||

| int WriteBatchInternal::Count(const WriteBatch* b) { | |||

| return DecodeFixed32(b->rep_.data() + 8); | |||

| } | |||

| void WriteBatchInternal::SetCount(WriteBatch* b, int n) { | |||

| EncodeFixed32(&b->rep_[8], n); | |||

| } | |||

| SequenceNumber WriteBatchInternal::Sequence(const WriteBatch* b) { | |||

| return SequenceNumber(DecodeFixed64(b->rep_.data())); | |||

| } | |||

| void WriteBatchInternal::SetSequence(WriteBatch* b, SequenceNumber seq) { | |||

| EncodeFixed64(&b->rep_[0], seq); | |||

| } | |||

| void WriteBatch::Put(const Slice& key, const Slice& value) { | |||

| WriteBatchInternal::SetCount(this, WriteBatchInternal::Count(this) + 1); | |||

| rep_.push_back(static_cast<char>(kTypeValue)); | |||

| PutLengthPrefixedSlice(&rep_, key); | |||

| PutLengthPrefixedSlice(&rep_, value); | |||

| } | |||

| void WriteBatch::Delete(const Slice& key) { | |||

| WriteBatchInternal::SetCount(this, WriteBatchInternal::Count(this) + 1); | |||

| rep_.push_back(static_cast<char>(kTypeDeletion)); | |||

| PutLengthPrefixedSlice(&rep_, key); | |||

| } | |||

| void WriteBatch::Append(const WriteBatch& source) { | |||

| WriteBatchInternal::Append(this, &source); | |||

| } | |||

| namespace { | |||

| class MemTableInserter : public WriteBatch::Handler { | |||

| public: | |||

| SequenceNumber sequence_; | |||

| MemTable* mem_; | |||

| void Put(const Slice& key, const Slice& value) override { | |||

| mem_->Add(sequence_, kTypeValue, key, value); | |||

| sequence_++; | |||

| } | |||

| void Delete(const Slice& key) override { | |||

| mem_->Add(sequence_, kTypeDeletion, key, Slice()); | |||

| sequence_++; | |||

| } | |||

| }; | |||

| //Use For KVSeqMem | |||

| class MemTableInserterKVSeq : public WriteBatch::Handler{ | |||

| public: | |||

| SequenceNumber sequence_; | |||

| MemTable* mem_; | |||

| void Put(const Slice& key, const Slice& value) override { | |||

| assert(0 && "TODO"); | |||

| mem_->Add(sequence_, kTypeValue, key, value); | |||

| sequence_++; | |||

| } | |||

| void Delete(const Slice& key) override { | |||

| mem_->Add(sequence_, kTypeDeletion, key, Slice()); | |||

| sequence_++; | |||

| } | |||

| }; | |||

| } // namespace | |||

| Status WriteBatchInternal::InsertInto(const WriteBatch* b, MemTable* memtable) { | |||

| MemTableInserter inserter; | |||

| inserter.sequence_ = WriteBatchInternal::Sequence(b); | |||

| inserter.mem_ = memtable; | |||

| return b->Iterate(&inserter); | |||

| } | |||

| void WriteBatchInternal::SetContents(WriteBatch* b, const Slice& contents) { | |||

| assert(contents.size() >= kHeader); | |||

| b->rep_.assign(contents.data(), contents.size()); | |||

| } | |||

| void WriteBatchInternal::Append(WriteBatch* dst, const WriteBatch* src) { | |||

| SetCount(dst, Count(dst) + Count(src)); | |||

| assert(src->rep_.size() >= kHeader); | |||

| dst->rep_.append(src->rep_.data() + kHeader, src->rep_.size() - kHeader); | |||

| } | |||

| } // namespace leveldb | |||

| // Copyright (c) 2011 The LevelDB Authors. All rights reserved. | |||

| // Use of this source code is governed by a BSD-style license that can be | |||

| // found in the LICENSE file. See the AUTHORS file for names of contributors. | |||

| // | |||

| // WriteBatch::rep_ := | |||

| // sequence: fixed64 | |||

| // count: fixed32 | |||

| // data: record[count] | |||

| // record := | |||

| // kTypeValue varstring varstring | | |||

| // kTypeDeletion varstring | |||

| // varstring := | |||

| // len: varint32 | |||

| // data: uint8[len] | |||

| #include "leveldb/write_batch.h" | |||

| #include "db/dbformat.h" | |||

| #include "db/memtable.h" | |||

| #include "db/write_batch_internal.h" | |||

| #include "leveldb/db.h" | |||

| #include "db/db_impl.h" //朴 | |||

| #include "util/coding.h" | |||

| #include <sstream> // For std::ostringstream 心 | |||

| #include <cstdint> | |||

| #include <string> | |||

| namespace leveldb { | |||

| // WriteBatch header has an 8-byte sequence number followed by a 4-byte count. | |||

| static const size_t kHeader = 12; | |||

| WriteBatch::WriteBatch() { Clear(); } | |||

| WriteBatch::~WriteBatch() = default; | |||

| WriteBatch::Handler::~Handler() = default; | |||

| void WriteBatch::Clear() { | |||

| rep_.clear(); | |||

| rep_.resize(kHeader); | |||

| } | |||

| size_t WriteBatch::ApproximateSize() const { return rep_.size(); } | |||

| Status WriteBatch::Iterate(Handler* handler) const { | |||

| Slice input(rep_); | |||

| if (input.size() < kHeader) { | |||

| return Status::Corruption("malformed WriteBatch (too small)"); | |||

| } | |||

| input.remove_prefix(kHeader); | |||

| Slice key, value; | |||

| int found = 0; | |||

| while (!input.empty()) { | |||

| found++; | |||

| char tag = input[0]; | |||

| input.remove_prefix(1); | |||

| switch (tag) { | |||

| case kTypeValue: | |||

| if (GetLengthPrefixedSlice(&input, &key) && | |||

| GetLengthPrefixedSlice(&input, &value)) { | |||

| handler->Put(key, value); | |||

| } else { | |||

| return Status::Corruption("bad WriteBatch Put"); | |||

| } | |||

| break; | |||

| case kTypeDeletion: | |||

| if (GetLengthPrefixedSlice(&input, &key)) { | |||

| handler->Delete(key); | |||

| } else { | |||

| return Status::Corruption("bad WriteBatch Delete"); | |||

| } | |||

| break; | |||

| default: | |||

| return Status::Corruption("unknown WriteBatch tag"); | |||

| } | |||

| } | |||

| if (found != WriteBatchInternal::Count(this)) { | |||

| return Status::Corruption("WriteBatch has wrong count"); | |||

| } else { | |||

| return Status::OK(); | |||

| } | |||

| } | |||

| int WriteBatchInternal::Count(const WriteBatch* b) { | |||

| return DecodeFixed32(b->rep_.data() + 8); | |||

| } | |||

| void WriteBatchInternal::SetCount(WriteBatch* b, int n) { | |||

| EncodeFixed32(&b->rep_[8], n); | |||

| } | |||

| SequenceNumber WriteBatchInternal::Sequence(const WriteBatch* b) { | |||

| return SequenceNumber(DecodeFixed64(b->rep_.data())); | |||

| } | |||

| void WriteBatchInternal::SetSequence(WriteBatch* b, SequenceNumber seq) { | |||

| EncodeFixed64(&b->rep_[0], seq); | |||

| } | |||

| void WriteBatch::Put(const Slice& key, const Slice& value) { | |||

| WriteBatchInternal::SetCount(this, WriteBatchInternal::Count(this) + 1); | |||

| rep_.push_back(static_cast<char>(kTypeValue)); | |||

| PutLengthPrefixedSlice(&rep_, key); | |||

| PutLengthPrefixedSlice(&rep_, value); | |||

| } | |||

| // void WriteBatch::Put(const Slice& key, const Slice& value) { // 朴,kv分离,12.07 | |||

| // if (DBImpl::key_value_separated_) { | |||

| // // 分离key和value的逻辑 | |||

| // // 例如,你可以将key和value分别存储在不同的容器中 | |||

| // // 这里需要根据你的具体需求来实现 | |||

| // //... | |||

| // if (value.size() > max_value_size_) { | |||

| // // 分离key和value的逻辑 | |||

| // // 将value存进新的数据结构blobfile | |||

| // //... | |||

| // // 例如,你可以使用以下代码将value写入blobfile | |||

| // std::ofstream blobfile("blobfile.dat", std::ios::binary | std::ios::app); | |||

| // blobfile.write(value.data(), value.size()); | |||

| // blobfile.close(); | |||

| // } | |||

| // } | |||

| // else { | |||

| // // 不分离key和value的逻辑 | |||

| // WriteBatchInternal::SetCount(this, WriteBatchInternal::Count(this) + 1); | |||

| // rep_.push_back(static_cast<char>(kTypeValue)); | |||

| // PutLengthPrefixedSlice(&rep_, key); | |||

| // PutLengthPrefixedSlice(&rep_, value); | |||

| // } | |||

| // } | |||

| void WriteBatch::Put(const Slice& key, const Slice& value, uint64_t ttl) { | |||

| WriteBatchInternal::SetCount(this, WriteBatchInternal::Count(this) + 1); | |||

| rep_.push_back(static_cast<char>(kTypeValue)); | |||

| PutLengthPrefixedSlice(&rep_, key); | |||

| // 获取当前时间 | |||

| auto now = std::chrono::system_clock::now(); | |||

| // 加上ttl | |||

| auto future_time = now + std::chrono::seconds(ttl); | |||

| // 转换为 time_t | |||

| std::time_t future_time_t = std::chrono::system_clock::to_time_t(future_time); | |||

| // 将 time_t 转换为 tm 结构 | |||

| std::tm* local_tm = std::localtime(&future_time_t); | |||

| // 格式化为字符串 | |||

| char buffer[20]; // 格式化字符串的缓冲区 | |||

| std::strftime(buffer, sizeof(buffer), "%Y-%m-%d %H:%M:%S", local_tm); | |||

| std::string future_time_str(buffer); | |||

| // 拼接原本的值和时间字符串 | |||

| std::string combined_str = value.ToString() + future_time_str; | |||

| PutLengthPrefixedSlice(&rep_, Slice(combined_str)); | |||

| } // 心 | |||

| void WriteBatch::Delete(const Slice& key) { | |||

| WriteBatchInternal::SetCount(this, WriteBatchInternal::Count(this) + 1); | |||

| rep_.push_back(static_cast<char>(kTypeDeletion)); | |||

| PutLengthPrefixedSlice(&rep_, key); | |||

| } | |||

| void WriteBatch::Append(const WriteBatch& source) { | |||

| WriteBatchInternal::Append(this, &source); | |||

| } | |||

| namespace { | |||

| class MemTableInserter : public WriteBatch::Handler { | |||

| public: | |||

| SequenceNumber sequence_; | |||

| MemTable* mem_; | |||

| void Put(const Slice& key, const Slice& value) override { | |||

| mem_->Add(sequence_, kTypeValue, key, value); | |||

| sequence_++; | |||

| } | |||

| void Delete(const Slice& key) override { | |||

| mem_->Add(sequence_, kTypeDeletion, key, Slice()); | |||

| sequence_++; | |||

| } | |||

| }; | |||

| } // namespace | |||

| Status WriteBatchInternal::InsertInto(const WriteBatch* b, MemTable* memtable) { | |||

| MemTableInserter inserter; | |||

| inserter.sequence_ = WriteBatchInternal::Sequence(b); | |||

| inserter.mem_ = memtable; | |||

| return b->Iterate(&inserter); | |||

| } | |||

| void WriteBatchInternal::SetContents(WriteBatch* b, const Slice& contents) { | |||

| assert(contents.size() >= kHeader); | |||

| b->rep_.assign(contents.data(), contents.size()); | |||

| } | |||

| void WriteBatchInternal::Append(WriteBatch* dst, const WriteBatch* src) { | |||

| SetCount(dst, Count(dst) + Count(src)); | |||

| assert(src->rep_.size() >= kHeader); | |||

| dst->rep_.append(src->rep_.data() + kHeader, src->rep_.size() - kHeader); | |||

| } | |||

| } // namespace leveldb | |||

+ 1

- 1

db/write_batch_internal.h

View File

+ 0

- 45

draw.py

View File

| @ -1,45 +0,0 @@ | |||

| import matplotlib.pyplot as plt | |||

| x = [128, 256, 512, 1024, 2048, 3072, 4096] | |||

| y_w = [ | |||

| [52.8, 67.0, 60.8, 52.3, 42.2, 34.2, 30.2],# noKVSep | |||

| [44.2, 87.5, 139.5, 274.2 ,426.3, 576.2, 770.4], # kvSepBeforeMem | |||

| [59.9, 102.4 ,147.9 ,173.5, 184.2, 199.2, 206.8] #kvSepBeforeSSD | |||

| ] | |||

| y_r = [ | |||

| [731.9, 1127.4, 1515.2, 3274.7, 4261.9, 4886.3, 4529.8],# noKVSep | |||

| [158.9, 154.9, 145.0, 160.9 , 147.3, 144.0, 127.4], # kvSepBeforeMem | |||

| [171.1, 136.0 ,179.8 ,169.8, 159.9, 161.5, 168.6] #kvSepBeforeSSD | |||

| ] | |||

| y_random = [ | |||

| [2.363, 2.698, 3.972, 3.735, 7.428, 12.137, 17.753],# noKVSep | |||

| [2.957, 2.953, 3.417, 3.363 ,3.954, 17.516, 79.023], # kvSepBeforeMem | |||

| [2.927, 2.739 ,2.947, 3.604, 3.530, 19.189, 80.608] #kvSepBeforeSSD | |||

| ] | |||

| plt.figure(num = 1) | |||

| plt.title("Write Performance(fillrandom)") | |||

| plt.xlabel("Value size(B)") | |||

| plt.ylabel("Throughout(MiB/s)") | |||

| l1 = plt.plot(x, y_w[0], "bo", linestyle = "dashed") | |||

| l1 = plt.plot(x, y_w[1], "g^", linestyle = "dashed") | |||

| l1 = plt.plot(x, y_w[2], "y+", linestyle = "dashed") | |||

| plt.legend(["noKVSep", "kvSepBeforeMem", "kvSepBeforeSSD"]) | |||

| plt.show() | |||

| plt.figure(num = 1) | |||

| plt.title("Read Performance(readreverse)") | |||

| plt.xlabel("Value size(B)") | |||

| plt.ylabel("Throughout(MiB/s)") | |||

| l1 = plt.plot(x, y_r[0], "bo", linestyle = "dashed") | |||

| l1 = plt.plot(x, y_r[1], "g^", linestyle = "dashed") | |||

| l1 = plt.plot(x, y_r[2], "y+", linestyle = "dashed") | |||

| plt.legend(["noKVSep", "kvSepBeforeMem", "kvSepBeforeSSD"]) | |||

| plt.show() | |||

| plt.title("Read Performance(readrandom)") | |||

| plt.xlabel("Value size(B)") | |||

| plt.ylabel("Micros/op") | |||

| l1 = plt.plot(x, y_random[0], "bo", linestyle = "dashed") | |||

| l1 = plt.plot(x, y_random[1], "g^", linestyle = "dashed") | |||

| l1 = plt.plot(x, y_random[2], "y+", linestyle = "dashed") | |||

| plt.legend(["noKVSep", "kvSepBeforeMem", "kvSepBeforeSSD"]) | |||

| plt.show() | |||

+ 0

- 50

examples/GCtest.cc

View File

| @ -1,50 +0,0 @@ | |||

| #include <iostream> | |||

| #include "leveldb/db.h" | |||

| #include "leveldb/options.h" | |||

| #include "gtest/gtest.h" | |||

| class LevelDBTest : public ::testing::Test { | |||

| protected: | |||

| leveldb::DB* db; | |||

| leveldb::Options options; | |||

| std::string db_path = "/tmp/testdb"; | |||

| void SetUp() override { | |||

| options.create_if_missing = true; | |||

| leveldb::Status status = leveldb::DB::Open(options, db_path, &db); | |||

| ASSERT_TRUE(status.ok()) << "Failed to open DB: " << status.ToString(); | |||

| } | |||

| void TearDown() override { | |||

| delete db; | |||

| } | |||

| }; | |||

| TEST_F(LevelDBTest, CompactionTest) { | |||

| // 插入数据 | |||

| db->Put(leveldb::WriteOptions(), "start", "value1"); | |||

| db->Put(leveldb::WriteOptions(), "end", "value2"); | |||

| db->Put(leveldb::WriteOptions(), "key_to_delete", "value3"); | |||

| // 删除一个键,模拟删除标记 | |||

| db->Delete(leveldb::WriteOptions(), "key_to_delete"); | |||

| // 触发压缩 | |||

| leveldb::Slice begin_key("start"); | |||

| leveldb::Slice end_key("end"); | |||

| db->CompactRange(&begin_key, &end_key); | |||

| // 验证压缩后的数据 | |||

| std::string value; | |||

| leveldb::Status status = db->Get(leveldb::ReadOptions(), "key_to_delete", &value); | |||

| if (!status.ok()) { | |||

| std::cout << "'key_to_delete' was successfully removed during compaction." << std::endl; | |||

| } else { | |||

| FAIL() << "Unexpected: 'key_to_delete' still exists: " << value; | |||

| } | |||

| } | |||

| int main(int argc, char** argv) { | |||

| ::testing::InitGoogleTest(&argc, argv); | |||

| return RUN_ALL_TESTS(); | |||

| } | |||

+ 0

- 28

examples/ValueConvertTest.cc

View File

| @ -1,28 +0,0 @@ | |||

| #include <cassert> | |||

| #include <iostream> | |||

| #include "leveldb/db.h" | |||

| int main(){ | |||

| leveldb::DB* db; | |||

| leveldb::Options options; | |||

| options.create_if_missing = true; | |||

| options.kvSepType = leveldb::kVSepBeforeSSD; | |||

| leveldb::Status status = leveldb::DB::Open(options, "/tmp/testdb", &db); | |||

| std::cout<< status.ToString() << '\n'; | |||

| std::string fill_str = ""; | |||

| // fill_str 4KB | |||

| for(int i = 1; i<= 4096; i++){ | |||

| fill_str.push_back('%'); | |||

| } | |||

| for(int i = 1E5; i>= 1; i--){ | |||

| status = db -> Put(leveldb::WriteOptions(), "key" + std::to_string(i), "val" + std::to_string(i) + fill_str); | |||

| } | |||

| if(status.ok()) { | |||

| std::string val; | |||

| for(int i = 0; i< 1E5; i++){ | |||

| status = db -> Get(leveldb::ReadOptions(), "key" + std::to_string(i), &val); | |||

| if(status.ok()) std::cout<< "Find value of \'key"<<i<<"\' From db:" << val << "\n"; | |||

| } | |||

| } | |||

| delete db; | |||

| } | |||

+ 0

- 173

examples/WiscKeyTest_1.cc

View File

| @ -1,173 +0,0 @@ | |||

| #include "WiscKeyTest_1.h" | |||

| #include <fstream> | |||

| #include <algorithm> | |||

| #include <vector> | |||

| #include <ctime> | |||

| #include <cstdlib> | |||

| typedef struct WiscKey { // 集成了leveldb数据库和一个logfile链表 | |||

| string dir; | |||

| DB * leveldb; | |||

| FILE * logfile; | |||

| } WK; | |||

| static bool wisckey_get(WK * wk, string &key, string &value) | |||

| { | |||

| cout << "\n\t\tGet Function\n\n"; | |||

| cout << "Key Received: " << key << endl; | |||

| cout << "Value Received: " << value << endl; | |||

| string offsetinfo; | |||

| const bool found = leveldb_get(wk->leveldb, key, offsetinfo); | |||

| if (found) { | |||

| cout << "Offset and Length: " << offsetinfo << endl; | |||

| } | |||

| else { | |||

| cout << "Record:Not Found" << endl; | |||

| return false; | |||

| } | |||

| std::string value_offset; | |||

| std::string value_length; | |||

| std::string s = offsetinfo; | |||

| std::string delimiter = "&&"; | |||

| size_t pos = 0; | |||

| std::string token; | |||

| while ((pos = s.find(delimiter)) != std::string::npos) { | |||

| token = s.substr(0, pos); | |||

| value_offset = token; | |||

| s.erase(0, pos + delimiter.length()); | |||

| } | |||

| value_length = s; | |||

| cout << "Value Offset: " << value_offset << endl; | |||

| cout << "Value Length: " << value_length << endl; | |||

| std::string::size_type sz; | |||