Compare commits

merge into: 10235501447:master


pull from: 10235501447:screenshot


No commits in common. 'master' and 'screenshot' have entirely different histories.

`master...screenshot`


47 changed files with 1001 additions and 809 deletions


- `.gitignore` +0 -3
- `.vscode/settings.json` +5 -0
- `LICENSE` +21 -0
- `README.md` +0 -149
- `image/cropped_Right_1753179393.jpg` (binary)
- `image/cropped_Right_1753179532.jpg` (binary)
- `image/cropped_Right_1753179605.jpg` (binary)
- `img/主平台自动化测试结果.png` (binary; main-platform automated test results)
- `main.py` +0 -0
- `sprint1/sprint1-planning.md` +0 -75
- `sprint1/迭代计划.md` +0 -10 (iteration plan)
- `sprint1/隔空手势识别系统Sprint1.pptx` (binary; mid-air gesture recognition system, Sprint 1)
- `sprint2/WaveControl-sprint2.pptx` (binary)
- `sprint2/sprint2.md` +0 -120
- `sprint3/Sprint 3 技术文档:手势识别与游戏控制.pptx` (binary; Sprint 3 technical document: gesture recognition and game control)
- `sprint3/sprint3.md` +0 -146
- `sprint4/WaveControl-sprint4.pptx` (binary)
- `utils/GUI.py` +127 -0
- `utils/__init__.py` +0 -0
- `utils/__pycache__/finger_drawer.cpython-312.pyc` (binary)
- `utils/__pycache__/finger_drawer.cpython-38.pyc` (binary)
- `utils/__pycache__/gesture_data.cpython-312.pyc` (binary)
- `utils/__pycache__/gesture_data.cpython-38.pyc` (binary)
- `utils/__pycache__/hand_gesture.cpython-312.pyc` (binary)
- `utils/__pycache__/hand_gesture.cpython-38.pyc` (binary)
- `utils/__pycache__/index_finger.cpython-312.pyc` (binary)
- `utils/__pycache__/index_finger.cpython-38.pyc` (binary)
- `utils/__pycache__/kalman_filter.cpython-312.pyc` (binary)
- `utils/__pycache__/kalman_filter.cpython-38.pyc` (binary)
- `utils/__pycache__/model.cpython-312.pyc` (binary)
- `utils/__pycache__/model.cpython-38.pyc` (binary)
- `utils/__pycache__/process_images.cpython-312.pyc` (binary)
- `utils/__pycache__/process_images.cpython-38.pyc` (binary)
- `utils/__pycache__/video_recognition.cpython-312.pyc` (binary)
- `utils/__pycache__/video_recognition.cpython-38.pyc` (binary)
- `utils/finger_drawer.py` +34 -0
- `utils/gesture_data.py` +43 -0
- `utils/gesture_process.py` +24 -0
- `utils/gesture_recognition.ipynb` +437 -0
- `utils/hand_gesture.py` +56 -0
- `utils/index_finger.py` +112 -0
- `utils/kalman_filter.py` +36 -0
- `utils/model.py` +17 -0
- `utils/process_images.py` +24 -0
- `utils/video_recognition.py` +65 -0
- `项目测试文档.md` +0 -306 (project test document)
- `项目测试结果.xlsx` (binary; project test results)

**`.gitignore`** (+0 -3) `@@ -1,3 +0,0 @@`

```text
node_modules/
src-tauri/
~$项目测试结果.xlsx
```

**`.vscode/settings.json`** (+5 -0) `@@ -0,0 +1,5 @@`

```json
{
  "python-envs.defaultEnvManager": "ms-python.python:conda",
  "python-envs.defaultPackageManager": "ms-python.python:conda",
  "python-envs.pythonProjects": []
}
```

**`LICENSE`** (+21 -0) `@@ -0,0 +1,21 @@`

```text
MIT License

Copyright (c) 2024 EzraZephyr

Permission is hereby granted, free of charge, to any person obtaining a copy
of this software and associated documentation files (the "Software"), to deal
in the Software without restriction, including without limitation the rights
to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
copies of the Software, and to permit persons to whom the Software is
furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all
copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE
SOFTWARE.
```

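**`README.md`** (+0 -149) `@@ -1,149 +0,0 @@`

# 📡 WaveControl Mid-Air Gesture Control System

> A touchless human-computer interaction system that combines **gesture recognition** and **voice control**. It consists of three subsystems (the main control platform, the sign-language learning platform WaveSign, and a racing-game control module) and aims to deliver a natural, efficient "raise your hand to control" experience that adapts to many scenarios.

## Project Overview

In settings where touch is impossible or inconvenient, such as kitchens, hospitals, or presentations, traditional mouse/keyboard interaction is slow and restrictive. **WaveControl** starts from this problem and builds a camera-based mid-air control system that combines gesture recognition with speech recognition to drive system-level input (keyboard/mouse), sign-language teaching, and game control.

The project uses a modular architecture with three subsystems:

1. **Main control platform**: window operations, media control, mouse replacement, game mapping, and more
2. **Racing-game interaction system**: mid-air gamepad interaction with racing games such as *Rush Rally Origins*
3. **WaveSign (手语通)**: a sign-language learning and community platform for people with hearing impairments

## Testing

The project ships with a complete [test document](./项目测试文档.md) covering the following submodules:

- ✅ **Main control system test program**: gesture recognition → keyboard/mouse mapping → interaction feedback
- ✅ **Game control test program**: real-time gesture recognition → virtual gamepad signals → racing-game response verification
- ✅ **Sign-language recognition and scoring test program**: real-time camera capture → MediaPipe scoring → UI animation and text feedback
- ✅ **User interaction tests**: core features such as community posts, likes and comments, task management, and schedule reminders are all covered

Tests can be run with browser debugging plus console log tracing. If the page stays blank (white screen), use Chrome DevTools to check module loading and path references.

## Git Branches

| Branch | Description | Link |
| -------------------- | ------------------------------------------------------------ | ------------------------------------------------------------ |
| `master` | Main branch; fully integrated, suitable for demos and deployment | [🔗 master branch](https://gitee.com/wydhhh/software-engineering/tree/master/) |
| `finalv1` | First attempt at front-end/back-end integration; testing gesture → page-response logic | [🔗 finalv1](https://gitee.com/wydhhh/software-engineering/tree/finalv1/) |
| `finalv2` | Gesture recognition wired to front-end events; page-button linkage tests | [🔗 finalv2](https://gitee.com/wydhhh/software-engineering/tree/finalv2/) |
| `finalv3` | Adds speech recognition, gesture-control mode switching, music control, and sign-language platform integration, plus joint debugging and optimization across subsystems | [🔗 finalv3](https://gitee.com/wydhhh/software-engineering/tree/finalv3/) |
| `gesture` | Standalone development of the gesture-recognition logic | [🔗 gesture](https://gitee.com/wydhhh/software-engineering/tree/gesture/) |
| `gesture_for_chrome` | Gesture-control solution built as a Chrome extension (PPT page turning) | [🔗 gesture_for_chrome](https://gitee.com/wydhhh/software-engineering/tree/gesture_for_chrome/) |
| `gesture-game` | Early game-control experiment: moving a ball | [🔗 gesture-game](https://gitee.com/wydhhh/software-engineering/tree/gesture-game/) |
| `game_control` | Controls the racing game *Rush Rally Origins*; acceleration, steering, etc. are supported | [🔗 game_control](https://gitee.com/wydhhh/software-engineering/tree/game_control/) |
| `wavesign` | Main development line of the WaveSign subsystem, including teaching and scoring, community, and schedule | [🔗 wavesign](https://gitee.com/wydhhh/software-engineering/tree/wavesign/) |
| `web` | Original web prototype; static UI drafts | [🔗 web](https://gitee.com/wydhhh/software-engineering/tree/web/) |
| `screenshot` | Gesture-controlled screenshot module for quickly capturing the current screen | [🔗 screenshot](https://gitee.com/wydhhh/software-engineering/tree/screenshot/) |
| `vosk_inc` | Integrates VOSK for speech recognition and real-time captions | [🔗 vosk_inc](https://gitee.com/wydhhh/software-engineering/tree/vosk_inc/) |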

| ## 技术架构 | |||

| | 层级 | 技术方案 | | |||

| | ------------ | ------------------------------------------------------------ | | |||

| | 前端 | vue3 + TypeScript +HTML + CSS + Tailwind CSS + JavaScript + PySide2(Qt GUI) | | |||

| | 后端 | Django 4.x(主平台 + 手语通) + Python 脚本逻辑(游戏控制) | | |||

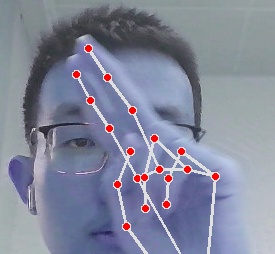

| | 手势识别 | MediaPipe Hand Landmarker | | |||

| | 虚拟设备控制 | 键盘鼠标模拟、vgamepad 虚拟手柄(XInput) | | |||

| | 数据处理 | Kalman Filter(手势抖动滤波)、SQLite3 数据库 | | |||
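The Kalman-filter row above can be illustrated with a minimal NumPy sketch. It assumes the constant-velocity model that `utils/gesture_recognition.ipynb` in this diff sets up (state `[x, y, vx, vy]`, the same `F` and `H`, `P` scaled by 1000, `Q = 0.01·I`) and one filter per hand; the class and method names are hypothetical. Two choices differ deliberately from the notebook: measurement noise is the 2×2 matrix `3·I` (filterpy appears to add a scalar `R` directly to every entry of `H P Hᵀ`, so the notebook's `R = 3` probably does not express independent x/y noise), and `reset` sets `P` to a fixed value instead of multiplying the existing `P` by 1000 again on every reset.

```python
import numpy as np


class ConstantVelocityKalman:
    """Hypothetical 2-D point smoother with state [x, y, vx, vy]."""

    def __init__(self, r: float = 3.0, q: float = 0.01, p0: float = 1000.0):
        # State transition with dt = 1 frame: position += velocity
        self.F = np.array([[1, 0, 1, 0],
                           [0, 1, 0, 1],
                           [0, 0, 1, 0],
                           [0, 0, 0, 1]], dtype=float)
        # Only the position (x, y) is measured
        self.H = np.array([[1, 0, 0, 0],
                           [0, 1, 0, 0]], dtype=float)
        self.R = np.eye(2) * r   # measurement-noise covariance
        self.Q = np.eye(4) * q   # process-noise covariance
        self.p0 = p0
        self.reset(0.0, 0.0)

    def reset(self, x: float, y: float) -> None:
        # Restart at (x, y) with zero velocity and a fixed, large uncertainty
        self.x = np.array([x, y, 0.0, 0.0])
        self.P = np.eye(4) * self.p0

    def step(self, zx: float, zy: float) -> tuple:
        # Predict one frame ahead
        self.x = self.F @ self.x
        self.P = self.F @ self.P @ self.F.T + self.Q
        # Correct with the measured fingertip position
        innovation = np.array([zx, zy]) - self.H @ self.x
        S = self.H @ self.P @ self.H.T + self.R
        K = self.P @ self.H.T @ np.linalg.inv(S)
        self.x = self.x + K @ innovation
        self.P = (np.eye(4) - K @ self.H) @ self.P
        return float(self.x[0]), float(self.x[1])
```

Fed one fingertip position per frame, `step` returns the smoothed point; a larger `r` smooths more but lags more behind fast hand motion.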

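## Modules

### 1️⃣ Main Control Platform (WaveControl)

##### ✌️ **Features**

- Multiple preset gestures (click, scroll, back, etc.)
- Adjustable gesture range and a custom gesture library
- Voice recognition as an auxiliary control channel
- Intuitive interface with real-time status feedback

##### 🎯 Use Cases

- 📺 Watching shows on the sofa: pause or fast-forward with a gesture instead of hunting for the remote
- 👨🏫 Teaching or presenting: turn PPT slides smoothly with gestures
- 🧑🍳 Cooking: browse recipes mid-air without getting the device dirty
- 🏥 Medical or sterile rooms: operate the computer without touching it
- 🕹️ Gaming: control the game with a wave for more immersion

🖼️ **UI Examples**

- Main control panel (accuracy / response time / recognition window)
- Gesture management panel (shortcut-mapping settings)

### 2️⃣ Game Control Module

🔗 Demo: [game control demo video](https://www.bilibili.com/video/BV1H5gLzsEn8?vd_source=46a0e2ec60dfb8a247c96905ee47d378)

🎮 Goal: **play racing games through a camera, no physical gamepad needed!**

Supported games: *Rush Rally Origins* on Steam and other games that support an Xbox controller

**Key implementation points:**

| Feature | Technical notes |
| ------------ | ----------------------------------------------------------- |
| Camera recognition | OpenCV + MediaPipe |
| Gesture mapping | 👍 right thumb up = accelerate; 👍 left hand = brake; ✋ tilt left = turn left, tilt right = turn right |
| Virtual gamepad interface | vgamepad + XInput |
| Jitter filtering | Kalman filter smooths the motion |
| UI feedback | Debug window built with PySide2 |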
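The gesture-mapping row above can be sketched as a pure function from hand pose to gamepad values. This is an illustration under assumptions, not the module's code: tilt is measured from the wrist (landmark 0) to the middle-finger base (landmark 9) in normalized image coordinates, and the 5° dead zone and 45° full-lock range are made-up defaults. `PadState`, `hand_tilt_deg` and `map_to_pad` are hypothetical names. The resulting floats could then be pushed to a virtual XInput pad, for example via vgamepad's `VX360Gamepad` (`left_joystick_float`, `right_trigger_float`, `left_trigger_float`, then `update`); that wiring is not shown.

```python
import math
from dataclasses import dataclass


@dataclass
class PadState:
    steer: float = 0.0     # -1.0 (full left) .. 1.0 (full right)
    throttle: float = 0.0  # 0.0 .. 1.0
    brake: float = 0.0     # 0.0 .. 1.0


def hand_tilt_deg(wrist, middle_mcp) -> float:
    """Signed tilt of the wrist -> middle-finger-base vector from vertical.

    Points are normalized (x, y) with y growing downward, so an upright hand
    gives 0 and a hand leaning to the left of the image gives a negative angle.
    """
    dx = middle_mcp[0] - wrist[0]
    dy = wrist[1] - middle_mcp[1]  # flip y so "up" is positive
    return math.degrees(math.atan2(dx, dy))


def map_to_pad(tilt_deg: float, right_thumb_up: bool, left_thumb_up: bool,
               max_tilt_deg: float = 45.0, dead_zone_deg: float = 5.0) -> PadState:
    # Small tilts inside the dead zone count as driving straight ahead
    if abs(tilt_deg) < dead_zone_deg:
        steer = 0.0
    else:
        steer = max(-1.0, min(1.0, tilt_deg / max_tilt_deg))
    return PadState(
        steer=steer,
        throttle=1.0 if right_thumb_up else 0.0,  # right thumb up = accelerate
        brake=1.0 if left_thumb_up else 0.0,      # left hand gesture = brake
    )
```

A dead zone keeps the car from weaving when the hand is held roughly upright; analog trigger values could replace the 0/1 throttle if a continuous signal were available.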

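### 3️⃣ Sign-Language Subproject: **WaveSign**

🔗 Demo: [WaveSign demo video](https://www.bilibili.com/video/BV1Fig5zHEhq?vd_source=46a0e2ec60dfb8a247c96905ee47d378)

Positioning: a comprehensive platform that helps people with hearing impairments, and their family and friends, learn, practice, and communicate in sign language

**Modules:**

- ✅ **Sign-language teaching and scoring**: upload a video or practice in front of the camera; the system scores the signing and gives feedback
- 🗺️ **Course map and interactive practice**: task-based learning with card-style review exercises
- 👥 **Community**: posting, commenting, liking, following, and more
- 📅 **Schedule management**: built-in to-do list and calendar to help plan study
- 🧭 **Life services**:
  - Travel navigation (maps plus real-time reminders)
  - Assistive-device recommendations
  - Job information
  - Announcements of accessible parent-child and skills activities

**Implementation:**

- Django + SQLite for the user system and service logic
- MediaPipe scores the user's signing in real time
- Responsive front-end pages with card-style interaction

## Team Members

王云岱 朱子玥 杨嘉莉

## 📌 Progress

- ✅ Round 1: system prototype + UI design + gesture-recognition framework
- ✅ Round 2: main platform features + gesture control
- ✅ Round 3: speech recognition connected + game interaction control + first version of the WaveSign platform
- ✅ Round 4: racing-game interaction + complete WaveSign platform
- 🧪 Round 5: integrated testing + user-experience tuning + final demo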

**`image/cropped_Right_1753179393.jpg`** (binary)

**`image/cropped_Right_1753179532.jpg`** (binary)

**`image/cropped_Right_1753179605.jpg`** (binary)

**`img/主平台自动化测试结果.png`** (binary)

**`main.py`** (+0 -0)

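**`sprint1/sprint1-planning.md`** (+0 -75) `@@ -1,75 +0,0 @@`

### PPT1 Title, Date, Members

- Title: Mid-Air Gesture Recognition System Sprint 1
- Date: [specific date]
- Members: [member names]

### PPT2 Motivation

In today's digital era, traditional mouse-and-keyboard interaction has limitations: in some scenarios it is inconvenient or unhygienic. A mid-air gesture recognition system offers a more natural and convenient way to interact and fills a gap in the market for touchless interaction. It can be applied to smart homes, smart offices, educational demonstrations, and many other scenarios, giving users a new kind of experience.

### PPT3 Impact Map

- why?
  - Remove the inconvenience of traditional interaction in special scenarios, e.g. reducing physical contact during an epidemic or avoiding cross-infection in medical settings.
  - Meet users' demand for more natural, convenient interaction and improve the user experience.
  - Help companies make their products more high-tech and competitive and open up new markets.
- who?
  - Ordinary consumers: home entertainment, smart-home control, etc.
  - Office workers: more efficient meeting presentations and office tasks.
  - Educators: more vivid demonstrations while teaching.
  - Medical staff: avoiding cross-infection during medical procedures.
- how?
  - Division of core work: the algorithm team develops the gesture- and speech-recognition algorithms; the software team designs the overall system architecture and the interactive interface; the hardware team selects and develops sensors and other hardware.
  - Technologies: computer vision for gesture recognition, speech recognition for voice interaction, and deep learning to improve recognition accuracy and stability.
- what?
  - Core features: remote mouse-pointer control, click operations, recognition of and response to complex gestures, and speech recognition with command execution.

### PPT4 Brainstorming

- Idea 1: control game characters with gestures for more immersion.
- Idea 2: operate self-service shopping terminals in malls with gestures to speed up shopping.
- Idea 3: while driving, control the in-car entertainment and navigation systems with gestures to improve driving safety.
- Idea 4: in a smart gym, control fitness equipment and choose classes with gestures.
- Idea 5: interact more naturally with gestures in virtual- and augmented-reality scenes.

### PPT5 Basic Design

The system uses a layered architecture with a hardware layer, a driver layer, an algorithm layer, and an application layer. The hardware layer collects data, the driver layer drives the hardware devices and transfers data, the algorithm layer recognizes gestures and speech, and the application layer handles user interaction and business logic.

### PPT6 User Stories

- card1
  - conversation1: A user watching TV in the living room wants to change the channel but does not want to get up to find the remote.
  - confirmation1: The user changes the channel easily with a mid-air gesture, no remote needed.
- card2
  - conversation2: An office worker presenting a document in a meeting wants to turn the page without touching the mouse.
  - confirmation2: The office worker turns pages with a gesture, making the meeting more efficient.
- card3
  - conversation3: A teacher wants to show pictures during class without walking over to the computer.
  - confirmation3: The teacher switches and zooms pictures with gestures, making the lesson more vivid.

### PPT7 Initial Development Plan

- v1 (this Thursday): select and purchase hardware, set up the development environment, and implement a basic gesture-recognition algorithm.
- v2 (next Monday): complete the overall architecture design and make a first link between gesture recognition and the mouse pointer.
- v3 (next Thursday): improve recognition accuracy and stability, implement speech recognition, and run initial tests.
- v4 (final): finish all features, run comprehensive testing and optimization, and prepare for release.

### PPT8 Initial Module Plan

- Submodules: hardware, gesture recognition, speech recognition, interactive interface, business logic.
- Workload comparison: the hardware and algorithm modules are relatively heavy; the interface and business-logic modules are relatively light. As a simple ratio: hardware 30%, gesture recognition 25%, speech recognition 20%, interactive interface 15%, business logic 10%.
- Priority: gesture recognition and hardware come first; speech recognition and the interactive interface come next; business logic has the lowest priority.

### PPT9 Future View

Going forward, we will keep improving the system's performance and features and expand to more application scenarios, for example integrating with more smart-home devices for smarter home control, or applying the system in industry to improve productivity and safety.

### PPT10 User Journey

- Improvement 1: further improve gesture-recognition accuracy and stability to reduce misrecognition.
- Improvement 2: tune speech-recognition sensitivity and accuracy so voice commands are recognized reliably in different environments.
- Improvement 3: make the interface simpler and more intuitive to improve the user experience.
- Improvement 4: make the system respond faster to cut waiting time.
- Improvement 5: add more gestures and voice commands to meet diverse needs.
- Improvement 6: improve compatibility with more operating systems and hardware devices.

### PPT11 Iterative Development

- sprint planning: before each sprint, draw up a detailed plan with clear goals and tasks.
- daily scrum: hold a short daily meeting to share progress and problems.
- sprint review: at the end of each sprint, review and demo the results and collect feedback.
- sprint retrospective: look back on each sprint, analyze problems, and propose improvements.

### PPT12 Thanks

Thank you for listening!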

**`sprint1/迭代计划.md`** (+0 -10) `@@ -1,10 +0,0 @@`

# 📈 Iteration Plan (Sprint Plan)

## 🗓️ Project Timeline

| When | Content |
| -------- | ------------------------------------------------- |
| This Thursday | 🧩 First iteration demo (system architecture design + prototype + UI draft) |
| Next Monday | 🔁 Second iteration demo (close the loop from gesture recognition → system control) |
| Next Thursday | 🎙 Third iteration demo (add voice control, first feature integration) |
| Wednesday after next | 🚀 Fourth iteration report (final complete system delivery + scenario demos) |

**`sprint1/隔空手势识别系统Sprint1.pptx`** (binary)

**`sprint2/WaveControl-sprint2.pptx`** (binary)

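**`sprint2/sprint2.md`** (+0 -120) `@@ -1,120 +0,0 @@`

## 1. Goals of This Iteration

The main goal of this sprint is a **prototype of a browser-based gesture control system** with the following basic features:

- Set up the front-end web application structure and UI pages
- Integrate the MediaPipe gesture-recognition model for real-time gesture detection
- Map some gestures to system control actions (mouse movement, clicks, etc.)
- Build the basic gesture-handling logic and component structure
- Support the flow for sending speech-recognition commands (in preparation for the back-end integration)

------

## 2. Work Completed

| Category | Work |
| -------- | ------------------------------------------------------------ |
| Front-end setup | Project structure, module split, and page initialization based on Vite + Vue 3 + TypeScript |
| Gesture recognition | Integrated the Google MediaPipe WASM model for real-time detection and rendering |
| Control logic | Wrapped `GestureHandler` and `TriggerAction` to map gestures to system operations |
| UI design | Dashboard-style main interface with responsive layout, custom-gesture preview, etc. |
| Model structure | First pass over the `Detector` recognition flow, with unified initialization and inference logic |
| Back-end preparation | Initial Python WebSocket interface to support speech-recognition interaction |

------

## 3. System Architecture Design

```
[User camera]
      ↓
[VideoDetector.vue] → captures video frames and passes them to the detector
      ↓
[Detector.ts] → calls MediaPipe to recognize gestures
      ↓
[GestureHandler.ts] → maps gestures to actions (mouse / keyboard / voice)
      ↓
[TriggerAction.ts] → sends control commands to the back end / system
      ↓
[Python back end] (speech recognition / extended commands) ← connected via WebSocket
```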

------

## 4. Core Tech Stack and Rationale

| Technology | Purpose | Reason |
| ---------------- | -------------------- | ------------------------------------------ |
| Vue 3 + Vite | Front-end framework + build tool | Fast development, fast hot reload, more flexible Composition API |
| TypeScript | Stronger type constraints | Fewer runtime errors, better maintainability |
| MediaPipe Tasks | Gesture-recognition model | Small footprint, runs in the browser, high accuracy |
| WebSocket | Real-time front-end/back-end communication | Keeps interaction smooth and real-time |
| Python + FastAPI | Back-end extension interface (voice) | Quick to build APIs; can later run Python models |

------

## 5. Main Features

### 1. Detector class

- Initializes the WASM model and the recognizer
- Wraps the per-frame `detect()` call
- Exposes `landmarks`, `gestures`, and `handedness`

### 2. Gesture recognition and mapping

- Index finger only → move the mouse
- Index + middle finger → mouse click
- Index finger + thumb pinch → scroll
- Four fingers up → send a shortcut key
- Little finger + thumb → start speech recognition (front-end WebSocket)

### 3. UI modules

- Live video preview window
- Sub-window: gesture feedback / executed-action hints
- Configuration panel: toggle detection / configure action mappings

------

## 6. Recognition Code Example (Key Gestures)

```ts
if (gesture === HandGesture.ONLY_INDEX_UP) {
  this.triggerAction.moveMouse(x, y)
}
if (gesture === HandGesture.INDEX_AND_THUMB_UP) {
  this.triggerAction.scrollUp()
}
```

- After a gesture is matched, the wrapped WebSocket + system interface is called to send the action
- `TriggerAction` is reusable

------

## 7. Problems and Solutions

| Problem | Solution |
| --------------------------------- | ---------------------------------------------------- |
| WebSocket repeatedly disconnects after connecting | Check the connection state (`readyState !== OPEN`) to avoid sending too early |
| Tauri plugin call fails with `invoke undefined` | Start the app with `npm run tauri dev` instead of opening it in a plain browser |
| Routes lost after a page refresh (404) | Switch Vue Router to `createWebHashHistory()` mode |
| Model not loaded or error messages shown | Add loading-state control and catch initialization exceptions |
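The first fix in the table (do not send before the socket is open) can be sketched in Python, independent of any WebSocket library. `GuardedSender` is a hypothetical name; the connection check and the raw send function are injected as callables, and the JSON command shape is an assumption. Queueing unsent commands and flushing them once the connection opens goes one step beyond the `readyState` check described above.

```python
import json
from collections import deque
from typing import Callable, Deque


class GuardedSender:
    """Send only while the socket is open; otherwise queue the command."""

    def __init__(self, is_open: Callable[[], bool], raw_send: Callable[[str], None],
                 max_pending: int = 100):
        self._is_open = is_open
        self._raw_send = raw_send
        # Bounded queue: when full, the oldest pending command is dropped
        self._pending: Deque[str] = deque(maxlen=max_pending)

    def send(self, command: dict) -> bool:
        message = json.dumps(command)
        if not self._is_open():
            self._pending.append(message)
            return False
        self.flush()          # deliver older commands first, preserving order
        self._raw_send(message)
        return True

    def flush(self) -> None:
        # Also suitable to call from an "on open" callback after (re)connecting
        while self._pending and self._is_open():
            self._raw_send(self._pending.popleft())
```

Whether stale commands should be replayed at all is a design choice: for mouse movement it is usually better to drop them (`max_pending=0`-style behavior), while one-off commands such as starting speech recognition may be worth keeping.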

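------

## 8. Future Plans (Sprint 2 Preview)

| Direction | Description |
| ----------------- | --------------------------------------------------- |
| More gestures | Three-finger scrolling, multi-finger triggers for multiple functions, combined two-hand judgment |
| Custom .task model | Let users collect data and build a custom model to replace the current MediaPipe model |
| Back-end voice commands | Recognize speech with the Vosk model and trigger system control |
| Settings management | UI for user-defined shortcuts and gesture configuration |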

**`sprint3/Sprint 3 技术文档:手势识别与游戏控制.pptx`** (binary)

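**`sprint3/sprint3.md`** (+0 -146) `@@ -1,146 +0,0 @@`

# Sprint 3 Technical Document

**Closing the recognition-to-control loop + game-control support**

------

## 1. Overview

This stage implements a browser-based gesture-recognition system that combines MediaPipe and WebSocket to cover the full pipeline from camera gesture recognition → system control (mouse, scrolling, keyboard) → controlling a mini-game character.

It adds support for several new system-control and game actions, including moving left/right, jumping, and combined jumps.

------

## 2. Module Overview

Module responsibilities:

- **Detector.ts**: loads and initializes the MediaPipe gesture model, detects hand landmarks and gesture state, and calls `GestureHandler`.
- **GestureHandler.ts**: maps recognized gestures to system operations (mouse, scrolling, keyboard commands, etc.), with consecutive-frame confirmation, smoothing, and action throttling.
- **TriggerAction.ts**: wraps WebSocket communication and sends control commands to the back end as JSON.

```
[Camera video stream]
      ↓
[VideoDetector.vue]
  - Captures video frames
  - Shows the live picture
      ↓
[Detector.ts]
  - Recognizes gestures with the MediaPipe Tasks WASM model
  - Returns landmarks and the gesture type
      ↓
[GestureHandler.ts]
  - Turns recognition results into actions
  - Recognizes specific combinations, e.g. index + thumb → scroll
      ↓
[TriggerAction.ts]
  - Sends control commands over WebSocket
  - Controls mouse / keyboard / back-end actions
      ↓
[Python back end]
  - WebSocket endpoint receives the commands
  - Prepared for the voice interface / control hub
```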

------

## 3. Recognized Gestures (Extended)

For every frame, the raised/folded state of each finger (thumb to little finger, 0 or 1) is joined into a state string, which is then looked up in the table below:

| State string | Gesture name | Action |
| ----------- | ------------------- | ------------------------- |
| `0,1,0,0,0` | only_index_up | Move the mouse |
| `1,1,0,0,0` | index_and_thumb_up | Mouse click |
| `0,0,1,1,1` | scroll_gesture_2 | Page scroll |
| `1,0,1,1,1` | scroll_gesture_2 | Page scroll (compatible variant) |
| `0,1,1,1,1` | four_fingers_up | Send a shortcut (e.g. play/fullscreen) |
| `1,1,1,1,1` | stop_gesture | Pause/resume recognition |
| `1,0,0,0,1` | voice_gesture_start | Start speech recognition |
| `0,0,0,0,0` | voice_gesture_stop | Stop speech recognition |
| `0,1,1,0,0` | jump | Character jumps (Up key) |
| `1,1,1,0,0` | rightjump | Character jumps right (Right + Up combination) |
| Custom check | direction_right | Character moves right (hold Right key) |
| Custom check | delete_gesture | Character moves left (hold Left key) |
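The state-string lookup described above can be sketched in Python; the project's own implementation lives in the TypeScript front end and is not part of this diff. The table entries are copied from the section above, while `non_thumb_fingers_up` and `classify` are hypothetical helpers. The raised-finger test (tip above its PIP joint, i.e. smaller y) is a common heuristic assumed here; it only holds for a roughly upright hand, and the thumb flag is left to the caller because its test depends on handedness and mirroring. The two "custom check" gestures are intentionally absent from the table and come back as `None`.

```python
from typing import List, Optional, Sequence, Tuple

# Finger order: thumb, index, middle, ring, little (1 = raised, 0 = folded)
GESTURE_TABLE = {
    "0,1,0,0,0": "only_index_up",
    "1,1,0,0,0": "index_and_thumb_up",
    "0,0,1,1,1": "scroll_gesture_2",
    "1,0,1,1,1": "scroll_gesture_2",
    "0,1,1,1,1": "four_fingers_up",
    "1,1,1,1,1": "stop_gesture",
    "1,0,0,0,1": "voice_gesture_start",
    "0,0,0,0,0": "voice_gesture_stop",
    "0,1,1,0,0": "jump",
    "1,1,1,0,0": "rightjump",
}


def non_thumb_fingers_up(landmarks: Sequence[Tuple[float, float]]) -> List[int]:
    """Raised flags for index..little from 21 normalized (x, y) hand landmarks."""
    tip_pip_pairs = ((8, 6), (12, 10), (16, 14), (20, 18))  # MediaPipe indices
    # Image y grows downward, so "above" means a smaller y value
    return [int(landmarks[tip][1] < landmarks[pip][1]) for tip, pip in tip_pip_pairs]


def classify(states: Sequence[int]) -> Optional[str]:
    """Join the five flags into a state string; None when the string is unmapped."""
    key = ",".join(str(int(s)) for s in states)
    return GESTURE_TABLE.get(key)
```

A dictionary keyed by the joined string keeps the gesture set data-driven: adding a gesture is a one-line table change rather than another `if` branch.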

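------

## 4. Control Logic

### Mouse control

- **Move**: the index-fingertip position is mapped to screen coordinates, with smoothing to reduce jitter.
- **Click**: index finger and thumb raised together; throttled to prevent repeated clicks.
- **Scroll**: after a pinch (thumb + index finger), moving up or down sets the scroll direction, with a threshold check.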
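The three mouse behaviors above rest on small pieces of arithmetic, sketched here in Python under stated assumptions. `to_screen` maps a normalized fingertip position inside a central box (the `margin` is a made-up default) to screen pixels; `Smoother` moves the cursor a fraction `1/smoothening` of the way toward each new target, which is one common reading of a `smoothening` coefficient but not confirmed by the source; `is_pinched` compares the thumb-tip/index-tip distance (MediaPipe landmarks 4 and 8) with a hypothetical threshold in normalized units. All three names are hypothetical.

```python
import math
from typing import Optional, Tuple


def to_screen(x_norm: float, y_norm: float, screen_w: int, screen_h: int,
              margin: float = 0.1) -> Tuple[float, float]:
    """Map a normalized point inside a central box to clamped screen pixels."""
    def scale(v: float, size: int) -> float:
        t = (v - margin) / (1 - 2 * margin)
        return min(max(t, 0.0), 1.0) * (size - 1)
    return scale(x_norm, screen_w), scale(y_norm, screen_h)


class Smoother:
    """Exponential-style smoothing: step 1/smoothening of the way to the target."""

    def __init__(self, smoothening: float = 5.0):
        self.smoothening = smoothening
        self.prev: Optional[Tuple[float, float]] = None

    def update(self, x: float, y: float) -> Tuple[float, float]:
        if self.prev is None:
            self.prev = (x, y)  # first frame: jump straight to the target
        else:
            px, py = self.prev
            self.prev = (px + (x - px) / self.smoothening,
                         py + (y - py) / self.smoothening)
        return self.prev


def is_pinched(thumb_tip: Tuple[float, float], index_tip: Tuple[float, float],
               threshold: float = 0.05) -> bool:
    # Pinch state = thumb tip and index tip closer than the threshold
    return math.dist(thumb_tip, index_tip) < threshold
```

The margin lets the fingertip reach the screen edges without leaving the camera frame, and a larger `smoothening` trades responsiveness for a steadier cursor.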

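### Shortcuts / system operations

- Four fingers up → send a custom key (e.g. fullscreen, pause, next episode).
- Five fingers up → pause/resume recognition, with a progress indicator.
- Delete gesture (right thumb extended, other fingers folded) → repeatedly sends Backspace/arrow keys.
- A custom `holdKey` method simulates a long key press.

### Game-control extension (keyboard simulation)

| Gesture name | Action |
| --------------- | -------------------- |
| delete_gesture | Move left (hold Left key) |
| direction_right | Move right (hold Right key) |
| jump | Jump (Up key) |
| rightjump | Jump right (Right, then Up after a delay) |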
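The long-press and "Right, then Up after a delay" behaviors can be sketched with an injected key backend, so the timing logic is testable without a real keyboard. `KeySequencer` is a hypothetical name; in a real setup `press`/`release` would come from a keyboard-simulation library (for example pynput's keyboard `Controller.press`/`release`, which take its own key objects rather than strings). Holding Right while Up is tapped is an assumption about what `rightjump` does.

```python
import time
from typing import Callable


class KeySequencer:
    """Timed key actions on top of injected press/release/sleep functions."""

    def __init__(self, press: Callable[[str], None], release: Callable[[str], None],
                 sleep: Callable[[float], None] = time.sleep):
        self.press, self.release, self.sleep = press, release, sleep

    def tap(self, key: str) -> None:
        self.press(key)
        self.release(key)

    def hold_key(self, key: str, duration: float) -> None:
        # Long press: keep the key down for `duration` seconds
        self.press(key)
        try:
            self.sleep(duration)
        finally:
            self.release(key)  # never leave a key stuck down

    def right_jump(self, delay: float = 0.05) -> None:
        # Start moving right, tap Up after a short delay, then let go of Right
        self.press("right")
        try:
            self.sleep(delay)
            self.tap("up")
        finally:
            self.release("right")
```

The `try/finally` matters in a game loop: if the backend raises mid-sequence, the held key is still released instead of driving the character off the screen.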

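------

## 5. Performance and Stability

| Problem | Solution |
| ------------------ | ------------------------------------------------------ |
| Gesture misrecognition | Consecutive-frame confirmation (minGestureCount ≥ 5) |
| Mouse jitter | Smoothing coefficient `smoothening` applied to screen coordinates |
| Repeated action triggers | Throttle clicks/scrolls/shortcuts/arrow keys (e.g. `CLICK_INTERVAL`) |
| WebSocket interruption | Automatic reconnect after an abnormal disconnect, with a retry interval |
| Accidental scrolling | A thumb-index distance threshold decides whether the hand is in the "pinch" state |
| UI status out of sync | Update through a global store (e.g. `app_store.sub_window_info`) |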
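The first and third rows (consecutive-frame confirmation and throttling) are small state machines; a Python sketch follows. `GestureConfirmer` and `Throttle` are hypothetical names standing in for the `minGestureCount` and `CLICK_INTERVAL` logic in the TypeScript front end, and the injectable clock is there only so the timing can be tested.

```python
import time
from typing import Callable, Optional


class GestureConfirmer:
    """Report a gesture only after it has been seen in `min_count` consecutive frames."""

    def __init__(self, min_count: int = 5):
        self.min_count = min_count
        self._last: Optional[str] = None
        self._count = 0

    def feed(self, gesture: Optional[str]) -> Optional[str]:
        if gesture == self._last:
            self._count += 1
        else:
            # A different label (or no hand) restarts the streak
            self._last, self._count = gesture, 1
        return gesture if self._count >= self.min_count else None


class Throttle:
    """Let an action fire at most once per `interval` seconds."""

    def __init__(self, interval: float, clock: Callable[[], float] = time.monotonic):
        self.interval = interval
        self.clock = clock
        self._last_fire = float("-inf")

    def ready(self) -> bool:
        now = self.clock()
        if now - self._last_fire >= self.interval:
            self._last_fire = now
            return True
        return False
```

Used together, a click fires only when `confirmer.feed(label) == "index_and_thumb_up"` and `click_throttle.ready()` both hold; the confirmer filters flicker, while the throttle stops a held gesture from clicking every frame.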

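------

## 6. Technical Highlights

- The MediaPipe WASM model runs locally in the browser for low-latency recognition
- All actions are modular, making it easy to add new gestures and control commands
- Gesture control talks to the back end in real time over WebSocket through a stable interface
- Supports game scenarios (virtual-character control) with real interactive demo capability
- Configurable control logic: recognition area, shortcut content, gesture sensitivity

------

## 7. Suggested Next Steps

| Direction | Goal |
| -------------------- | ----------------------------------------------- |
| Left-hand gesture combinations | Allow two-hand cooperative control, e.g. pinch + four fingers |
| Better model accuracy | Support custom MediaPipe .task files and self-collected training samples |
| UI settings panel | Custom gesture → action mapping, threshold tuning, shortcut editing |
| Voice control | Parse voice commands with Whisper/Vosk and combine them with gestures |
| Multi-mode switching | Switchable interaction modes such as video control, PPT control, and game control |
| Scenario demos / video recording | Record real interaction videos for demos or launch publicity |

------

## 8. Conclusion

This iteration closes the loop from gesture recognition to control actions, adds game-character control (multi-directional movement and jumping), and makes real progress on smoothness, accuracy, and decoupling. Future work can extend the system around user configurability, integration with speech recognition, and adaptation to more scenarios.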

**`sprint4/WaveControl-sprint4.pptx`** (binary)

**`utils/GUI.py`** (+127 -0) `@@ -0,0 +1,127 @@`

```python
import traceback
import tkinter as tk
from tkinter import filedialog, messagebox

import cv2

from video_recognition import upload_and_process_video, show_frame
from process_images import HandGestureProcessor

# Track the current mode and the camera resource
current_mode = None
current_cap = None


# Main GUI initialization logic
def create_gui():
    try:
        print("Creating the GUI")
        root = tk.Tk()
        root.title("Gesture Recognition")
        root.geometry("800x600")
        print("GUI window created")

        canvas = tk.Canvas(root, width=640, height=480)
        canvas.pack()
        print("Canvas created")

        camera_button = tk.Button(
            root,
            text="Use Camera for Real-time Recognition",
            command=lambda: switch_to_camera(canvas)
        )
        camera_button.pack(pady=10)
        print("Camera button created")

        video_button = tk.Button(
            root,
            text="Upload Video File for Processing",
            command=lambda: select_and_process_video(canvas, root)
        )
        video_button.pack(pady=10)
        print("Video upload button created")

        print("GUI ready, entering the main loop")
        root.mainloop()
    except Exception as e:
        print(f"[ERROR] Exception while creating the GUI: {e}")
        print(traceback.format_exc())


# Switch to real-time camera recognition
def switch_to_camera(canvas):
    global current_mode, current_cap
    # Stop the current operation and release the camera
    stop_current_operation()

    # Set the mode to camera and clear the canvas
    current_mode = "camera"
    canvas.delete("all")

    # Open the default camera (device index 0)
    current_cap = cv2.VideoCapture(0)
    if not current_cap.isOpened():
        messagebox.showerror("Error", "Cannot open camera")
        current_mode = None
        return

    # Start recognition, passing in the canvas and current_cap
    start_camera(canvas, current_cap)


# Switch to video-file processing
def select_and_process_video(canvas, root):
    global current_mode
    stop_current_operation()
    current_mode = "video"
    canvas.delete("all")

    # Let the user pick a video file
    video_path = filedialog.askopenfilename(
        title="Select a Video File",
        filetypes=(("MP4 files", "*.mp4"), ("AVI files", "*.avi"), ("All files", "*.*"))
    )
    if not video_path:
        return

    cap = cv2.VideoCapture(video_path)
    if not cap.isOpened():
        messagebox.showerror("Error", "Cannot open video file")
        return

    # Read the video's width and height, then resize the canvas and window to match
    frame_width = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH))
    frame_height = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))
    cap.release()
    canvas.config(width=frame_width, height=frame_height)
    root.geometry(f"{frame_width + 160}x{frame_height + 200}")

    # Process the selected video file
    error_message = upload_and_process_video(canvas, video_path)
    if error_message:
        messagebox.showerror("Error", error_message)


# Stop the current operation: release the camera and close all OpenCV windows
def stop_current_operation():
    global current_cap
    if current_cap and current_cap.isOpened():
        current_cap.release()
    cv2.destroyAllWindows()
    current_cap = None


# Start real-time hand-gesture recognition on an opened camera
# (this local definition is the start_camera used by switch_to_camera)
def start_camera(canvas, cap):
    if not cap.isOpened():
        return "Cannot open camera"
    gesture_processor = HandGestureProcessor()
    show_frame(canvas, cap, gesture_processor)


if __name__ == "__main__":
    create_gui()
```

**`utils/__init__.py`** (+0 -0)

**`utils/__pycache__/finger_drawer.cpython-312.pyc`** (binary)

**`utils/__pycache__/finger_drawer.cpython-38.pyc`** (binary)

**`utils/__pycache__/gesture_data.cpython-312.pyc`** (binary)

**`utils/__pycache__/gesture_data.cpython-38.pyc`** (binary)

**`utils/__pycache__/hand_gesture.cpython-312.pyc`** (binary)

**`utils/__pycache__/hand_gesture.cpython-38.pyc`** (binary)

**`utils/__pycache__/index_finger.cpython-312.pyc`** (binary)

**`utils/__pycache__/index_finger.cpython-38.pyc`** (binary)

**`utils/__pycache__/kalman_filter.cpython-312.pyc`** (binary)

**`utils/__pycache__/kalman_filter.cpython-38.pyc`** (binary)

**`utils/__pycache__/model.cpython-312.pyc`** (binary)

**`utils/__pycache__/model.cpython-38.pyc`** (binary)

**`utils/__pycache__/process_images.cpython-312.pyc`** (binary)

**`utils/__pycache__/process_images.cpython-38.pyc`** (binary)

**`utils/__pycache__/video_recognition.cpython-312.pyc`** (binary)

**`utils/__pycache__/video_recognition.cpython-38.pyc`** (binary)

**`utils/finger_drawer.py`** (+34 -0) `@@ -0,0 +1,34 @@`

```python
import cv2


class FingerDrawer:
    @staticmethod
    def draw_finger_points(image, hand_21, temp_handness, width, height):
        # The z of landmark 0 (the wrist, i.e. the palm base) is the depth reference
        cz0 = hand_21.landmark[0].z
        index_finger_tip_str = ''
        for i in range(21):
            cx = int(hand_21.landmark[i].x * width)
            cy = int(hand_21.landmark[i].y * height)
            cz = hand_21.landmark[i].z
            depth_z = cz0 - cz
            # Scale the dot radius by depth relative to the wrist
            radius = max(int(6 * (1 + depth_z * 5)), 0)

            # Draw each joint group in its own color
            if i == 0:  # wrist
                image = cv2.circle(image, (cx, cy), radius, (255, 255, 0), thickness=-1)
            elif i == 8:  # index fingertip; also record its relative depth
                image = cv2.circle(image, (cx, cy), radius, (255, 165, 0), thickness=-1)
                index_finger_tip_str += f'{temp_handness}:{depth_z:.2f}, '
            elif i in [1, 5, 9, 13, 17]:  # thumb CMC and finger base (MCP) joints
                image = cv2.circle(image, (cx, cy), radius, (0, 0, 255), thickness=-1)
            elif i in [2, 6, 10, 14, 18]:  # thumb MCP and finger PIP joints
                image = cv2.circle(image, (cx, cy), radius, (75, 0, 130), thickness=-1)
            elif i in [3, 7, 11, 15, 19]:  # thumb IP and finger DIP joints
                image = cv2.circle(image, (cx, cy), radius, (238, 130, 238), thickness=-1)
            elif i in [4, 12, 16, 20]:  # remaining fingertips
                image = cv2.circle(image, (cx, cy), radius, (0, 255, 255), thickness=-1)
        return image, index_finger_tip_str
```
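The radius line in `draw_finger_points` is easier to read with numbers. MediaPipe reports landmark `z` so that smaller values are closer to the camera, so `depth_z = wrist_z - z` is positive for joints in front of the wrist. The standalone helper below (`depth_radius` is a hypothetical name) reproduces the same expression so it can be checked without OpenCV: each 0.01 of relative depth changes the radius by 6 × 5 × 0.01 = 0.3 px before truncation, and joints far enough behind the wrist are clamped to 0.

```python
def depth_radius(wrist_z: float, z: float, base: int = 6, gain: float = 5.0) -> int:
    """Same formula as draw_finger_points: max(int(base * (1 + (wrist_z - z) * gain)), 0)."""
    depth_z = wrist_z - z           # > 0 when the joint is closer to the camera than the wrist
    radius = base * (1 + depth_z * gain)
    return max(int(radius), 0)      # int() truncates toward zero; negatives clamp to 0


# Level with the wrist, 0.1 closer, and 0.3 farther away
print(depth_radius(0.0, 0.0), depth_radius(0.0, -0.1), depth_radius(0.0, 0.3))
```

With the defaults this prints `6 9 0`; any joint more than `1 / gain` = 0.2 behind the wrist gets a zero radius.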

**`utils/gesture_data.py`** (+43 -0) `@@ -0,0 +1,43 @@`

```python
from collections import deque


class HandState:
    """Gesture state for both hands.

    Left- and right-hand information is stored separately (keyed by
    'Left' / 'Right'); sharing it would let the two hands' data conflict.
    """

    def __init__(self):
        self.gesture_locked = {'Left': False, 'Right': False}
        self.gesture_start_time = {'Left': 0, 'Right': 0}
        self.buffer_start_time = {'Left': 0, 'Right': 0}
        self.start_drag_time = {'Left': 0, 'Right': 0}
        self.dragging = {'Left': False, 'Right': False}
        self.drag_point = {'Left': (0, 0), 'Right': (0, 0)}
        self.buffer_duration = {'Left': 0.25, 'Right': 0.25}
        self.is_index_finger_up = {'Left': False, 'Right': False}
        self.index_finger_second = {'Left': 0, 'Right': 0}
        self.index_finger_tip = {'Left': 0, 'Right': 0}
        self.trajectory = {'Left': [], 'Right': []}
        self.square_queue = deque()
        self.wait_time = 1.5
        self.kalman_wait_time = 0.5
        self.wait_box = 2
        self.rect_draw_time = {'Left': 0, 'Right': 0}
        self.last_drawn_box = {'Left': None, 'Right': None}

    def clear_hand_states(self, detected_hand='Both'):
        # Reset every hand except detected_hand; 'Both' resets both hands
        hands_to_clear = {'Left', 'Right'}
        if detected_hand != 'Both':
            hands_to_clear.discard(detected_hand)

        for h in hands_to_clear:
            self.gesture_locked[h] = False
            self.gesture_start_time[h] = 0
            self.buffer_start_time[h] = 0
            self.dragging[h] = False
            self.drag_point[h] = (0, 0)
            self.buffer_duration[h] = 0.25
            self.is_index_finger_up[h] = False
            self.trajectory[h].clear()
            self.start_drag_time[h] = 0
            self.rect_draw_time[h] = 0
            self.last_drawn_box[h] = None
```
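`HandState` keeps one dictionary per field, and `clear_hand_states` must list every field it resets; it currently leaves `index_finger_second` and `index_finger_tip` untouched, perhaps intentionally. An alternative layout, sketched below under that framing, groups the fields per hand into one record so a reset is simply "replace with a fresh record". `PerHandState` (holding only a subset of the original fields) and both helper functions are hypothetical; `hands_to_clear` reproduces the original semantics, where the argument names the hand that *was* detected.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set, Tuple

HANDS = ("Left", "Right")


@dataclass
class PerHandState:
    """Hypothetical per-hand record covering a subset of HandState's fields."""
    gesture_locked: bool = False
    dragging: bool = False
    drag_point: Tuple[int, int] = (0, 0)
    buffer_duration: float = 0.25
    trajectory: List[Tuple[int, int]] = field(default_factory=list)
    last_drawn_box: Optional[Tuple[int, int, int, int]] = None


def hands_to_clear(detected_hand: str = "Both") -> Set[str]:
    # Same rule as clear_hand_states: 'Both' clears both, otherwise clear the other hand
    hands = set(HANDS)
    if detected_hand != "Both":
        hands.discard(detected_hand)
    return hands


def clear_hand_states(states: Dict[str, PerHandState], detected_hand: str = "Both") -> None:
    for h in hands_to_clear(detected_hand):
        states[h] = PerHandState()  # fresh defaults, including a new empty trajectory
```

With a dataclass, a new field only needs a default value to be reset correctly, which removes the risk of forgetting it in a hand-written reset list.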

**`utils/gesture_process.py`** (+24 -0) `@@ -0,0 +1,24 @@`

```python
import time

import cv2

from hand_gesture import HandGestureHandler


class HandGestureProcessor:
    def __init__(self):
        self.hand_handler = HandGestureHandler()

    def process_image(self, image):
        start_time = time.time()
        # Get the image size, mirror the frame, and convert BGR -> RGB
        height, width = image.shape[:2]
        image = cv2.flip(image, 1)
        image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

        image = self.hand_handler.handle_hand_gestures(image, width, height)

        # Compute and overlay the frame rate from this frame's processing time
        spend_time = time.time() - start_time
        fps = 1.0 / spend_time if spend_time > 0 else 0
        image = cv2.putText(image, f'FPS {int(fps)}', (25, 50),
                            cv2.FONT_HERSHEY_SIMPLEX, 1.25, (0, 0, 255), 2)
        return image
```
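The FPS shown by `process_image` is `1 / (processing time of one frame)`, measured from the start of `process_image` until just before the text is drawn. It therefore excludes camera reads and display and jumps from frame to frame. A steadier alternative is sketched below: `FpsMeter` (a hypothetical name) is called once per displayed frame and averages over a sliding window of timestamps taken with `time.perf_counter`; the window size of 30 is an arbitrary default.

```python
import time
from collections import deque
from typing import Callable, Deque


class FpsMeter:
    """Frames per second over a sliding window of recent frame timestamps."""

    def __init__(self, window: int = 30, clock: Callable[[], float] = time.perf_counter):
        self.clock = clock
        self._stamps: Deque[float] = deque(maxlen=window)

    def tick(self) -> float:
        # Call once per displayed frame; returns 0.0 until two frames are seen
        self._stamps.append(self.clock())
        if len(self._stamps) < 2:
            return 0.0
        span = self._stamps[-1] - self._stamps[0]
        return (len(self._stamps) - 1) / span if span > 0 else 0.0
```

Because it measures the interval between frames rather than the work inside one frame, this figure includes capture and rendering time and matches the frame rate the user actually sees.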

+ 437

- 0

utils/gesture_recognition.ipynb

View File

| @ -0,0 +1,437 @@ | |||

| { | |||

| "cells": [ | |||

| { | |||

| "cell_type": "code", | |||

| "execution_count": 1, | |||

| "id": "initial_id", | |||

| "metadata": { | |||

| "ExecuteTime": { | |||

| "end_time": "2024-09-07T05:11:28.761076Z", | |||

| "start_time": "2024-09-07T05:11:22.404354Z" | |||

| }, | |||

| "collapsed": true | |||

| }, | |||

| "outputs": [], | |||

| "source": [ | |||

| "import cv2\n", | |||

| "import time\n", | |||

| "import mediapipe\n", | |||

| "import numpy as np\n", | |||

| "from collections import deque\n", | |||

| "from filterpy.kalman import KalmanFilter" | |||

| ] | |||

| }, | |||

| { | |||

| "cell_type": "code", | |||

| "execution_count": 2, | |||

| "id": "40aada17ccd31fe", | |||

| "metadata": { | |||

| "ExecuteTime": { | |||

| "end_time": "2024-09-07T05:11:28.777139Z", | |||

| "start_time": "2024-09-07T05:11:28.761076Z" | |||

| } | |||

| }, | |||

| "outputs": [], | |||

| "source": [ | |||

| "gesture_locked = {'Left':False,'Right':False}\n", | |||

| "gesture_start_time = {'Left':0,'Right':0}\n", | |||

| "buffer_start_time = {'Left':0,'Right':0}\n", | |||

| "start_drag_time = {'Left':0,'Right':0}\n", | |||

| "dragging = {'Left':False,'Right':False}\n", | |||

| "drag_point = {'Left':(0, 0),'Right':(0, 0)}\n", | |||

| "buffer_duration = {'Left':0.25,'Right':0.25}\n", | |||

| "is_index_finger_up = {'Left':False,'Right':False}\n", | |||

| "index_finger_second = {'Left':0,'Right':0}\n", | |||

| "index_finger_tip = {'Left':0,'Right':0}\n", | |||

| "trajectory = {'Left':[],'Right':[]}\n", | |||

| "square_queue = deque()\n", | |||

| "wait_time = 1.5\n", | |||

| "kalman_wait_time = 0.5\n", | |||

| "wait_box = 2\n", | |||

| "rect_draw_time = {'Left':0,'Right':0}\n", | |||

| "last_drawn_box = {'Left':None,'Right':None}\n", | |||

| "elapsed_time = {'Left':0,'Right':0}" | |||

| ] | |||

| }, | |||

| { | |||

| "cell_type": "code", | |||

| "execution_count": 3, | |||

| "id": "2ee9323bb1c25cc0", | |||

| "metadata": { | |||

| "ExecuteTime": { | |||

| "end_time": "2024-09-07T05:11:28.824573Z", | |||

| "start_time": "2024-09-07T05:11:28.777139Z" | |||

| } | |||

| }, | |||

| "outputs": [], | |||

| "source": [ | |||

| "def clear_hand_states(detected_hand ='Both'):\n", | |||

| " global gesture_locked, gesture_start_time, buffer_start_time, dragging, drag_point, buffer_duration,is_index_finger_up, trajectory,wait_time,kalman_wait_time, start_drag_time, rect_draw_time, last_drawn_box, wait_box, elapsed_time\n", | |||

| " \n", | |||

| " hands_to_clear = {'Left', 'Right'}\n", | |||

| " if detected_hand == 'Both':\n", | |||

| " hands_to_clear = hands_to_clear\n", | |||

| " else:\n", | |||

| " hands_to_clear -= {detected_hand}\n", | |||

| " # 反向判断左右手\n", | |||

| "\n", | |||

| " for h in hands_to_clear:\n", | |||

| " gesture_locked[h] = False\n", | |||

| " gesture_start_time[h] = 0\n", | |||

| " buffer_start_time[h] = 0\n", | |||

| " dragging[h] = False\n", | |||

| " drag_point[h] = (0, 0)\n", | |||

| " buffer_duration[h] = 0.25\n", | |||

| " is_index_finger_up[h] = False\n", | |||

| " trajectory[h].clear()\n", | |||

| " start_drag_time[h] = 0\n", | |||

| " rect_draw_time[h] = 0\n", | |||

| " last_drawn_box[h] = None\n", | |||

| " elapsed_time[h] = 0\n", | |||

| " # reset the hand(s) that were not detected" | |||

| ] | |||

| }, | |||

| { | |||

| "cell_type": "code", | |||

| "execution_count": 4, | |||

| "id": "96cf431d2562e7d", | |||

| "metadata": { | |||

| "ExecuteTime": { | |||

| "end_time": "2024-09-07T05:11:28.855831Z", | |||

| "start_time": "2024-09-07T05:11:28.824573Z" | |||

| } | |||

| }, | |||

| "outputs": [], | |||

| "source": [ | |||

| "kalman_filters = {\n", | |||

| " 'Left': KalmanFilter(dim_x=4, dim_z=2),\n", | |||

| " 'Right': KalmanFilter(dim_x=4, dim_z=2)\n", | |||

| "}\n", | |||

| "\n", | |||

| "for key in kalman_filters:\n", | |||

| " kalman_filters[key].x = np.array([0., 0., 0., 0.])\n", | |||

| " kalman_filters[key].F = np.array([[1, 0, 1, 0], [0, 1, 0, 1], [0, 0, 1, 0], [0, 0, 0, 1]])\n", | |||

| " # state transition matrix (constant-velocity model)\n", | |||

| " kalman_filters[key].H = np.array([[1, 0, 0, 0], [0, 1, 0, 0]])\n", | |||

| " # measurement matrix (only position is observed)\n", | |||

| " kalman_filters[key].P *= 1000.\n", | |||

| " kalman_filters[key].R = np.eye(2) * 3\n", | |||

| " kalman_filters[key].Q = np.eye(4) * 0.01\n", | |||

| "\n", | |||

| "def kalman_filter_point(hand_label, x, y):\n", | |||

| " kf = kalman_filters[hand_label]\n", | |||

| " kf.predict()\n", | |||

| " kf.update([x, y])\n", | |||

| " # update the state with the new measurement\n", | |||

| " return (kf.x[0], kf.x[1])\n", | |||

| "\n", | |||

| "def reset_kalman_filter(hand_label, x, y):\n", | |||

| " kf = kalman_filters[hand_label]\n", | |||

| " kf.x = np.array([x, y, 0., 0.])\n", | |||

| " kf.P = np.eye(4) * 1000.\n", | |||

| " # reset the state and covariance" | |||

| ] | |||

| }, | |||

| { | |||

| "cell_type": "code", | |||

| "execution_count": 5, | |||

| "id": "edc274b7ed495122", | |||

| "metadata": { | |||

| "ExecuteTime": { | |||

| "end_time": "2024-09-07T05:11:28.887346Z", | |||

| "start_time": "2024-09-07T05:11:28.855831Z" | |||

| } | |||

| }, | |||

| "outputs": [], | |||

| "source": [ | |||

| "\n", | |||

| "mp_hands = mediapipe.solutions.hands\n", | |||

| "\n", | |||

| "hands = mp_hands.Hands(\n", | |||

| " static_image_mode=False,\n", | |||

| " max_num_hands=2,\n", | |||

| " # tracking only 1 hand is more stable\n", | |||

| " min_detection_confidence=0.5,\n", | |||

| " min_tracking_confidence=0.5\n", | |||

| ")\n", | |||

| "\n", | |||

| "mp_drawing = mediapipe.solutions.drawing_utils\n", | |||

| "clear_hand_states()" | |||

| ] | |||

| }, | |||

| { | |||

| "cell_type": "code", | |||

| "execution_count": null, | |||

| "id": "51ff809ecaf1f899", | |||

| "metadata": { | |||

| "ExecuteTime": { | |||

| "end_time": "2024-09-07T05:11:28.934274Z", | |||

| "start_time": "2024-09-07T05:11:28.887346Z" | |||

| } | |||

| }, | |||

| "outputs": [], | |||

| "source": [ | |||

| "def process_image(image):\n", | |||

| "\n", | |||

| " start_time = time.time()\n", | |||

| " height, width = image.shape[:2]\n", | |||

| " image = cv2.flip(image, 1)\n", | |||

| " image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)\n", | |||

| " # preprocess the frame (mirror, BGR -> RGB)\n", | |||

| " \n", | |||

| " results = hands.process(image)\n", | |||

| " \n", | |||

| " if results.multi_hand_landmarks:\n", | |||

| " # if any hand is detected\n", | |||

| " \n", | |||

| " handness_str = ''\n", | |||

| " index_finger_tip_str = ''\n", | |||

| " \n", | |||

| " if len(results.multi_hand_landmarks) == 1:\n", | |||

| " clear_hand_states(detected_hand = results.multi_handedness[0].classification[0].label)\n", | |||

| " # if only one hand is visible, clear the other hand's data so stale state cannot cause conflicts or instability later\n", | |||

| " \n", | |||

| " for hand_idx in range(len(results.multi_hand_landmarks)):\n", | |||

| " \n", | |||

| " hand_21 = results.multi_hand_landmarks[hand_idx]\n", | |||

| " mp_drawing.draw_landmarks(image, hand_21, mp_hands.HAND_CONNECTIONS)\n", | |||

| " \n", | |||

| " temp_handness = results.multi_handedness[hand_idx].classification[0].label\n", | |||

| " handness_str += '{}:{}, '.format(hand_idx, temp_handness)\n", | |||

| " is_index_finger_up[temp_handness] = False\n", | |||

| " # default to False so a lowered finger is not left marked as raised\n", | |||

| " \n", | |||

| " cz0 = hand_21.landmark[0].z\n", | |||

| " index_finger_second[temp_handness] = hand_21.landmark[7]\n", | |||

| " index_finger_tip[temp_handness] = hand_21.landmark[8]\n", | |||

| " # index fingertip (landmark 8) and the joint below it (landmark 7)\n", | |||

| " \n", | |||

| " index_x, index_y = int(index_finger_tip[temp_handness].x * width), int(index_finger_tip[temp_handness].y * height)\n", | |||

| "\n", | |||

| " if all(index_finger_second[temp_handness].y < hand_21.landmark[i].y for i in range(21) if i not in [7, 8]) and index_finger_tip[temp_handness].y < index_finger_second[temp_handness].y:\n", | |||

| " is_index_finger_up[temp_handness] = True\n", | |||

| " # if joint 7 is above every other landmark and the tip (8) is above joint 7, treat it as a 'pointing' gesture\n", | |||

| "\n", | |||

| " if is_index_finger_up[temp_handness]:\n", | |||

| " if not gesture_locked[temp_handness]:\n", | |||

| " if gesture_start_time[temp_handness] == 0:\n", | |||

| " gesture_start_time[temp_handness] = time.time()\n", | |||

| " # record when the index finger was raised\n", | |||

| " elif time.time() - gesture_start_time[temp_handness] > wait_time:\n", | |||

| " dragging[temp_handness] = True\n", | |||

| " gesture_locked[temp_handness] = True\n", | |||

| " drag_point[temp_handness] = (index_x, index_y)\n", | |||

| " # once the finger has stayed raised longer than wait_time, start the 'pointing' (drag) operation\n", | |||

| " buffer_start_time[temp_handness] = 0\n", | |||

| " # any frame with the index finger raised resets the buffer timer\n", | |||

| " else:\n", | |||

| " if buffer_start_time[temp_handness] == 0:\n", | |||

| " buffer_start_time[temp_handness] = time.time()\n", | |||

| " elif time.time() - buffer_start_time[temp_handness] > buffer_duration[temp_handness]:\n", | |||

| " gesture_start_time[temp_handness] = 0\n", | |||

| " gesture_locked[temp_handness] = False\n", | |||

| " dragging[temp_handness] = False\n", | |||

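| " # once the finger has been down longer than the buffer duration, the pointing operation is over\n", | |||

| " # this keeps a single misdetected frame from wrongly cancelling the operation\n", | |||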

| " \n", | |||

| " if dragging[temp_handness]:\n", | |||

| "\n", | |||

| " if start_drag_time[temp_handness] == 0:\n", | |||

| " start_drag_time[temp_handness] = time.time()\n", | |||

| " reset_kalman_filter(temp_handness, index_x, index_y)\n", | |||

| " # re-initialise the Kalman filter at the start of every stroke\n", | |||

| " \n", | |||

| " smooth_x, smooth_y = kalman_filter_point(temp_handness, index_x, index_y)\n", | |||

| " drag_point[temp_handness] = (index_x, index_y)\n", | |||

| " index_finger_radius = max(int(10 * (1 + (cz0 - index_finger_tip[temp_handness].z) * 5)), 0)\n", | |||

| " cv2.circle(image, drag_point[temp_handness], index_finger_radius, (0, 0, 255), -1)\n", | |||

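| " # draw a circle whose size follows the fingertip's depth relative to the wrist\n", | |||

| " # to show that the pointing operation has started;\n", | |||

| " # it uses the same depth scaling as the landmark dots below, just with a larger base radius\n", | |||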

| " drag_point_smooth = (smooth_x, smooth_y)\n", | |||

| " \n", | |||

| " if time.time() - start_drag_time[temp_handness] > kalman_wait_time:\n", | |||

| " trajectory[temp_handness].append(drag_point_smooth)\n", | |||

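| " # the Kalman filter is unstable right after initialisation and the first frames are usually noisy,\n", | |||

| " # so points are only added to the trajectory once kalman_wait_time has passed\n", | |||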

| " else:\n", | |||

| " if len(trajectory[temp_handness]) > 4:\n", | |||

| " contour = np.array(trajectory[temp_handness], dtype=np.int32)\n", | |||

| " rect = cv2.minAreaRect(contour)\n", | |||

| " box = cv2.boxPoints(rect)\n", | |||

| " box = np.int64(box)\n", | |||

| " rect_draw_time[temp_handness] = time.time()\n", | |||

| " last_drawn_box[temp_handness] = box\n", | |||

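| " # when the pointing operation ends and the trajectory has more than four points,\n", | |||

| " # fit the minimum-area (rotated) rectangle around the irregular hand-drawn shape\n", | |||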

| "\n", | |||

| " start_drag_time[temp_handness] = 0\n", | |||

| " trajectory[temp_handness].clear()\n", | |||

| "\n", | |||

| " for i in range(1, len(trajectory[temp_handness])):\n", | |||

| "\n", | |||

| " pt1 = (int(trajectory[temp_handness][i-1][0]), int(trajectory[temp_handness][i-1][1]))\n", | |||

| " pt2 = (int(trajectory[temp_handness][i][0]), int(trajectory[temp_handness][i][1]))\n", | |||

| " cv2.line(image, pt1, pt2, (0, 0, 255), 2)\n", | |||

| " # draw lines connecting the trajectory points\n", | |||

| "\n", | |||

| " if last_drawn_box[temp_handness] is not None:\n", | |||

| " elapsed_time[temp_handness] = time.time() - rect_draw_time[temp_handness]\n", | |||

| " \n", | |||

| " if elapsed_time[temp_handness] < wait_box:\n", | |||

| " cv2.drawContours(image, [last_drawn_box[temp_handness]], 0, (0, 255, 0), 2)\n", | |||

| " # keep the box on screen for a while; a single frame is too brief to see it\n", | |||

| " \n", | |||

| " elif elapsed_time[temp_handness] >= wait_box - 0.1:\n", | |||

| " \n", | |||

| " box = last_drawn_box[temp_handness]\n", | |||

| " x_min = max(0, min(box[:, 0]))\n", | |||

| " y_min = max(0, min(box[:, 1]))\n", | |||

| " x_max = min(image.shape[1], max(box[:, 0]))\n", | |||

| " y_max = min(image.shape[0], max(box[:, 1]))\n", | |||

| " cropped_image = image[y_min:y_max, x_min:x_max]\n", | |||

| " filename = f\"../image/cropped_{temp_handness}_{int(time.time())}.jpg\"\n", | |||

| " cv2.imwrite(filename, cv2.cvtColor(cropped_image, cv2.COLOR_RGB2BGR))\n", | |||

| " last_drawn_box[temp_handness] = None\n", | |||

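| " # do not crop as soon as the box is drawn: the hand may still be inside it\n", | |||

| " # wait so the hand has time to move away, then extract the rectangle from the current frame\n", | |||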

| " \n", | |||

| " for i in range(21):\n", | |||

| " \n", | |||

| " cx = int(hand_21.landmark[i].x * width)\n", | |||

| " cy = int(hand_21.landmark[i].y * height)\n", | |||

| " cz = hand_21.landmark[i].z\n", | |||

| " depth_z = cz0 - cz\n", | |||

| " radius = max(int(6 * (1 + depth_z*5)), 0)\n", | |||

| " \n", | |||

| " if i == 0:\n", | |||

| " image = cv2.circle(image, (cx, cy), radius, (255, 255, 0), thickness=-1)\n", | |||

| " if i == 8:\n", | |||

| " image = cv2.circle(image, (cx, cy), radius, (255, 165, 0), thickness=-1)\n", | |||

| " index_finger_tip_str += '{}:{:.2f}, '.format(hand_idx, depth_z)\n", | |||

| " if i in [1,5,9,13,17]: \n", | |||

| " image = cv2.circle(image, (cx, cy), radius, (0, 0, 255), thickness=-1)\n", | |||

| " if i in [2,6,10,14,18]:\n", | |||

| " image = cv2.circle(image, (cx, cy), radius, (75, 0, 130), thickness=-1)\n", | |||

| " if i in [3,7,11,15,19]:\n", | |||

| " image = cv2.circle(image, (cx, cy), radius, (238, 130, 238), thickness=-1)\n", | |||

| " if i in [4,12,16,20]:\n", | |||

| " image = cv2.circle(image, (cx, cy), radius, (0, 255, 255), thickness=-1)\n", | |||

| " # draw every landmark with its group colour and a radius scaled by depth relative to the wrist\n", | |||

| " \n", | |||

| " scaler = 1\n", | |||

| " image = cv2.putText(image,handness_str, (25*scaler, 100*scaler), cv2.FONT_HERSHEY_SIMPLEX, 1.25*scaler, (0,0,255), 2,)\n", | |||

| " image = cv2.putText(image,index_finger_tip_str, (25*scaler, 150*scaler), cv2.FONT_HERSHEY_SIMPLEX, 1.25*scaler, (0,0,255), 2,)\n", | |||

| "\n", | |||

| " spend_time = time.time() - start_time\n", | |||

| " if spend_time > 0:\n", | |||

| " FPS = 1.0 / spend_time\n", | |||

| " else:\n", | |||

| " FPS = 0\n", | |||

| " \n", | |||

| " image = cv2.putText(image,'FPS '+str(int(FPS)),(25*scaler,50*scaler),cv2.FONT_HERSHEY_SIMPLEX,1.25*scaler,(0,0,255),2,)\n", | |||

| " # show the FPS, the detected hands, and each index fingertip's depth relative to the wrist\n", | |||

| " \n", | |||

| " else:\n", | |||

| " clear_hand_states()\n", | |||

| " # if no hand is detected, clear all state\n", | |||

| " \n", | |||

| " return image" | |||

| ] | |||

| }, | |||

| { | |||

| "cell_type": "code", | |||

| "execution_count": null, | |||

| "id": "b7ce23e80ed36041", | |||

| "metadata": { | |||

| "ExecuteTime": { | |||

| "end_time": "2024-09-07T05:19:32.248575Z", | |||

| "start_time": "2024-09-07T05:11:28.934663Z" | |||

| } | |||

| }, | |||

| "outputs": [ | |||

| { | |||

| "name": "stderr", | |||

| "output_type": "stream", | |||

| "text": [ | |||

| "C:\\Users\\25055\\AppData\\Local\\Temp\\ipykernel_4200\\752492595.py:89: DeprecationWarning: `np.int0` is a deprecated alias for `np.intp`. (Deprecated NumPy 1.24)\n", | |||

| " box = np.int0(box)\n" | |||

| ] | |||

| }, | |||

| { | |||

| "ename": "KeyboardInterrupt", | |||

| "evalue": "", | |||

| "output_type": "error", | |||

| "traceback": [ | |||

| "\u001b[1;31m---------------------------------------------------------------------------\u001b[0m", | |||

| "\u001b[1;31mKeyboardInterrupt\u001b[0m Traceback (most recent call last)", | |||

| "Cell \u001b[1;32mIn[7], line 10\u001b[0m\n\u001b[0;32m 7\u001b[0m \u001b[38;5;28mprint\u001b[39m(\u001b[38;5;124m\"\u001b[39m\u001b[38;5;124mCamera Error\u001b[39m\u001b[38;5;124m\"\u001b[39m)\n\u001b[0;32m 8\u001b[0m \u001b[38;5;28;01mbreak\u001b[39;00m\n\u001b[1;32m---> 10\u001b[0m frame \u001b[38;5;241m=\u001b[39m \u001b[43mprocess_image\u001b[49m\u001b[43m(\u001b[49m\u001b[43mframe\u001b[49m\u001b[43m)\u001b[49m\n\u001b[0;32m 11\u001b[0m cv2\u001b[38;5;241m.\u001b[39mimshow(\u001b[38;5;124m'\u001b[39m\u001b[38;5;124mVideo\u001b[39m\u001b[38;5;124m'\u001b[39m, frame)\n\u001b[0;32m 13\u001b[0m \u001b[38;5;28;01mif\u001b[39;00m cv2\u001b[38;5;241m.\u001b[39mwaitKey(\u001b[38;5;241m1\u001b[39m) \u001b[38;5;241m&\u001b[39m \u001b[38;5;241m0xFF\u001b[39m \u001b[38;5;241m==\u001b[39m \u001b[38;5;28mord\u001b[39m(\u001b[38;5;124m'\u001b[39m\u001b[38;5;124mq\u001b[39m\u001b[38;5;124m'\u001b[39m):\n", | |||

| "Cell \u001b[1;32mIn[6], line 9\u001b[0m, in \u001b[0;36mprocess_image\u001b[1;34m(image)\u001b[0m\n\u001b[0;32m 6\u001b[0m image \u001b[38;5;241m=\u001b[39m cv2\u001b[38;5;241m.\u001b[39mcvtColor(image, cv2\u001b[38;5;241m.\u001b[39mCOLOR_BGR2RGB)\n\u001b[0;32m 7\u001b[0m \u001b[38;5;66;03m# 预处理帧\u001b[39;00m\n\u001b[1;32m----> 9\u001b[0m results \u001b[38;5;241m=\u001b[39m \u001b[43mhands\u001b[49m\u001b[38;5;241;43m.\u001b[39;49m\u001b[43mprocess\u001b[49m\u001b[43m(\u001b[49m\u001b[43mimage\u001b[49m\u001b[43m)\u001b[49m\n\u001b[0;32m 11\u001b[0m \u001b[38;5;28;01mif\u001b[39;00m results\u001b[38;5;241m.\u001b[39mmulti_hand_landmarks:\n\u001b[0;32m 12\u001b[0m \u001b[38;5;66;03m# 如果检测到手\u001b[39;00m\n\u001b[0;32m 14\u001b[0m handness_str \u001b[38;5;241m=\u001b[39m \u001b[38;5;124m'\u001b[39m\u001b[38;5;124m'\u001b[39m\n", | |||

| "File \u001b[1;32md:\\app-install-dict\\Anaconda3\\envs\\software_engineering\\lib\\site-packages\\mediapipe\\python\\solutions\\hands.py:153\u001b[0m, in \u001b[0;36mHands.process\u001b[1;34m(self, image)\u001b[0m\n\u001b[0;32m 132\u001b[0m \u001b[38;5;28;01mdef\u001b[39;00m\u001b[38;5;250m \u001b[39m\u001b[38;5;21mprocess\u001b[39m(\u001b[38;5;28mself\u001b[39m, image: np\u001b[38;5;241m.\u001b[39mndarray) \u001b[38;5;241m-\u001b[39m\u001b[38;5;241m>\u001b[39m NamedTuple:\n\u001b[0;32m 133\u001b[0m \u001b[38;5;250m \u001b[39m\u001b[38;5;124;03m\"\"\"Processes an RGB image and returns the hand landmarks and handedness of each detected hand.\u001b[39;00m\n\u001b[0;32m 134\u001b[0m \n\u001b[0;32m 135\u001b[0m \u001b[38;5;124;03m Args:\u001b[39;00m\n\u001b[1;32m (...)\u001b[0m\n\u001b[0;32m 150\u001b[0m \u001b[38;5;124;03m right hand) of the detected hand.\u001b[39;00m\n\u001b[0;32m 151\u001b[0m \u001b[38;5;124;03m \"\"\"\u001b[39;00m\n\u001b[1;32m--> 153\u001b[0m \u001b[38;5;28;01mreturn\u001b[39;00m \u001b[38;5;28;43msuper\u001b[39;49m\u001b[43m(\u001b[49m\u001b[43m)\u001b[49m\u001b[38;5;241;43m.\u001b[39;49m\u001b[43mprocess\u001b[49m\u001b[43m(\u001b[49m\u001b[43minput_data\u001b[49m\u001b[38;5;241;43m=\u001b[39;49m\u001b[43m{\u001b[49m\u001b[38;5;124;43m'\u001b[39;49m\u001b[38;5;124;43mimage\u001b[39;49m\u001b[38;5;124;43m'\u001b[39;49m\u001b[43m:\u001b[49m\u001b[43m \u001b[49m\u001b[43mimage\u001b[49m\u001b[43m}\u001b[49m\u001b[43m)\u001b[49m\n", | |||

| "File \u001b[1;32md:\\app-install-dict\\Anaconda3\\envs\\software_engineering\\lib\\site-packages\\mediapipe\\python\\solution_base.py:335\u001b[0m, in \u001b[0;36mSolutionBase.process\u001b[1;34m(self, input_data)\u001b[0m\n\u001b[0;32m 329\u001b[0m \u001b[38;5;28;01melse\u001b[39;00m:\n\u001b[0;32m 330\u001b[0m \u001b[38;5;28mself\u001b[39m\u001b[38;5;241m.\u001b[39m_graph\u001b[38;5;241m.\u001b[39madd_packet_to_input_stream(\n\u001b[0;32m 331\u001b[0m stream\u001b[38;5;241m=\u001b[39mstream_name,\n\u001b[0;32m 332\u001b[0m packet\u001b[38;5;241m=\u001b[39m\u001b[38;5;28mself\u001b[39m\u001b[38;5;241m.\u001b[39m_make_packet(input_stream_type,\n\u001b[0;32m 333\u001b[0m data)\u001b[38;5;241m.\u001b[39mat(\u001b[38;5;28mself\u001b[39m\u001b[38;5;241m.\u001b[39m_simulated_timestamp))\n\u001b[1;32m--> 335\u001b[0m \u001b[38;5;28;43mself\u001b[39;49m\u001b[38;5;241;43m.\u001b[39;49m\u001b[43m_graph\u001b[49m\u001b[38;5;241;43m.\u001b[39;49m\u001b[43mwait_until_idle\u001b[49m\u001b[43m(\u001b[49m\u001b[43m)\u001b[49m\n\u001b[0;32m 336\u001b[0m \u001b[38;5;66;03m# Create a NamedTuple object where the field names are mapping to the graph\u001b[39;00m\n\u001b[0;32m 337\u001b[0m \u001b[38;5;66;03m# output stream names.\u001b[39;00m\n\u001b[0;32m 338\u001b[0m solution_outputs \u001b[38;5;241m=\u001b[39m collections\u001b[38;5;241m.\u001b[39mnamedtuple(\n\u001b[0;32m 339\u001b[0m \u001b[38;5;124m'\u001b[39m\u001b[38;5;124mSolutionOutputs\u001b[39m\u001b[38;5;124m'\u001b[39m, \u001b[38;5;28mself\u001b[39m\u001b[38;5;241m.\u001b[39m_output_stream_type_info\u001b[38;5;241m.\u001b[39mkeys())\n", | |||

| "\u001b[1;31mKeyboardInterrupt\u001b[0m: " | |||

| ] | |||

| }, | |||

| { | |||

| "ename": "", | |||

| "evalue": "", | |||

| "output_type": "error", | |||

| "traceback": [ | |||

| "\u001b[1;31mThe Kernel crashed while executing code in the current cell or a previous cell.\n", | |||

| "\u001b[1;31mPlease review the code in the cell(s) to identify a possible cause of the failure.\n", | |||

| "\u001b[1;31mClick <a href='https://aka.ms/vscodeJupyterKernelCrash'>here</a> for more info.\n", | |||

| "\u001b[1;31mView Jupyter <a href='command:jupyter.viewOutput'>log</a> for further details." | |||

| ] | |||

| } | |||

| ], | |||

| "source": [ | |||

| "cap = cv2.VideoCapture(0)\n", | |||

| "\n", | |||

| "while cap.isOpened():\n", | |||

| " success, frame = cap.read()\n", | |||

| " if not success:\n", | |||

| " print(\"Camera Error\")\n", | |||

| " break\n", | |||

| " \n", | |||

| " frame = process_image(frame)\n", | |||

| " cv2.imshow('Video', frame)\n", | |||

| " \n", | |||

| " if cv2.waitKey(1) & 0xFF == ord('q'):\n", | |||

| " break\n", | |||

| " \n", | |||

| "cap.release()\n", | |||

| "cv2.destroyAllWindows() " | |||

| ] | |||

| }, | |||

| { | |||

| "cell_type": "code", | |||

| "execution_count": null, | |||

| "id": "10fca4bc34a944ea", | |||

| "metadata": {}, | |||

| "outputs": [], | |||

| "source": [] | |||

| } | |||

| ], | |||

| "metadata": { | |||

| "kernelspec": { | |||

| "display_name": "software_engineering", | |||

| "language": "python", | |||

| "name": "python3" | |||

| }, | |||

| "language_info": { | |||

| "codemirror_mode": { | |||

| "name": "ipython", | |||

| "version": 3 | |||

| }, | |||

| "file_extension": ".py", | |||

| "mimetype": "text/x-python", | |||

| "name": "python", | |||

| "nbconvert_exporter": "python", | |||

| "pygments_lexer": "ipython3", | |||

| "version": "3.8.20" | |||

| } | |||

| }, | |||

| "nbformat": 4, | |||

| "nbformat_minor": 5 | |||

| } | |||
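The crop in `process_image` (and in `IndexFingerHandler.draw_index_finger_gesture`) takes the axis-aligned bounds of the rotated box, clamps them to the frame, and slices the image. When the clamped region is empty, `cv2.imwrite` receives an empty array. A perfectly vertical or horizontal stroke produces this case because it gives a zero-width rectangle. Below is a minimal NumPy-only sketch of the clamping step. It assumes `box` is the 4×2 corner array returned by `cv2.boxPoints`. `crop_box` is a hypothetical helper, not part of the project: it returns `None` for an empty region so that the caller can skip the write.

```python
import numpy as np


def crop_box(image: np.ndarray, box: np.ndarray):
    """Crop the axis-aligned bounds of a (possibly rotated) 4x2 corner array.

    Hypothetical helper: same clamping as the original code, but returns None
    when the clamped region is empty instead of an empty array.
    """
    height, width = image.shape[:2]
    # Clamp the extreme corner coordinates to the frame.
    x_min = max(0, int(box[:, 0].min()))
    y_min = max(0, int(box[:, 1].min()))
    x_max = min(width, int(box[:, 0].max()))
    y_max = min(height, int(box[:, 1].max()))
    if x_min >= x_max or y_min >= y_max:
        return None  # nothing to save; cv2.imwrite rejects empty images
    return image[y_min:y_max, x_min:x_max]


if __name__ == "__main__":
    frame = np.zeros((480, 640, 3), dtype=np.uint8)
    # A rotated box whose corners partly fall outside the frame.
    box = np.array([[-20, 100], [200, -10], [260, 150], [40, 260]], dtype=np.int64)
    print(crop_box(frame, box).shape)  # (260, 260, 3)
```

The caller then writes the file only when the result is not `None`.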

+ 56

- 0

utils/hand_gesture.py

| @ -0,0 +1,56 @@ | |||

| import cv2 | |||

| from model import HandTracker | |||

| from index_finger import IndexFingerHandler | |||

| from gesture_data import HandState | |||

| from kalman_filter import KalmanHandler | |||

| from finger_drawer import FingerDrawer | |||

| class HandGestureHandler: | |||

| def __init__(self): | |||

| self.hand_state = HandState() | |||

| self.kalman_handler = KalmanHandler() | |||

| self.hand_tracker = HandTracker() | |||

| self.index_handler = IndexFingerHandler(self.hand_state, self.kalman_handler) | |||

| def handle_hand_gestures(self, image, width, height, is_video): | |||

| results = self.hand_tracker.process(image) | |||

| if results.multi_hand_landmarks: | |||

| handness_str = '' | |||

| index_finger_tip_str = '' | |||

| if len(results.multi_hand_landmarks) == 1: | |||

| detected_hand = results.multi_handedness[0].classification[0].label | |||

| self.hand_state.clear_hand_states(detected_hand) | |||

| # If only one hand is detected, clear the other hand's state so its stale data cannot conflict when a second hand appears | |||

| for hand_idx, hand_21 in enumerate(results.multi_hand_landmarks): | |||

| self.hand_tracker.mp_drawing.draw_landmarks( | |||

| image, hand_21, self.hand_tracker.mp_hands.HAND_CONNECTIONS | |||

| ) | |||

| # Draw the hand landmarks and their connections | |||

| temp_handness = results.multi_handedness[hand_idx].classification[0].label | |||

| handness_str += f'{hand_idx}:{temp_handness}, ' | |||

| self.hand_state.is_index_finger_up[temp_handness] = False | |||

| image = self.index_handler.handle_index_finger( | |||

| image, hand_21, temp_handness, width, height | |||

| ) | |||

| # Handle the index-finger pointing gesture | |||

| image, index_finger_tip_str = FingerDrawer.draw_finger_points(image, hand_21, temp_handness, width, height) | |||

| if is_video: | |||

| image = cv2.flip(image, 1) | |||

| image = cv2.putText(image, handness_str, (25, 100), cv2.FONT_HERSHEY_SIMPLEX, 1.25, (0, 0, 255), 2) | |||

| image = cv2.putText(image, index_finger_tip_str, (25, 150), cv2.FONT_HERSHEY_SIMPLEX, 1.25, (0, 0, 255), 2) | |||

| else: | |||

| if is_video: | |||

| image = cv2.flip(image, 1) | |||

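| # For rear-camera video input, the image must be flipped before processing so that left/right handedness is detected correctly; | |||

| # it is flipped back after processing so that the final output image is not mirrored. | |||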

| self.hand_state.clear_hand_states() | |||

| # If no hand is detected, clear the hand state | |||

| return image | |||
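`handle_hand_gestures` delegates state clearing to `HandState.clear_hand_states(detected_hand)`. That method lives in `gesture_data.py`, which is not part of this diff. The notebook version of `clear_hand_states` suggests the intended behaviour: reset every hand except the detected one, or reset both when no label is given. Below is a minimal sketch of that behaviour. It assumes the same per-hand dictionary layout as the notebook's globals. `HandStateSketch` and its reduced field set are hypothetical.

```python
from dataclasses import dataclass, field

HANDS = ('Left', 'Right')


def _per_hand(value_factory):
    # A fresh {'Left': ..., 'Right': ...} dict per instance, one value per hand.
    return field(default_factory=lambda: {h: value_factory() for h in HANDS})


@dataclass
class HandStateSketch:
    gesture_locked: dict = _per_hand(lambda: False)
    gesture_start_time: dict = _per_hand(lambda: 0.0)
    dragging: dict = _per_hand(lambda: False)
    trajectory: dict = _per_hand(list)

    def clear_hand_states(self, detected_hand: str = 'Both') -> None:
        # 'Both' resets everything; otherwise keep the detected hand's data
        # and reset only the other hand.
        if detected_hand == 'Both':
            to_clear = set(HANDS)
        else:
            to_clear = set(HANDS) - {detected_hand}
        for h in to_clear:
            self.gesture_locked[h] = False
            self.gesture_start_time[h] = 0.0
            self.dragging[h] = False
            self.trajectory[h].clear()
```

Passing the detected label (`'Left'` or `'Right'`) leaves that hand's in-progress stroke intact. Calling the method with no argument resets both hands, which matches the no-hand branch.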

+ 112

- 0

utils/index_finger.py

| @ -0,0 +1,112 @@ | |||

| import cv2 | |||

| import time | |||

| import numpy as np | |||

| class IndexFingerHandler: | |||

| def __init__(self, hand_state, kalman_handler): | |||

| self.hand_state = hand_state | |||

| self.kalman_handler = kalman_handler | |||

| self.wait_time = 1.5 | |||

| self.kalman_wait_time = 0.5 | |||

| self.wait_box = 2 | |||

| def handle_index_finger(self, image, hand_21, temp_handness, width, height): | |||

| cz0 = hand_21.landmark[0].z | |||

| self.hand_state.index_finger_second[temp_handness] = hand_21.landmark[7] | |||

| self.hand_state.index_finger_tip[temp_handness] = hand_21.landmark[8] | |||

| index_x = int(self.hand_state.index_finger_tip[temp_handness].x * width) | |||

| index_y = int(self.hand_state.index_finger_tip[temp_handness].y * height) | |||

| self.update_index_finger_state(hand_21, temp_handness, index_x, index_y) | |||

| self.draw_index_finger_gesture(image, temp_handness, index_x, index_y, cz0) | |||

| return image | |||

| # Update the index-finger state, draw its gesture effects, and return the updated image | |||

| def update_index_finger_state(self, hand_21, temp_handness, index_x, index_y): | |||

| if all(self.hand_state.index_finger_second[temp_handness].y < hand_21.landmark[i].y | |||

| for i in range(21) if i not in [7, 8]) and \ | |||

| self.hand_state.index_finger_tip[temp_handness].y < self.hand_state.index_finger_second[temp_handness].y: | |||

| self.hand_state.is_index_finger_up[temp_handness] = True | |||

| # If the index fingertip (8) and the joint below it (7) are both above every other landmark, the index finger counts as raised | |||

| if self.hand_state.is_index_finger_up[temp_handness]: | |||

| if not self.hand_state.gesture_locked[temp_handness]: | |||

| if self.hand_state.gesture_start_time[temp_handness] == 0: | |||

| self.hand_state.gesture_start_time[temp_handness] = time.time() | |||

| elif time.time() - self.hand_state.gesture_start_time[temp_handness] > self.wait_time: | |||

| self.hand_state.dragging[temp_handness] = True | |||

| self.hand_state.gesture_locked[temp_handness] = True | |||

| self.hand_state.drag_point[temp_handness] = (index_x, index_y) | |||

| # Once the finger has stayed raised longer than wait_time, the pointing operation officially starts | |||

| self.hand_state.buffer_start_time[temp_handness] = 0 | |||

| # Reset the buffer timer, the grace period that keeps a misdetection from abruptly ending the pointing operation | |||

| else: | |||

| if self.hand_state.buffer_start_time[temp_handness] == 0: | |||

| self.hand_state.buffer_start_time[temp_handness] = time.time() | |||

| elif time.time() - self.hand_state.buffer_start_time[temp_handness] > self.hand_state.buffer_duration[temp_handness]: | |||

| self.hand_state.gesture_start_time[temp_handness] = 0 | |||

| self.hand_state.gesture_locked[temp_handness] = False | |||

| self.hand_state.dragging[temp_handness] = False | |||

| # Once the finger has been down longer than the buffer duration, the pointing operation ends | |||

| def draw_index_finger_gesture(self, image, temp_handness, index_x, index_y, cz0): | |||

| if self.hand_state.dragging[temp_handness]: | |||

| if self.hand_state.start_drag_time[temp_handness] == 0: | |||

| self.hand_state.start_drag_time[temp_handness] = time.time() | |||

| self.kalman_handler.reset_kalman_filter(temp_handness, index_x, index_y) | |||

| # On the first frame of a drag, record the start time and reset the Kalman filter | |||

| smooth_x, smooth_y = self.kalman_handler.kalman_filter_point(temp_handness, index_x, index_y) | |||

| # Smooth the trajectory with the Kalman filter to reduce noise and jitter | |||

| self.hand_state.drag_point[temp_handness] = (index_x, index_y) | |||

| index_finger_radius = max(int(10 * (1 + (cz0 - self.hand_state.index_finger_tip[temp_handness].z) * 5)), 0) | |||

| cv2.circle(image, self.hand_state.drag_point[temp_handness], index_finger_radius, (0, 0, 255), -1) | |||

| # Scale the circle with the fingertip's depth relative to the wrist, a bit larger than FingerDrawer's dots so a locked pointing gesture is easy to see | |||

| drag_point_smooth = (smooth_x, smooth_y) | |||

| if time.time() - self.hand_state.start_drag_time[temp_handness] > self.kalman_wait_time: | |||

| self.hand_state.trajectory[temp_handness].append(drag_point_smooth) | |||

| # The filter needs time to settle after initialisation, so points are only added to the trajectory once it has stabilised | |||

| else: | |||

| if len(self.hand_state.trajectory[temp_handness]) > 4: | |||

| contour = np.array(self.hand_state.trajectory[temp_handness], dtype=np.int32) | |||

| rect = cv2.minAreaRect(contour) | |||

| box = cv2.boxPoints(rect) | |||

| box = np.int64(box) | |||

| # With more than four drag points, compute the minimum-area bounding rectangle | |||

| self.hand_state.rect_draw_time[temp_handness] = time.time() | |||

| self.hand_state.last_drawn_box[temp_handness] = box | |||

| self.hand_state.start_drag_time[temp_handness] = 0 | |||

| self.hand_state.trajectory[temp_handness].clear() | |||

| # Reset the drag timer and clear the trajectory | |||

| for i in range(1, len(self.hand_state.trajectory[temp_handness])): | |||

| pt1 = (int(self.hand_state.trajectory[temp_handness][i-1][0]), int(self.hand_state.trajectory[temp_handness][i-1][1])) | |||

| pt2 = (int(self.hand_state.trajectory[temp_handness][i][0]), int(self.hand_state.trajectory[temp_handness][i][1])) | |||

| cv2.line(image, pt1, pt2, (0, 0, 255), 2) | |||

| # Draw the drag path | |||

| if self.hand_state.last_drawn_box[temp_handness] is not None: | |||

| elapsed_time = time.time() - self.hand_state.rect_draw_time[temp_handness] | |||

| if elapsed_time < self.wait_box: | |||

| cv2.drawContours(image, [self.hand_state.last_drawn_box[temp_handness]], 0, (0, 255, 0), 2) | |||

| # Keep the bounding box on screen for a while so it is easy to see | |||

| elif elapsed_time >= self.wait_box - 0.1: | |||

| box = self.hand_state.last_drawn_box[temp_handness] | |||

| x_min = max(0, min(box[:, 0])) | |||

| y_min = max(0, min(box[:, 1])) | |||

| x_max = min(image.shape[1], max(box[:, 0])) | |||

| y_max = min(image.shape[0], max(box[:, 1])) | |||

| cropped_image = image[y_min:y_max, x_min:x_max] | |||

| filename = f"../image/cropped_{temp_handness}_{int(time.time())}.jpg" | |||

| cv2.imwrite(filename, cv2.cvtColor(cropped_image, cv2.COLOR_RGB2BGR)) | |||

| self.hand_state.last_drawn_box[temp_handness] = None | |||

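| # Cropping right after the box is drawn would likely capture the hand as well, | |||

| # so the crop is deferred until the box's display time (wait_box) has elapsed, giving the hand time to move away | |||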
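`update_index_finger_state` combines two ideas:

- A geometric test. The tip (landmark 8) must be above joint 7, and joint 7 must be above every other landmark. Image `y` grows downward, so "above" means a smaller `y`.
- A two-timer debounce. The gesture must be held for `wait_time` before dragging starts, and it must be absent for `buffer_duration` before dragging stops.

Below is a MediaPipe-free sketch of both, assuming that landmarks arrive as a list of 21 normalised `y` values and that the current time is passed in explicitly. `is_index_finger_up` and `PointingDebouncer` are hypothetical names, not the project's classes.

```python
def is_index_finger_up(ys):
    """ys: 21 normalised landmark y-values (smaller means higher in the image)."""
    tip, joint = ys[8], ys[7]
    others = (y for i, y in enumerate(ys) if i not in (7, 8))
    return all(joint < y for y in others) and tip < joint


class PointingDebouncer:
    def __init__(self, wait_time=1.5, buffer_duration=0.25):
        self.wait_time = wait_time              # hold time before dragging starts
        self.buffer_duration = buffer_duration  # grace period before dragging stops
        self.gesture_start = 0.0                # 0 means "not started", as in the original
        self.buffer_start = 0.0
        self.locked = False
        self.dragging = False

    def update(self, finger_up, now):
        if finger_up:
            if not self.locked:
                if self.gesture_start == 0:
                    self.gesture_start = now
                elif now - self.gesture_start > self.wait_time:
                    self.dragging = self.locked = True
            self.buffer_start = 0.0  # any raised frame resets the grace timer
        else:
            if self.buffer_start == 0:
                self.buffer_start = now
            elif now - self.buffer_start > self.buffer_duration:
                self.gesture_start = 0.0
                self.locked = self.dragging = False
        return self.dragging
```

In the handler, `finger_up` would come from the landmark test and `now` from `time.time()`. Passing time in explicitly makes the state machine easy to test. As in the original, `0` is the "not started" sentinel, so timestamps must be positive.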

+ 36

- 0

utils/kalman_filter.py

| @ -0,0 +1,36 @@ | |||

| import numpy as np | |||

| from filterpy.kalman import KalmanFilter | |||

| class KalmanHandler: | |||

| def __init__(self): | |||

| self.kalman_filters = { | |||

| 'Left': KalmanFilter(dim_x=4, dim_z=2), | |||

| 'Right': KalmanFilter(dim_x=4, dim_z=2) | |||

| } | |||

| for key in self.kalman_filters: | |||

| self.kalman_filters[key].x = np.array([0., 0., 0., 0.]) | |||

| self.kalman_filters[key].F = np.array([[1, 0, 1, 0], | |||

| [0, 1, 0, 1], | |||

| [0, 0, 1, 0], | |||

| [0, 0, 0, 1]]) | |||

| self.kalman_filters[key].H = np.array([[1, 0, 0, 0], | |||

| [0, 1, 0, 0]]) | |||

| self.kalman_filters[key].P *= 1000. | |||

| self.kalman_filters[key].R = np.eye(2) * 3 | |||

| self.kalman_filters[key].Q = np.eye(4) * 0.01 | |||

| # These parameters were tuned over several test runs and gave fairly stable results | |||

| def kalman_filter_point(self, hand_label, x, y): | |||

| kf = self.kalman_filters[hand_label] | |||

| kf.predict() | |||

| kf.update([x, y]) | |||

| # Update the state with the new measurement | |||

| return (kf.x[0], kf.x[1]) | |||

| def reset_kalman_filter(self, hand_label, x, y): | |||

| kf = self.kalman_filters[hand_label] | |||

| kf.x = np.array([x, y, 0., 0.]) | |||

| kf.P = np.eye(4) * 1000. | |||

| # Reset the state and covariance | |||
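`KalmanHandler` uses a constant-velocity model with state `[x, y, vx, vy]`:

- `F` advances the position by one velocity step per frame.
- `H` observes the position only.

Below is a dependency-free NumPy sketch of the same predict/update cycle, for reading or testing without `filterpy`. It reuses the `Q`, `R` and `P` magnitudes above. It writes `R` as an explicit 2×2 diagonal matrix (independent x/y measurement noise) and resets `P` to a fresh `1000·I`. `CVKalman` is a hypothetical name, not a filterpy class.

```python
import numpy as np


class CVKalman:
    """Constant-velocity Kalman filter for a 2-D point (one frame = one time step)."""

    def __init__(self, q=0.01, r=3.0, p0=1000.0):
        self.F = np.array([[1., 0., 1., 0.],
                           [0., 1., 0., 1.],
                           [0., 0., 1., 0.],
                           [0., 0., 0., 1.]])      # x += vx, y += vy
        self.H = np.array([[1., 0., 0., 0.],
                           [0., 1., 0., 0.]])      # only position is measured
        self.Q = np.eye(4) * q                     # process noise
        self.R = np.eye(2) * r                     # independent x/y measurement noise
        self.p0 = p0
        self.reset(0.0, 0.0)

    def reset(self, x, y):
        self.x = np.array([x, y, 0., 0.])
        self.P = np.eye(4) * self.p0               # fresh, large initial uncertainty

    def step(self, zx, zy):
        # Predict
        self.x = self.F @ self.x
        self.P = self.F @ self.P @ self.F.T + self.Q
        # Update
        innovation = np.array([zx, zy]) - self.H @ self.x
        S = self.H @ self.P @ self.H.T + self.R
        K = self.P @ self.H.T @ np.linalg.inv(S)   # Kalman gain
        self.x = self.x + K @ innovation
        self.P = (np.eye(4) - K @ self.H) @ self.P
        return self.x[0], self.x[1]
```

Call `reset` when a drag starts, as `draw_index_finger_gesture` does, and call `step` once per frame. The large initial `P` lets the first few measurements dominate. This is also why those early estimates are noisy on real, jittery input.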

+ 17

- 0

utils/model.py

| @ -0,0 +1,17 @@ | |||

| import mediapipe as mp | |||

| class HandTracker: | |||

| def __init__(self): | |||

| self.mp_hands = mp.solutions.hands | |||

| self.hands = self.mp_hands.Hands( | |||

| static_image_mode=False, | |||

| max_num_hands=1, | |||

| # Tracking a single hand is more stable | |||

| min_detection_confidence=0.5, | |||

| min_tracking_confidence=0.5 | |||

| ) | |||

| self.mp_drawing = mp.solutions.drawing_utils | |||

| def process(self, image): | |||

| results = self.hands.process(image) | |||

| return results | |||
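`HandTracker` wraps MediaPipe Hands, which reports 21 landmarks per hand:

- Index 0 is the wrist.
- Each finger then takes four consecutive indices from base joint to tip: thumb 1-4, index 5-8, middle 9-12, ring 13-16, pinky 17-20.

The notebook's drawing loop relies on this layout when it colours joints in groups such as `[1,5,9,13,17]` and `[4,12,16,20]`. The pointing test relies on 7 and 8 being the index finger's top joint and tip. Below is a small sketch that derives finger and tier from an index. `finger_and_tier` is a hypothetical helper, not part of MediaPipe.

```python
FINGERS = ('thumb', 'index', 'middle', 'ring', 'pinky')


def finger_and_tier(i):
    """Map a MediaPipe hand landmark index (0-20) to (finger, tier).

    Tier 0 is the joint nearest the palm and tier 3 is the fingertip;
    the wrist (index 0) has no tier.
    """
    if not 0 <= i <= 20:
        raise ValueError(f"landmark index out of range: {i}")
    if i == 0:
        return 'wrist', None
    return FINGERS[(i - 1) // 4], (i - 1) % 4
```

For example, `[i for i in range(21) if finger_and_tier(i)[1] == 3]` recovers the fingertip indices `[4, 8, 12, 16, 20]`.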

+ 24

- 0

utils/process_images.py

| @ -0,0 +1,24 @@ | |||

| import cv2 | |||

| import time | |||

| from hand_gesture import HandGestureHandler | |||

| class HandGestureProcessor: | |||

| def __init__(self): | |||

| self.hand_handler = HandGestureHandler() | |||

| def process_image(self, image, is_video): | |||

| start_time = time.time() | |||

| height, width = image.shape[:2] | |||

| image = cv2.flip(image, 1) | |||

| image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB) | |||

| # Preprocess the incoming video frame (mirror, BGR -> RGB) | |||

| image = self.hand_handler.handle_hand_gestures(image, width, height, is_video) | |||

| spend_time = time.time() - start_time | |||

| FPS = 1.0 / spend_time if spend_time > 0 else 0 | |||

| image = cv2.putText(image, f'FPS {int(FPS)}', (25, 50), cv2.FONT_HERSHEY_SIMPLEX, 1.25, (0, 0, 255), 2) | |||

| # Compute and display the frame rate (1 / per-frame processing time) | |||

| return image | |||

+ 65

- 0

utils/video_recognition.py

| @ -0,0 +1,65 @@ | |||

| import cv2 | |||

| from process_images import HandGestureProcessor | |||

| from tkinter import messagebox | |||

| from PIL import Image, ImageTk | |||

| def start_camera(canvas): | |||

| cap = cv2.VideoCapture(0) | |||

| if not cap.isOpened(): | |||

| return "Cannot open camera" | |||

| gesture_processor = HandGestureProcessor() | |||

| show_frame(canvas, cap, gesture_processor) | |||

| def show_frame(canvas, cap, gesture_processor): | |||

| success, frame = cap.read() | |||

| if success: | |||

| processed_frame = gesture_processor.process_image(frame,False) | |||

| img = cv2.cvtColor(processed_frame, cv2.COLOR_BGR2RGB) | |||

| img = Image.fromarray(img) | |||

| imgtk = ImageTk.PhotoImage(image=img) | |||

| canvas.imgtk = imgtk | |||

| canvas.create_image(0, 0, anchor="nw", image=imgtk) | |||

| # Process the frame, convert it to RGB, and show it on the canvas | |||

| canvas.after(10, show_frame, canvas, cap, gesture_processor) | |||

| # Schedule the next call so every subsequent frame keeps being processed and displayed | |||

| else: | |||

| cap.release() | |||

| cv2.destroyAllWindows() | |||

| def upload_and_process_video(canvas, video_path): | |||

| cap = cv2.VideoCapture(video_path) | |||

| if not cap.isOpened(): | |||

| return "Cannot open video file" | |||

| frame_width = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH)) | |||

| frame_height = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT)) | |||

| fps = cap.get(cv2.CAP_PROP_FPS) | |||

| # Read the video's parameters | |||

| output_filename = "../video/processed_output.avi" | |||

| fourcc = cv2.VideoWriter_fourcc(*'XVID') | |||

| out = cv2.VideoWriter(output_filename, fourcc, fps, (frame_width, frame_height)) | |||

| # Set the output file path and codec | |||

| gesture_processor = HandGestureProcessor() | |||

| process_video_frame(canvas, cap, gesture_processor, out) | |||

| def process_video_frame(canvas, cap, gesture_processor, out): | |||

| success, frame = cap.read() | |||

| if success: | |||

| processed_frame = gesture_processor.process_image(frame,True) | |||

| out.write(processed_frame) | |||

| img = cv2.cvtColor(processed_frame, cv2.COLOR_BGR2RGB) | |||

| img = Image.fromarray(img) | |||

| imgtk = ImageTk.PhotoImage(image=img) | |||

| canvas.imgtk = imgtk | |||

| canvas.create_image(0, 0, anchor="nw", image=imgtk) | |||

| canvas.after(10, process_video_frame, canvas, cap, gesture_processor, out) | |||

| else: | |||

| cap.release() | |||

| out.release() | |||

| cv2.destroyAllWindows() | |||

| messagebox.showinfo("Info", "Processed video saved as processed_output.avi") | |||

| print("Processed video saved as processed_output.avi") | |||
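OpenCV's `VideoWriter` only produces a playable file when the four-character codec code matches the container implied by the file extension. A small helper can derive the code from the output path so the two cannot drift apart. This is a sketch. `fourcc_for` and `FOURCC_BY_EXTENSION` are hypothetical names. The pairings listed (`XVID` for `.avi`, `mp4v` for `.mp4`) are common OpenCV choices, but whether they work still depends on the codecs installed on the machine.

```python
import os

# Hypothetical table of codec/container pairings commonly used with OpenCV's VideoWriter.
FOURCC_BY_EXTENSION = {".avi": "XVID", ".mp4": "mp4v"}


def fourcc_for(path: str) -> str:
    """Return the four-character codec code to use for the extension of `path`."""
    ext = os.path.splitext(path)[1].lower()
    try:
        return FOURCC_BY_EXTENSION[ext]
    except KeyError:
        raise ValueError(f"no codec configured for extension {ext!r}") from None
```

The returned string would be unpacked into OpenCV as `cv2.VideoWriter_fourcc(*fourcc_for(output_filename))`.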

+0 -306 项目测试文档.md (project test document)


@@ -1,306 +0,0 @@

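# 📋 WaveControl Mid-Air Gesture Control System - Test Document

## 1. Test Overview

### 1.1 Test Objectives

Verify that the system's three core submodules (the main control platform, the WaveSign sign-language platform, and the game control module) are functionally complete, interact stably, and perform reliably. Together they must meet the needs of contactless human-computer interaction across multiple scenarios.

### 1.2 Test Scope

- Main control system (control panel + gesture library management + speech recognition)
- WaveSign sign-language system (teaching, scoring, community)
- Virtual racing gamepad system (in-game control response)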

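### 1.3 Test Types

| Test type | Description |
| ----------------------- | ------------------------------------------------------------------------- |
| Functional testing | Whether each module completes its core functions |
| Integration testing | Whether data flow and interaction between submodules are correct |
| Performance testing | Whether the system runs stably and responds promptly at high frame rates |
| Boundary testing | Abnormal conditions such as ambiguous gestures, network loss, and camera disconnection |
| User experience testing | Whether ordinary users can operate it smoothly, learn it quickly, and get clear feedback |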

## 2. Test Environment

| Item | Configuration |
| ---------------- | --------------------------------------------- |
| Operating system | Windows 10 / 11, macOS |
| Python version | Python 3.8+ |
| Browser | Chrome / Edge |
| Capture device | External USB camera / built-in laptop camera |
| Game platform | *Rush Rally Origins* on Steam |
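The Python and operating-system rows of the table can be checked automatically before a test run. The following is a minimal sketch that assumes the supported set is exactly what the table lists. `check_environment` is a hypothetical helper, and it does not check camera, browser, or game availability.

```python
import platform
import sys
from typing import List

# platform.system() reports "Windows" on Windows and "Darwin" on macOS.
SUPPORTED_SYSTEMS = ("Windows", "Darwin")


def check_environment() -> List[str]:
    """Return human-readable problems; an empty list means the basics match the table."""
    problems: List[str] = []
    if sys.version_info < (3, 8):
        problems.append(f"Python 3.8+ required, found {platform.python_version()}")
    system = platform.system()
    if system not in SUPPORTED_SYSTEMS:
        problems.append(f"OS not listed in the test environment: {system}")
    return problems


if __name__ == "__main__":
    for problem in check_environment():
        print("WARNING:", problem)
```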

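## 3. Test Case Design

### ✅ Main Control System

| No. | Gesture name | Gesture description | Category |
| ---- | ------------------------ | ------------------------------------------------------------------------------------- | --------------- |
| 01 | Cursor control | Raise the index finger and move it to control the cursor position | General control |
| 02 | Left mouse click | Raise the index finger and thumb to click | General control |
| 03 | Scroll control | "OK" gesture (index finger and thumb pinched together); move up or down to scroll the page | General control |
| 04 | Fullscreen control | Four fingers held together pointing up → presses the configured key (default `f`) | General control |
| 05 | Backspace | A dedicated gesture presses Backspace | General control |
| 06 | Start speech recognition | "Six" hand sign starts speech recognition | General control |
| 07 | Stop speech recognition | Fist stops speech recognition | General control |
| 08 | Pause/resume | Hold an open hand for 1.5 s to pause or resume recognition | General control |
| 09 | Move right | Thumb raised, other fingers curled → moves the game character right | Game control |
| 10 | Jump | Index and middle fingers raised → jump | Game control |
| 11 | Right jump | Thumb, index, and middle fingers raised → jump to the right | Game control |
| 12 | Previous track | Swing the thumb to the left → previous track | Music control |
| 13 | Next track | Swing the thumb to the right → next track | Music control |
| 14 | Pause/play | V sign (✌️) → pause or play music | Music control |
| 15 | Switch music mode | Rock gesture (🤘) → toggle between music mode and normal control mode | Mode switch |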
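Case 08 depends on a hold timer. The pause/resume toggle should fire once after the open hand has been held for 1.5 s, and should not fire again until the hand is lowered and raised. The sketch below is one way to express that. It assumes the recognizer emits one gesture label per frame together with a timestamp in seconds. `HoldTrigger` and the label `"open_palm"` are hypothetical names, not taken from the project.

```python
from typing import Optional


class HoldTrigger:
    """Fire once when `gesture` has been detected continuously for `hold_seconds`."""

    def __init__(self, gesture: str, hold_seconds: float = 1.5) -> None:
        self.gesture = gesture
        self.hold_seconds = hold_seconds
        self._since: Optional[float] = None  # timestamp at which the current hold began
        self._fired = False  # blocks repeated toggles while the hand stays open

    def update(self, detected: Optional[str], now: float) -> bool:
        """Feed one frame's gesture label; return True on the frame the hold completes."""
        if detected != self.gesture:
            # Any other gesture (or no hand) resets the hold.
            self._since = None
            self._fired = False
            return False
        if self._since is None:
            self._since = now
        if not self._fired and now - self._since >= self.hold_seconds:
            self._fired = True
            return True
        return False
```

Because `now` is passed in explicitly, test case 08 can be replayed with synthetic timestamps instead of waiting 1.5 s in real time.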

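### 🤟 WaveSign (Sign-Language Platform)

#### ✅ 1. **SLClassroom (Sign-Language Classroom) Module**

| Case ID | Case name | Test point | Expected result |
| -------- | --------------------------- | ------------------------------------------------------------ | --------------------------------------------------------- |
| TC-SL-01 | Real-time camera recognition | Whether gestures are recognized once the camera is connected | Returns the recognized sign plus a real-time scoring animation |
| TC-SL-02 | Video upload scoring | Whether an uploaded sign-language video is scored correctly | Returns a score and suggestions based on the standard form |
| TC-SL-03 | Video course learning flow | Whether videos play in order and can be marked as learned | Videos play normally; the next course unlocks |
| TC-SL-04 | Flip-card practice | Whether the card flips and shows the correct answer | After a click, the back shows the preset explanation |
| TC-SL-05 | Quest-style course map navigation | Whether clicking a course node navigates correctly | Opens the corresponding course page |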
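TC-SL-03 expects a lesson to unlock only after the previous lesson is marked as learned. The sketch below expresses that rule as a plain data structure. It assumes a strictly linear course path, although the real course map may branch. `CourseProgress` and the lesson IDs are hypothetical.

```python
from typing import List, Set


class CourseProgress:
    """Linear course path: lesson i+1 unlocks only after lesson i is marked as learned."""

    def __init__(self, lesson_ids: List[str]) -> None:
        self.lesson_ids = list(lesson_ids)
        self.learned: Set[str] = set()

    def is_unlocked(self, lesson_id: str) -> bool:
        idx = self.lesson_ids.index(lesson_id)  # raises ValueError for an unknown lesson
        return idx == 0 or self.lesson_ids[idx - 1] in self.learned

    def mark_learned(self, lesson_id: str) -> None:
        if not self.is_unlocked(lesson_id):
            raise PermissionError(f"{lesson_id} is still locked")
        self.learned.add(lesson_id)
```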

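#### ✅ 2. **Community Module**

| Case ID | Case name | Test point | Expected result |
| --------- | ------------------- | --------------------------------------------------------------- | --------------------------------------------------- |
| TC-COM-01 | Posting | Create a post with text, images, or video | Post is displayed with a unique ID |
| TC-COM-02 | Comments | Comment under a post, then delete the comment | Comments display and delete correctly |
| TC-COM-03 | Likes | Whether the like count changes after liking | Count increases by 1; liking again cancels the like |
| TC-COM-04 | Trending topics | Whether posts with high interaction appear in the trending section | The post appears in the trending section |
| TC-COM-05 | Content moderation | Whether sensitive words are blocked or flagged | A "content violates guidelines" prompt is shown |
| TC-COM-06 | Tag recommendations | Whether selecting a topic recommends related content | Recommendations are relevant and load promptly |
| TC-COM-07 | Follow/unfollow | Whether following establishes the follow relationship | The activity feed updates accordingly |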
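TC-COM-03 expects a second like from the same user to cancel the first. Storing the set of users who liked a post, rather than a bare counter, keeps that behavior and the displayed count consistent by construction. The sketch below uses hypothetical names (`Post`, `toggle_like`), because this document does not show the platform's actual data model.

```python
from typing import Set


class Post:
    def __init__(self, post_id: int) -> None:
        self.post_id = post_id
        self.liked_by: Set[int] = set()  # user IDs that currently like this post

    @property
    def likes(self) -> int:
        return len(self.liked_by)

    def toggle_like(self, user_id: int) -> bool:
        """Like the post, or cancel the like if this user already liked it.

        Returns True if the post is liked by the user after the call.
        """
        if user_id in self.liked_by:
            self.liked_by.remove(user_id)
            return False
        self.liked_by.add(user_id)
        return True
```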

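#### ✅ 3. **Schedule (Calendar and Task) Module**

| Case ID | Case name | Test point | Expected result |
| --------- | -------------------------------- | ------------------------------------------------- | ------------------------------------------------------ |
| TC-SCH-01 | Add a task list | Whether a date and priority can be set | The task appears in the list; its status can be checked off |
| TC-SCH-02 | Event reminder trigger | Whether a reminder notifies on time | A pop-up or ringtone alert appears at the set time |
| TC-SCH-03 | Day/week/month view switching | Whether the calendar views switch without errors | Each view displays correctly |
| TC-SCH-04 | View update after task deletion | Whether deletion is reflected everywhere | The task is cleared from both the calendar and the list |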
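TC-SCH-02 is easier to automate when the reminder check receives the current time as a parameter instead of reading the system clock, because a test can then jump straight to the due time. The following is a minimal sketch. `Reminder` and `pop_due` are hypothetical names, and the periodic polling and the pop-up itself are out of scope.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List


@dataclass
class Reminder:
    title: str
    remind_at: datetime
    fired: bool = False


def pop_due(reminders: List[Reminder], now: datetime) -> List[Reminder]:
    """Return reminders whose time has arrived and mark them fired so each notifies once."""
    due = [r for r in reminders if not r.fired and r.remind_at <= now]
    for r in due:
        r.fired = True
    return due
```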

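#### ✅ 4. **LifeServing (Life Services) Module**

| Case ID | Case name | Test point | Expected result |
| -------- | --------------------------------- | ---------------------------------------------------------------------------- | ------------------------------------------------------ |
| TC-LS-01 | Published content management | Publish product recommendations, events, and similar information | Content displays correctly |
| TC-LS-02 | Assistive device recommendations | Whether the list is clearly categorized and loads correctly | Categories are shown and images load |
| TC-LS-03 | Learning device recommendations | Display the list of hardware learning tools | Text and images load completely |
| TC-LS-04 | Job information push | Whether job and company information is displayed completely | Includes job title, description, and contact details |
| TC-LS-05 | Disability-friendly employer badge | Whether such employers are marked with a "V" badge or tag | A "friendly employer" label is shown |
| TC-LS-06 | Accessible route planning | Whether step-free or elevator routes can be drawn | The route on the map is reasonable |
| TC-LS-07 | Real-time bus alerts | Whether routes load correctly and update promptly | Live bus arrival times are shown |
| TC-LS-08 | Event previews | Whether family, entertainment, and performance listings load completely | Registration is available; time and location are shown |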

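#### ✅ 5. **MyPage (Personal Center) Module**

| Case ID | Case name | Test point | Expected result |
| -------- | --------------------------- | ------------------------------------------------------------------ | ------------------------------------------------------------ |
| TC-MY-01 | Change avatar | Whether uploading and previewing an avatar works | The new avatar is shown |
| TC-MY-02 | Edit profile | Whether nickname, bio, and contact details can be edited | The page updates after saving |
| TC-MY-03 | Favorites management | Whether favorited posts and courses can be viewed | The "My Favorites" page shows them |
| TC-MY-04 | Sign up / log in / log out | Whether a new user can register and the login state changes correctly | A new user lands on the home page; an existing account can log out |
| TC-MY-05 | Role recognition | Whether regular users and moderators are distinguished | Different options are displayed per role |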

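#### ✅ 6. **Technical Implementation (Stability/Architecture) Tests**

| Case ID | Case name | Test point | Expected result |
| ---------- | ------------------------------- | ------------------------------------------------------------------ | -------------------------------------------- |
| TC-TECH-01 | SQLite read/write | Whether bulk course and post operations are stored and retrieved correctly | No data loss; response time is normal |
| TC-TECH-02 | MediaPipe model crash recovery | Whether the system reconnects after the camera is forcibly closed | A reconnect prompt is shown or it recovers automatically |
| TC-TECH-03 | Tailwind responsive styling | Whether pages adapt at different resolutions | No overflow; elements resize to fit |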
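TC-TECH-01 can be scripted with Python's built-in `sqlite3` module. The script writes a batch of rows in one transaction, reads them back, and compares the results. The sketch uses an in-memory database and a hypothetical `posts` table, because this document gives neither the project's real schema nor its response-time threshold.

```python
import sqlite3
import time


def bulk_roundtrip(n: int = 1000) -> float:
    """Insert n rows in one transaction, read them back, verify them; return elapsed seconds."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute("CREATE TABLE posts (id INTEGER PRIMARY KEY, content TEXT NOT NULL)")
        rows = [(i, f"post {i}") for i in range(n)]
        start = time.perf_counter()
        with conn:  # commits on success, rolls back if an exception is raised
            conn.executemany("INSERT INTO posts (id, content) VALUES (?, ?)", rows)
        stored = conn.execute("SELECT id, content FROM posts ORDER BY id").fetchall()
        elapsed = time.perf_counter() - start
    finally:
        conn.close()
    if stored != rows:
        raise AssertionError("data lost or altered during the round trip")
    return elapsed


if __name__ == "__main__":
    print(f"1000-row round trip took {bulk_roundtrip():.4f} s")
```

Pointing `sqlite3.connect` at a copy of the application's database file instead of `":memory:"` would exercise the real storage path.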

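### 🏎️ Game Control Module

| Case ID | Module | Case name | Preconditions | Test steps | Expected result | Priority |
| ---------- | ------------ | ---------------------------------- | --------------------------------------- | ------------------------------------------------------- | -------------------------------------------------------------- | -------- |
| TC-GAME-01 | Game control | Acceleration recognition | Camera working, program started | Raise the right hand with the thumb up | The car starts accelerating | High |
| TC-GAME-02 | Game control | Braking recognition | Same as above | Raise the left hand with the thumb up | The car starts decelerating | High |
| TC-GAME-03 | Game control | Left/right steering recognition | Same as above | Tilt the palm to the left | The car turns left | High |
| TC-GAME-04 | Game control | Continuous gesture switching | Program running | Switch quickly from accelerate → turn left → brake | Every step gets feedback; the game responds smoothly | High |
| TC-GAME-05 | Game control | Jitter interference | Program running, fingers jittering | Wiggle the fingers quickly with small movements | No false triggers; control stays stable | Medium |
| TC-GAME-06 | Game control | Virtual gamepad disconnect recovery | Gamepad emulation active | Disconnect the vgamepad port, then reconnect it | The program detects the interruption and tries to recover automatically | Medium |
| TC-GAME-07 | Game control | Performance at low frame rate | Simulated 20 fps camera | Try left/right turns, acceleration, and similar actions | Recognition lags; the UI shows a low-frame-rate warning | Medium |
| TC-GAME-08 | Game control | UI feedback accuracy | Camera running | Perform the acceleration gesture and watch the UI panel | Shows "Current action: Accelerate" and highlights the image region | Medium |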
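Jitter of the kind targeted by TC-GAME-05 is commonly handled by requiring a gesture to persist for several consecutive frames before it takes effect. The sketch below shows that technique. It is an assumption and not necessarily how the project filters jitter. `GestureDebouncer` and the gesture labels are hypothetical.

```python
from typing import Optional


class GestureDebouncer:
    """Report a new gesture only after it has been seen in `min_frames` consecutive frames."""

    def __init__(self, min_frames: int = 5) -> None:
        self.min_frames = min_frames
        self._candidate: Optional[str] = None  # gesture currently being counted
        self._count = 0  # consecutive frames in which the candidate was seen
        self.stable: Optional[str] = None  # last gesture that passed the filter

    def update(self, raw: Optional[str]) -> Optional[str]:
        """Feed one frame's raw label (None = no hand); return the debounced gesture."""
        if raw == self._candidate:
            self._count += 1
        else:
            self._candidate, self._count = raw, 1
        if self._count >= self.min_frames:
            self.stable = self._candidate
        return self.stable
```

`min_frames` trades stability for latency. At the 20 fps of TC-GAME-07, `min_frames=5` delays a new gesture by 5 / 20 = 0.25 s, which TC-GAME-04's rapid switching would also expose.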

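## 4. Test Program Implementation and Technical Support

Besides exercising the main system through its UI, the project includes two standalone test programs that verify recognition stability, input accuracy, and boundary scenarios. They cover the core logic paths and support frequent regression testing and offline analysis.

### 4.1 Gesture Recognition Test Program (wavecontrol-test module)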

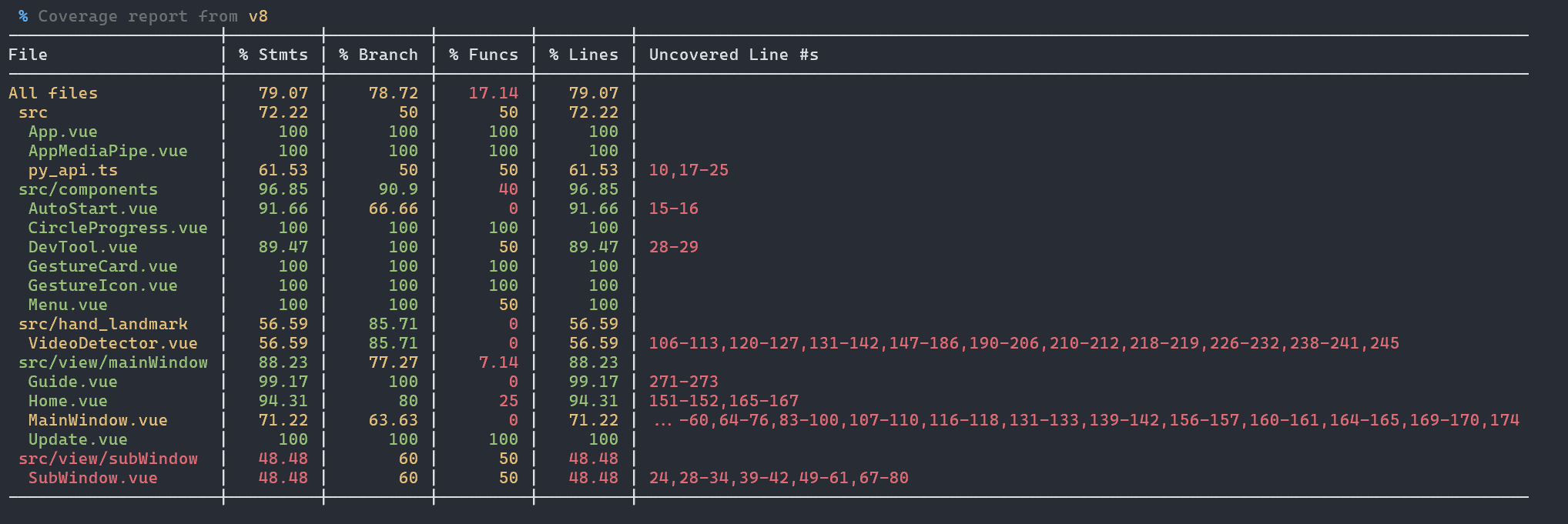

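The test scripts live under `wavecontrol-test/src/` on the `gesture` branch and are written in TypeScript with Vue 3. They combine MediaPipe real-time detection with the gesture-logic modules to verify system functionality.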

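#### 4.1.1 hand_landmark module

- **detector.ts**

  Core hand-landmark detection module. It wraps the MediaPipe calls and outputs the coordinates, confidence scores, and related data for the 21 hand landmarks in a uniform format.

  - Responsibilities: initialize the camera stream, bind callbacks, and encapsulate model parameters.
  - Role: supplies landmark data to `VideoDetector.vue` and `gesture_handler.ts`.

- **gesture_handler.ts**

  Gesture parsing and event dispatch module. It turns landmark data into concrete gesture actions (e.g., cursor control, click, jump).

  - Supports extending the gesture library with custom gestures.
  - Binds gestures to the game control module or to system control commands.

- **VideoDetector.vue**

  Vue component that shows the live camera feed with the landmark points overlaid (used in debug mode).

  - Provides a test UI panel for debugging the recognition of each gesture.
  - Displays the current FPS and real-time recognition results.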
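The step in which `gesture_handler.ts` turns the 21 landmarks into a gesture can be illustrated with a finger-state heuristic. This sketch is written in Python rather than TypeScript and is not the project's code. It uses MediaPipe's hand-landmark numbering:

- 4, 8, 12, 16, and 20 are the fingertips.
- 6, 10, 14, and 18 are the PIP joints.
- 3 is the thumb IP joint.
- 17 is the pinky MCP.

It assumes an upright hand in image coordinates, where y grows downward. The gesture labels are hypothetical names for rows 01, 02, 09, 10, and 11 of the main-control table.

```python
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float]  # (x, y) in normalized image coordinates; y grows downward

# MediaPipe Hands landmark indices used by this heuristic
THUMB_IP, THUMB_TIP, PINKY_MCP = 3, 4, 17
FINGER_TIPS: Dict[str, int] = {"index": 8, "middle": 12, "ring": 16, "pinky": 20}
FINGER_PIPS: Dict[str, int] = {"index": 6, "middle": 10, "ring": 14, "pinky": 18}

# Hypothetical labels keyed by the raised fingers, in thumb-to-pinky order
GESTURES: Dict[Tuple[str, ...], str] = {
    ("index",): "cursor",
    ("thumb", "index"): "left_click",
    ("thumb",): "move_right",
    ("index", "middle"): "jump",
    ("thumb", "index", "middle"): "jump_right",
}


def _dist(a: Point, b: Point) -> float:
    return ((a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2) ** 0.5


def raised_fingers(landmarks: Sequence[Point]) -> List[str]:
    """Return the names of extended fingers, ordered thumb to pinky."""
    if len(landmarks) != 21:
        raise ValueError("expected 21 hand landmarks")
    raised: List[str] = []
    # Thumb: extended if its tip is farther from the pinky MCP than its IP joint is.
    if _dist(landmarks[THUMB_TIP], landmarks[PINKY_MCP]) > _dist(landmarks[THUMB_IP], landmarks[PINKY_MCP]):
        raised.append("thumb")
    # Other fingers: extended if the tip sits above (smaller y than) the PIP joint.
    for name, tip in FINGER_TIPS.items():
        if landmarks[tip][1] < landmarks[FINGER_PIPS[name]][1]:
            raised.append(name)
    return raised


def classify(landmarks: Sequence[Point]) -> str:
    return GESTURES.get(tuple(raised_fingers(landmarks)), "unknown")
```

A tip-above-PIP test breaks down when the hand is rotated or tilted. A production handler would normally compare joint angles or normalize the landmarks relative to the wrist first.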

#### 4.1.2 Standalone Run Instructions and Debugging Tips