26 changed files with 129 additions and 353 deletions


+5 -0 .vscode/settings.json

BIN image/cropped_Right_1753179393.jpg

BIN image/cropped_Right_1753179532.jpg

BIN image/cropped_Right_1753179605.jpg

+0 -0 main.py

+44 -29 utils/GUI.py

BIN utils/__pycache__/finger_drawer.cpython-312.pyc

BIN utils/__pycache__/finger_drawer.cpython-38.pyc

BIN utils/__pycache__/gesture_data.cpython-312.pyc

BIN utils/__pycache__/gesture_data.cpython-38.pyc

BIN utils/__pycache__/hand_gesture.cpython-312.pyc

BIN utils/__pycache__/hand_gesture.cpython-38.pyc

BIN utils/__pycache__/index_finger.cpython-312.pyc

BIN utils/__pycache__/index_finger.cpython-38.pyc

BIN utils/__pycache__/kalman_filter.cpython-312.pyc

BIN utils/__pycache__/kalman_filter.cpython-38.pyc

BIN utils/__pycache__/model.cpython-312.pyc

BIN utils/__pycache__/model.cpython-38.pyc

BIN utils/__pycache__/process_images.cpython-312.pyc

BIN utils/__pycache__/process_images.cpython-38.pyc

BIN utils/__pycache__/video_recognition.cpython-312.pyc

BIN utils/__pycache__/video_recognition.cpython-38.pyc

+78 -44 utils/gesture_recognition.ipynb

+1 -1 utils/hand_gesture.py

+1 -1 utils/index_finger.py

+0 -278 utils/main.py

+5 -0 .vscode/settings.json

| @@ -0,0 +1,5 @@ | |||||

| { | |||||

| "python-envs.defaultEnvManager": "ms-python.python:conda", | |||||

| "python-envs.defaultPackageManager": "ms-python.python:conda", | |||||

| "python-envs.pythonProjects": [] | |||||

| } | |||||

BIN

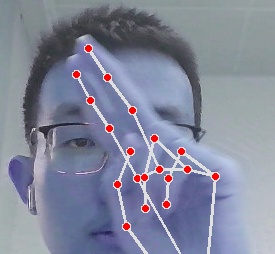

image/cropped_Right_1753179393.jpg

View File

BIN

image/cropped_Right_1753179532.jpg

View File

BIN

image/cropped_Right_1753179605.jpg

View File

+ 0

- 0

main.py

View File

+ 44

- 29

utils/GUI.py

View File

BIN

utils/__pycache__/finger_drawer.cpython-312.pyc

View File

BIN

utils/__pycache__/finger_drawer.cpython-38.pyc

View File

BIN

utils/__pycache__/gesture_data.cpython-312.pyc

View File

BIN

utils/__pycache__/gesture_data.cpython-38.pyc

View File

BIN

utils/__pycache__/hand_gesture.cpython-312.pyc

View File

BIN

utils/__pycache__/hand_gesture.cpython-38.pyc

View File

BIN

utils/__pycache__/index_finger.cpython-312.pyc

View File

BIN

utils/__pycache__/index_finger.cpython-38.pyc

View File

BIN

utils/__pycache__/kalman_filter.cpython-312.pyc

View File

BIN

utils/__pycache__/kalman_filter.cpython-38.pyc

View File

BIN

utils/__pycache__/model.cpython-312.pyc

View File

BIN

utils/__pycache__/model.cpython-38.pyc

View File

BIN

utils/__pycache__/process_images.cpython-312.pyc

View File

BIN

utils/__pycache__/process_images.cpython-38.pyc

View File

BIN

utils/__pycache__/video_recognition.cpython-312.pyc

View File

BIN

utils/__pycache__/video_recognition.cpython-38.pyc

View File

+ 78

- 44

utils/gesture_recognition.ipynb

View File

+ 1

- 1

utils/hand_gesture.py

View File

+ 1

- 1

utils/index_finger.py

View File

+ 0

- 278

utils/main.py

View File

| @@ -1,278 +0,0 @@ | |||||

| import cv2 | |||||

| import time | |||||

| import mediapipe | |||||

| import numpy as np | |||||

| from collections import deque | |||||

| from filterpy.kalman import KalmanFilter | |||||

| gesture_locked = {'Left':False,'Right':False} | |||||

| gesture_start_time = {'Left':0,'Right':0} | |||||

| buffer_start_time = {'Left':0,'Right':0} | |||||

| start_drag_time = {'Left':0,'Right':0} | |||||

| dragging = {'Left':False,'Right':False} | |||||

| drag_point = {'Left':(0, 0),'Right':(0, 0)} | |||||

| buffer_duration = {'Left':0.25,'Right':0.25} | |||||

| is_index_finger_up = {'Left':False,'Right':False} | |||||

| index_finger_second = {'Left':0,'Right':0} | |||||

| index_finger_tip = {'Left':0,'Right':0} | |||||

| trajectory = {'Left':[],'Right':[]} | |||||

| square_queue = deque() | |||||

| wait_time = 1.5 | |||||

| kalman_wait_time = 0.5 | |||||

| wait_box = 2 | |||||

| rect_draw_time = {'Left':0,'Right':0} | |||||

| last_drawn_box = {'Left':None,'Right':None} | |||||

| elapsed_time = {'Left':0,'Right':0} | |||||

| def clear_hand_states(detected_hand ='Both'): | |||||

| global gesture_locked, gesture_start_time, buffer_start_time, dragging, drag_point, buffer_duration,is_index_finger_up, trajectory,wait_time,kalman_wait_time, start_drag_time, rect_draw_time, last_drawn_box, wait_box, elapsed_time | |||||

| hands_to_clear = {'Left', 'Right'} | |||||

| if detected_hand == 'Both': | |||||

| hands_to_clear = hands_to_clear | |||||

| else: | |||||

| hands_to_clear -= {detected_hand} | |||||

| # Inverted selection: keep only the hand that was NOT detected | |||||

| for h in hands_to_clear: | |||||

| gesture_locked[h] = False | |||||

| gesture_start_time[h] = 0 | |||||

| buffer_start_time[h] = 0 | |||||

| dragging[h] = False | |||||

| drag_point[h] = (0, 0) | |||||

| buffer_duration[h] = 0.25 | |||||

| is_index_finger_up[h] = False | |||||

| trajectory[h].clear() | |||||

| start_drag_time[h] = 0 | |||||

| rect_draw_time[h] = 0 | |||||

| last_drawn_box[h] = None | |||||

| elapsed_time[h] = 0 | |||||

| # Clear the state of the hand(s) that were not detected | |||||

| kalman_filters = { | |||||

| 'Left': KalmanFilter(dim_x=4, dim_z=2), | |||||

| 'Right': KalmanFilter(dim_x=4, dim_z=2) | |||||

| } | |||||

| for key in kalman_filters: | |||||

| kalman_filters[key].x = np.array([0., 0., 0., 0.]) | |||||

| kalman_filters[key].F = np.array([[1, 0, 1, 0], [0, 1, 0, 1], [0, 0, 1, 0], [0, 0, 0, 1]]) | |||||

| # State transition matrix | |||||

| kalman_filters[key].H = np.array([[1, 0, 0, 0], [0, 1, 0, 0]]) | |||||

| # Observation matrix | |||||

| kalman_filters[key].P *= 1000. | |||||

| kalman_filters[key].R = 3 | |||||

| kalman_filters[key].Q = np.eye(4) * 0.01 | |||||

| def kalman_filter_point(hand_label, x, y): | |||||

| kf = kalman_filters[hand_label] | |||||

| kf.predict() | |||||

| kf.update([x, y]) | |||||

| # Update the state | |||||

| return (kf.x[0], kf.x[1]) | |||||

| def reset_kalman_filter(hand_label, x, y): | |||||

| kf = kalman_filters[hand_label] | |||||

| kf.x = np.array([x, y, 0., 0.]) | |||||

| kf.P *= 1000. | |||||

| # Reset | |||||

| mp_hands = mediapipe.solutions.hands | |||||

| hands = mp_hands.Hands( | |||||

| static_image_mode=False, | |||||

| max_num_hands=2, | |||||

| # Tracking a single hand is more stable | |||||

| min_detection_confidence=0.5, | |||||

| min_tracking_confidence=0.5 | |||||

| ) | |||||

| mp_drawing = mediapipe.solutions.drawing_utils | |||||

| clear_hand_states() | |||||

| def process_image(image): | |||||

| start_time = time.time() | |||||

| height, width = image.shape[:2] | |||||

| image = cv2.flip(image, 1) | |||||

| image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB) | |||||

| # Preprocess the frame | |||||

| results = hands.process(image) | |||||

| if results.multi_hand_landmarks: | |||||

| # If a hand is detected | |||||

| handness_str = '' | |||||

| index_finger_tip_str = '' | |||||

| if len(results.multi_hand_landmarks) == 1: | |||||

| clear_hand_states(detected_hand = results.multi_handedness[0].classification[0].label) | |||||

| # If only one hand is present, clear the other hand's data so that later conflicts do not cause instability | |||||

| for hand_idx in range(len(results.multi_hand_landmarks)): | |||||

| hand_21 = results.multi_hand_landmarks[hand_idx] | |||||

| mp_drawing.draw_landmarks(image, hand_21, mp_hands.HAND_CONNECTIONS) | |||||

| temp_handness = results.multi_handedness[hand_idx].classification[0].label | |||||

| handness_str += '{}:{}, '.format(hand_idx, temp_handness) | |||||

| is_index_finger_up[temp_handness] = False | |||||

| # Set to False first so that a lowered finger is not mistakenly recorded as raised | |||||

| cz0 = hand_21.landmark[0].z | |||||

| index_finger_second[temp_handness] = hand_21.landmark[7] | |||||

| index_finger_tip[temp_handness] = hand_21.landmark[8] | |||||

| # Index fingertip and the first joint below it | |||||

| index_x, index_y = int(index_finger_tip[temp_handness].x * width), int(index_finger_tip[temp_handness].y * height) | |||||

| if all(index_finger_second[temp_handness].y < hand_21.landmark[i].y for i in range(21) if i not in [7, 8]) and index_finger_tip[temp_handness].y < index_finger_second[temp_handness].y: | |||||

| is_index_finger_up[temp_handness] = True | |||||

| # If the fingertip and the second joint are higher than every other landmark of the hand, treat it as a "pointing" gesture | |||||

| if is_index_finger_up[temp_handness]: | |||||

| if not gesture_locked[temp_handness]: | |||||

| if gesture_start_time[temp_handness] == 0: | |||||

| gesture_start_time[temp_handness] = time.time() | |||||

| # Record the time at which the index finger was raised | |||||

| elif time.time() - gesture_start_time[temp_handness] > wait_time: | |||||

| dragging[temp_handness] = True | |||||

| gesture_locked[temp_handness] = True | |||||

| drag_point[temp_handness] = (index_x, index_y) | |||||

| # If the index finger has been raised for longer than the preset wait time, treat it as a "pointing" gesture | |||||

| buffer_start_time[temp_handness] = 0 | |||||

| # Reset the buffer timer whenever a raised index finger is detected | |||||

| else: | |||||

| if buffer_start_time[temp_handness] == 0: | |||||

| buffer_start_time[temp_handness] = time.time() | |||||

| elif time.time() - buffer_start_time[temp_handness] > buffer_duration[temp_handness]: | |||||

| gesture_start_time[temp_handness] = 0 | |||||

| gesture_locked[temp_handness] = False | |||||

| dragging[temp_handness] = False | |||||

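| # If the buffer time exceeds the configured duration, the pointing gesture has ended | |||||

| # This prevents a single misrecognized frame from wrongly clearing the pointing gesture | |||||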

| if dragging[temp_handness]: | |||||

| if start_drag_time[temp_handness] == 0: | |||||

| start_drag_time[temp_handness] = time.time() | |||||

| reset_kalman_filter(temp_handness, index_x, index_y) | |||||

| # Re-initialize the filter each time a new line is drawn | |||||

| smooth_x, smooth_y = kalman_filter_point(temp_handness, index_x, index_y) | |||||

| drag_point[temp_handness] = (index_x, index_y) | |||||

| index_finger_radius = max(int(10 * (1 + (cz0 - index_finger_tip[temp_handness].z) * 5)), 0) | |||||

| cv2.circle(image, drag_point[temp_handness], index_finger_radius, (0, 0, 255), -1) | |||||

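| # Build a circle whose radius depends on the depth distance from the palm root | |||||

| # It shows that the pointing gesture has started | |||||

| # Matches the depth points built below; the same multiplier is used directly | |||||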

| drag_point_smooth = (smooth_x, smooth_y) | |||||

| if time.time() - start_drag_time[temp_handness] > kalman_wait_time: | |||||

| trajectory[temp_handness].append(drag_point_smooth) | |||||

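| # The Kalman filter is very unstable right after initialization; the first few frames usually carry fairly severe noise | |||||

| # So wait until those first frames have passed before adding points to the trajectory list | |||||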

| else: | |||||

| if len(trajectory[temp_handness]) > 4: | |||||

| contour = np.array(trajectory[temp_handness], dtype=np.int32) | |||||

| rect = cv2.minAreaRect(contour) | |||||

| box = cv2.boxPoints(rect) | |||||

| box = np.int0(box) | |||||

| rect_draw_time[temp_handness] = time.time() | |||||

| last_drawn_box[temp_handness] = box | |||||

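| # When the pointing gesture ends and the trajectory list holds more than four points | |||||

| # Use the minimum-area bounding rectangle to turn the irregular drawn shape into a rectangle | |||||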

| start_drag_time[temp_handness] = 0 | |||||

| trajectory[temp_handness].clear() | |||||

| for i in range(1, len(trajectory[temp_handness])): | |||||

| pt1 = (int(trajectory[temp_handness][i-1][0]), int(trajectory[temp_handness][i-1][1])) | |||||

| pt2 = (int(trajectory[temp_handness][i][0]), int(trajectory[temp_handness][i][1])) | |||||

| cv2.line(image, pt1, pt2, (0, 0, 255), 2) | |||||

| # Draw the lines connecting the trajectory points | |||||

| if last_drawn_box[temp_handness] is not None: | |||||

| elapsed_time[temp_handness] = time.time() - rect_draw_time[temp_handness] | |||||

| if elapsed_time[temp_handness] < wait_box: | |||||

| cv2.drawContours(image, [last_drawn_box[temp_handness]], 0, (0, 255, 0), 2) | |||||

| # Keep the rectangle on screen for a while; a single frame is too brief for the result to be visible | |||||

| elif elapsed_time[temp_handness] >= wait_box - 0.1: | |||||

| box = last_drawn_box[temp_handness] | |||||

| x_min = max(0, min(box[:, 0])) | |||||

| y_min = max(0, min(box[:, 1])) | |||||

| x_max = min(image.shape[1], max(box[:, 0])) | |||||

| y_max = min(image.shape[0], max(box[:, 1])) | |||||

| cropped_image = image[y_min:y_max, x_min:x_max] | |||||

| filename = f"../image/cropped_{temp_handness}_{int(time.time())}.jpg" | |||||

| cv2.imwrite(filename, cropped_image) | |||||

| last_drawn_box[temp_handness] = None | |||||

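| # Do not crop the image right after drawing, or the hand may be cropped into it by mistake | |||||

| # Wait a while so the hand has time to move away, then extract the rectangle from that frame | |||||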

| for i in range(21): | |||||

| cx = int(hand_21.landmark[i].x * width) | |||||

| cy = int(hand_21.landmark[i].y * height) | |||||

| cz = hand_21.landmark[i].z | |||||

| depth_z = cz0 - cz | |||||

| radius = max(int(6 * (1 + depth_z*5)), 0) | |||||

| if i == 0: | |||||

| image = cv2.circle(image, (cx, cy), radius, (255, 255, 0), thickness=-1) | |||||

| if i == 8: | |||||

| image = cv2.circle(image, (cx, cy), radius, (255, 165, 0), thickness=-1) | |||||

| index_finger_tip_str += '{}:{:.2f}, '.format(hand_idx, depth_z) | |||||

| if i in [1,5,9,13,17]: | |||||

| image = cv2.circle(image, (cx, cy), radius, (0, 0, 255), thickness=-1) | |||||

| if i in [2,6,10,14,18]: | |||||

| image = cv2.circle(image, (cx, cy), radius, (75, 0, 130), thickness=-1) | |||||

| if i in [3,7,11,15,19]: | |||||

| image = cv2.circle(image, (cx, cy), radius, (238, 130, 238), thickness=-1) | |||||

| if i in [4,12,16,20]: | |||||

| image = cv2.circle(image, (cx, cy), radius, (0, 255, 255), thickness=-1) | |||||

| # Extract every landmark and give it a color and a radius based on its depth relative to the palm root | |||||

| scaler= 1 | |||||

| image = cv2.putText(image,handness_str, (25*scaler, 100*scaler), cv2.FONT_HERSHEY_SIMPLEX, 1.25*scaler, (0,0,255), 2,) | |||||

| image = cv2.putText(image,index_finger_tip_str, (25*scaler, 150*scaler), cv2.FONT_HERSHEY_SIMPLEX, 1.25*scaler, (0,0,255), 2,) | |||||

| spend_time = time.time() - start_time | |||||

| if spend_time > 0: | |||||

| FPS = 1.0 / spend_time | |||||

| else: | |||||

| FPS = 0 | |||||

| image = cv2.putText(image,'FPS '+str(int(FPS)),(25*scaler,50*scaler),cv2.FONT_HERSHEY_SIMPLEX,1.25*scaler,(0,0,255),2,) | |||||

| # Display the FPS, the detected hands, and the index fingertip depth relative to the palm root | |||||

| else: | |||||

| clear_hand_states() | |||||

| # If no hand is detected, clear all state | |||||

| return image | |||||

| ######################################## | |||||

| cap = cv2.VideoCapture(1) | |||||

| cap.open(0) | |||||

| while cap.isOpened(): | |||||

| success, frame = cap.read() | |||||

| if not success: | |||||

| print("Camera Error") | |||||

| break | |||||

| frame = process_image(frame) | |||||

| cv2.imshow('Video', frame) | |||||

| if cv2.waitKey(1) & 0xFF == ord('q'): | |||||

| break | |||||

| cap.release() | |||||

| cv2.destroyAllWindows() | |||||
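
Review note on the removed `utils/main.py`: the file kept per-hand gesture state in module-level dicts, and its comments describe two timers. A 1.5 s dwell (`wait_time`) must pass before pointing locks, and a 0.25 s buffer (`buffer_duration`) keeps one misdetected frame from ending the gesture. The sketch below restates only that timing logic as a small class that can be tested without a camera. `PointingState`, `update`, and the explicit `now` argument are hypothetical names introduced here for illustration; they are not taken from the refactored modules in this change, which may organize the logic differently.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PointingState:
    """Per-hand pointing state (hypothetical class, not part of this diff)."""

    wait_time: float = 1.5         # dwell before pointing locks (value from the removed file)
    buffer_duration: float = 0.25  # grace period before a lowered finger ends pointing
    gesture_start: Optional[float] = None
    buffer_start: Optional[float] = None
    locked: bool = False

    def update(self, finger_up: bool, now: float) -> bool:
        """Feed one frame's detection; return True while pointing is active."""
        if finger_up:
            # Any raised frame refreshes the grace period.
            self.buffer_start = None
            if not self.locked:
                if self.gesture_start is None:
                    self.gesture_start = now
                elif now - self.gesture_start > self.wait_time:
                    self.locked = True
        else:
            if self.buffer_start is None:
                self.buffer_start = now
            elif now - self.buffer_start > self.buffer_duration:
                # The finger stayed down longer than the buffer: end the gesture.
                self.gesture_start = None
                self.locked = False
        return self.locked


if __name__ == "__main__":
    state = PointingState()
    # (timestamp in seconds, index finger detected as raised)
    frames = [(10.0, True), (11.0, True), (11.6, True), (11.7, False),
              (11.8, True), (11.9, False), (12.2, False)]
    print([state.update(up, t) for t, up in frames])
    # -> [False, False, True, True, True, True, False]
```

Passing the timestamp in, rather than calling `time.time()` inside the method, is a design choice made for this sketch so that the single dropped frame at 11.7 s can be replayed deterministically: the gesture stays locked through it and only ends once the finger has been down for more than 0.25 s.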